Azure fault domains vs availability zones: Achieving zero downtime migrations

One of the challenges of operating production-ready enterprise systems in the cloud is ensuring applications remain up to date and secure, and benefit from the latest features. This can include operating system or application version upgrades, but it also extends to advancements in cloud provider offerings or the retirement of older ones. Recently, NetApp Instaclustr undertook a migration activity for (almost) all our Azure fault domain customers to availability zones and Standard SKU IP addresses.

Understanding Azure fault domains vs availability zones

“Azure fault domain vs availability zone” reflects a critical distinction in ensuring high availability and fault tolerance. Fault domains offer physical separation within a data center, while availability zones expand on this by distributing workloads across data centers within a region. This enhances resiliency against failures, making availability zones a clear step forward.

The need for migrating from fault domains to availability zones

NetApp Instaclustr has supported Azure as a cloud provider for our Managed open source offerings since 2016. Originally this offering was distributed across fault domains to ensure high availability using “Basic SKU public IP Addresses”, but this solution had some drawbacks when performing particular types of maintenance. Once Azure released availability zones in several regions, we extended our Azure support to them. Availability zones have a number of benefits, including more explicit placement of additional resources, and we leveraged “Standard SKU public IPs” as part of this deployment.

When we introduced availability zones, we encouraged customers to provision new workloads in them. We also supported migrating workloads to availability zones, but we had not pushed existing deployments to do the migration. This was initially due to the reduced number of regions that supported availability zones.

In early 2024, we were notified that Azure would be retiring support for Basic SKU public IP addresses in September 2025. Notably, no new Basic SKU public IPs would be created after March 1, 2025. For us and our customers, this had the potential to impact cluster availability and stability – as we would be unable to add nodes, and some replacement operations would fail.

Very quickly we identified that we needed to migrate all customer deployments from Basic SKU to Standard SKU public IPs. Unfortunately, this operation involves node-level downtime, as we need to stop each individual virtual machine, detach the IP address, upgrade the IP address to the new SKU, and then reattach and start the instance. For customers who are operating their applications in line with our recommendations, node-level downtime does not have an impact on overall application availability; however, it can increase strain on the remaining nodes.

Given that we needed to perform this potentially disruptive maintenance by a specific date, we decided to evaluate the migration of existing customers to Azure availability zones.

Key migration consideration for Cassandra clusters

As with any migration, we were looking at performing this with zero application downtime, with minimal additional infrastructure costs, and as safely as possible. For some customers, we also needed to ensure that we did not change the contact IP addresses of the deployment, as this may require application updates from their side. We quickly worked out several ways to achieve this migration, each with its own set of pros and cons.

For our Cassandra customers, our go-to method for changing cluster topology is a data center migration. This is our zero-downtime migration method, which we have completed hundreds of times and have vast experience in executing. The benefit here is that we can be extremely confident of application uptime through the entire operation, and confident in our ability to pause and reverse the migration if issues are encountered. The major drawback to a data center migration is the increased infrastructure cost during the migration period, as you effectively need to have both your source and destination data centers running simultaneously throughout the operation. The other item of note is that you will need to update your cluster contact points to the new data center.

For clusters running other applications, or customers who are more cost conscious, we evaluated doing a “node by node” migration from Basic SKU IP addresses in fault domains, to Standard SKU IP addresses in availability zones. This does not have any short-term increased infrastructure cost, however the upgrade from Basic SKU public IP to Standard SKU is irreversible, and different types of public IPs cannot coexist within the same fault domain. Additionally, this method comes with reduced rollback abilities. Therefore, we needed to devise a plan to minimize risks for our customers and ensure a seamless migration.

Developing a zero-downtime node-by-node migration strategy

To achieve a zero-downtime “node by node” migration, we explored several options, one of which involved building tooling to migrate the instances in the cloud provider but preserve all existing configurations. The tooling automates the migration process as follows:

- Begin with stopping the first VM in the cluster. For cluster availability, ensure that only 1 VM is stopped at any time.

- Create an OS disk snapshot and verify its success, then do the same for data disks

- Ensure all snapshots are created and generate new disks from snapshots

- Create a new network interface card (NIC) and confirm its status is green

- Create a new VM and attach the disks, confirming that the new VM is up and running

- Update the private IP address and verify the change

- The public IP SKU will then be upgraded, making sure this operation is successful

- The public IP will then be reattached to the VM

- Start the VM
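The sequence above can be sketched as a small orchestration loop. This is a minimal illustration only: the step labels and the `run_step` callback are hypothetical stand-ins, not the actual Instaclustr tooling or Azure SDK calls. It shows the two properties the list emphasizes: steps run in a fixed order with each one verified before the next, and only one VM is processed at a time.

```python
from typing import Callable, Iterable, List

# Ordered steps taken from the list above. The identifiers are
# hypothetical labels, not real Azure API operations.
MIGRATION_STEPS = [
    "stop_vm",
    "snapshot_os_disk",
    "snapshot_data_disks",
    "create_disks_from_snapshots",
    "create_nic",
    "create_vm_and_attach_disks",
    "update_private_ip",
    "upgrade_public_ip_sku",
    "reattach_public_ip",
    "start_vm",
]


def migrate_cluster(vms: Iterable[str], run_step: Callable[[str, str], bool]) -> List[str]:
    """Migrate VMs strictly one at a time and return the VMs that completed.

    run_step(vm, step) is an assumed callback that performs one step and
    returns True only once that step has been verified as successful.
    """
    migrated = []
    for vm in vms:  # sequential loop: at most one VM is stopped at any time
        for step in MIGRATION_STEPS:
            if not run_step(vm, step):
                # Halt the whole rollout rather than touch another node.
                raise RuntimeError(f"{step} failed on {vm}; halting migration")
        migrated.append(vm)
    return migrated
```

Raising on the first unverified step keeps the rest of the cluster untouched, which matters here because the public IP SKU upgrade cannot be reversed.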

Even though the disks are created from snapshots of the original disks, our testing uncovered several discrepancies between the settings of the original VM and those of the new VM. For instance, certain configurations, such as caching policies, did not automatically carry over, requiring manual adjustments to align with our managed standards.

Recognizing these challenges, we decided to extend our existing node replacement mechanism to streamline our migration process. This is done so that a new instance is provisioned with a new OS disk with the same IP and application data. The new node is configured by the Instaclustr Managed Platform to be the same as the original node.

The next challenge: our existing solution was built so that the replacement node is provisioned to be exactly the same as the original. However, for this operation we needed the new node to be placed in an availability zone instead of the same fault domain. This required us to extend the replacement operation so that when we triggered the replacement, the new node was placed in the desired availability zone. Once this work was completed, we had a replacement tool that ensured that the new instance was correctly provisioned in the availability zone, with a Standard SKU, and without data loss.

Now that we had two very viable options, we went back to our existing Azure customers to outline the problem space, and the operations that needed to be completed. We worked with all impacted customers on the best migration path for their specific use case or application and worked out the best time to complete the migration. Where possible, we first performed the migration on any test or QA environments before moving onto production environments.

Collaborative customer migration success

Some of our Cassandra customers opted to perform the migration using our data center migration path, however most customers opted for the node-by-node method. We successfully migrated the existing Azure fault domain clusters over to the Availability Zone that we were targeting, with only a very small number of clusters remaining. These clusters are operating in Azure regions which do not yet support availability zones, but we were able to successfully upgrade their public IP from Basic SKUs that are set for retirement to Standard SKUs.

No matter what provider you use, the pace of development in cloud computing can require significant effort to support ongoing maintenance and feature adoption to take advantage of new opportunities. For business-critical applications, being able to migrate to new infrastructure and leverage these opportunities while understanding the limitations and impact they have on other services is essential.

NetApp Instaclustr has a depth of experience in supporting business-critical applications in the cloud. You can read more about another large-scale migration we completed in The World’s Largest Apache Kafka and Apache Cassandra Migration, or head over to our console for a free trial of the Instaclustr Managed Platform.

The post Azure fault domains vs availability zones: Achieving zero downtime migrations appeared first on Instaclustr.

Integrating support for AWS PrivateLink with Apache Cassandra® on the NetApp Instaclustr Managed Platform

Discover how NetApp Instaclustr leverages AWS PrivateLink for secure and seamless connectivity with Apache Cassandra®. This post explores the technical implementation, challenges faced, and the innovative solutions we developed to provide a robust, scalable platform for your data needs.

Last year, NetApp achieved a significant milestone by fully integrating AWS PrivateLink support for Apache Cassandra® into the NetApp Instaclustr Managed Platform. Read our AWS PrivateLink support for Apache Cassandra General Availability announcement here. Our Product Engineering team made remarkable progress in incorporating this feature into various NetApp Instaclustr application offerings. NetApp now offers AWS PrivateLink support as an Enterprise Feature add-on for the Instaclustr Managed Platform for Cassandra, Kafka®, OpenSearch®, Cadence®, and Valkey™.

The journey to support AWS PrivateLink for Cassandra involved considerable engineering effort and numerous development cycles to create a solution tailored to the unique interaction between the Cassandra application and its client driver. After extensive development and testing, our product engineering team successfully implemented an enterprise ready solution. Read on for detailed insights into the technical implementation of our solution.

What is AWS PrivateLink?

PrivateLink is a networking solution from AWS that provides private connectivity between Virtual Private Clouds (VPCs) without exposing any traffic to the public internet. This solution is ideal for customers who require a unidirectional network connection (often due to compliance concerns): connections can only be initiated from the source VPC to the managed cluster hosted in our platform (the target VPC), and not the other way around. Additionally, PrivateLink simplifies network management by eliminating the need to manage overlapping CIDRs between VPCs.

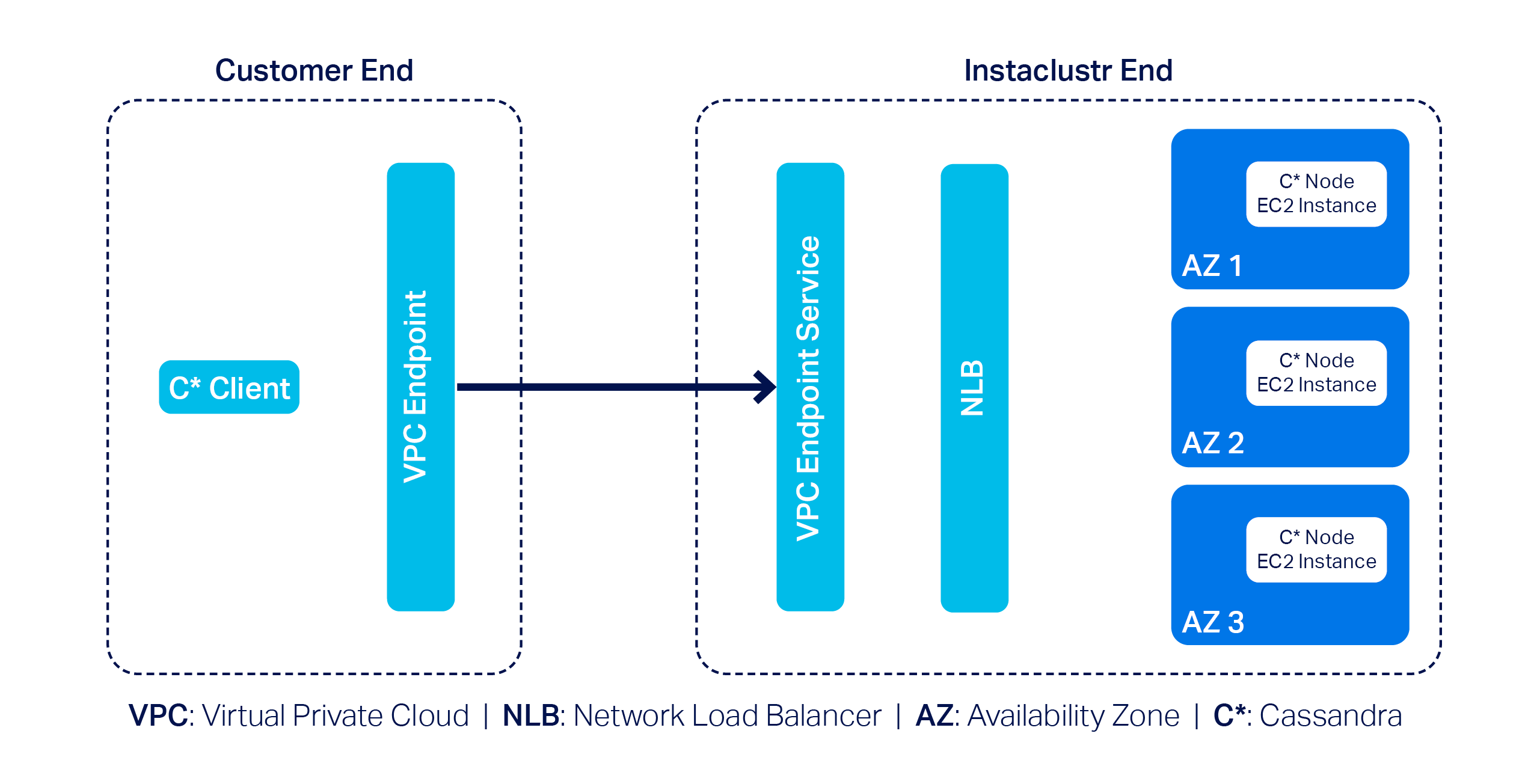

To get an idea of what major building blocks are involved in making up an end-to-end AWS PrivateLink solution for Cassandra, take a look at the following diagram—it’s a simplified representation of the infrastructure used to support a PrivateLink cluster:

In this example, we have a 3-node Cassandra cluster at the far right with one Cassandra node per Availability Zone (or AZ). Next, we have the VPC Endpoint Service and a Network Load Balancer (NLB). The Endpoint Service is essentially the AWS PrivateLink, and by design AWS needs it to be backed by an NLB–that’s pretty much what we have to manage on our side.

On the customer side, they must create a VPC Endpoint that enables them to privately connect to the AWS PrivateLink on our end; naturally, customers will also have to use one or more Cassandra clients to connect to the cluster.

AWS PrivateLink support with Instaclustr for Apache Cassandra

To incorporate AWS PrivateLink support with Instaclustr for Apache Cassandra on our platform, we came across a few technical challenges. First and foremost, the primary challenge was relatively straightforward: Cassandra clients need to talk to each individual node in a cluster.

However, the problem is that nodes in an AWS PrivateLink cluster are only assigned private IPs; that is what the nodes would announce by default when Cassandra clients attempt to discover the topology of the cluster. Cassandra clients cannot do much with the received private IPs as they cannot be used to connect to the nodes directly in an AWS PrivateLink setup.

We devised a plan of attack to get around this problem:

- Make each individual Cassandra node listen for CQL queries on unique ports.

- Configure the NLB so it can route traffic to the appropriate node based on the relevant unique port.

- Let clients implement the AddressTranslator interface from the Cassandra driver. The custom address translator will need to translate the received private IPs to one of the VPC Endpoint Elastic Network Interface (or ENI) IPs without altering the corresponding unique ports.

To understand this approach better, consider the following example:

Suppose we have a 3-node Cassandra cluster. According to the proposed approach, we will need to do the following:

- Let the nodes listen on 172.16.0.1:6001 (in AZ1), 172.16.0.2:6002 (in AZ2) and 172.16.0.3:6003 (in AZ3)

- Configure the NLB to listen on the same set of ports

- Define and associate target groups based on the port. For instance, the listener on port 6002 will be associated with a target group containing only the node that is listening on port 6002.

- As for how the custom address translator is expected to work, let’s assume the VPC Endpoint ENI IPs are 192.168.0.1 (in AZ1), 192.168.0.2 (in AZ2) and 192.168.0.3 (in AZ3). The address translator should translate received addresses like so:

  - 172.16.0.1:6001 --> 192.168.0.1:6001

  - 172.16.0.2:6002 --> 192.168.0.2:6002

  - 172.16.0.3:6003 --> 192.168.0.3:6003

The proposed approach not only solves the connectivity problem but also allows for connecting to appropriate nodes based on query plans generated by load balancing policies.
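The translation rule in the example can be expressed in a few lines. The sketch below is a standalone illustration using the example addresses from the text; it is not the Cassandra driver's AddressTranslator interface, and the `PRIVATE_TO_ENI` table and `translate` function are names made up for this example.

```python
from typing import Dict, Tuple

# Example values from the text: node private IP -> VPC Endpoint ENI IP
# in the same AZ. Hard-coded here purely for illustration.
PRIVATE_TO_ENI: Dict[str, str] = {
    "172.16.0.1": "192.168.0.1",  # AZ1
    "172.16.0.2": "192.168.0.2",  # AZ2
    "172.16.0.3": "192.168.0.3",  # AZ3
}


def translate(address: Tuple[str, int]) -> Tuple[str, int]:
    """Swap the private IP for the matching ENI IP and keep the port unchanged."""
    host, port = address
    # Unknown hosts are returned untouched so the caller can decide what to do.
    return PRIVATE_TO_ENI.get(host, host), port


print(translate(("172.16.0.2", 6002)))
```

Because the port is preserved, the NLB listener on that port can still route the connection to the single node that owns it.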

Around the same time, we came up with a slightly modified approach as well: we realized the need for address translation can be mostly mitigated if we make the Cassandra nodes return the VPC Endpoint ENI IPs in the first place.

But the excitement did not last for long! Why? Because we quickly discovered a key problem: there is a limit to the number of listeners that can be added to any given AWS NLB of just 50.

While 50 is certainly a decent limit, the way we designed our solution meant we wouldn’t be able to provision a cluster with more than 50 nodes. This was quickly deemed to be an unacceptable limitation as it is not uncommon for a cluster to have more than 50 nodes; many Cassandra clusters in our fleet have hundreds of nodes. We had to abandon the idea of address translation and start thinking about alternative approaches.

Introducing Shotover Proxy

We were disappointed but did not lose hope. Soon after, we devised a practical solution centred around using one of our open source products: Shotover Proxy.

Shotover Proxy is used with Cassandra clusters to support AWS PrivateLink on the Instaclustr Managed Platform. What is Shotover Proxy, you ask? Shotover is a layer 7 database proxy built to allow developers, admins, DBAs, and operators to modify in-flight database requests. By managing database requests in transit, Shotover gives NetApp Instaclustr customers AWS PrivateLink’s simple and secure network setup with the many benefits of Cassandra.

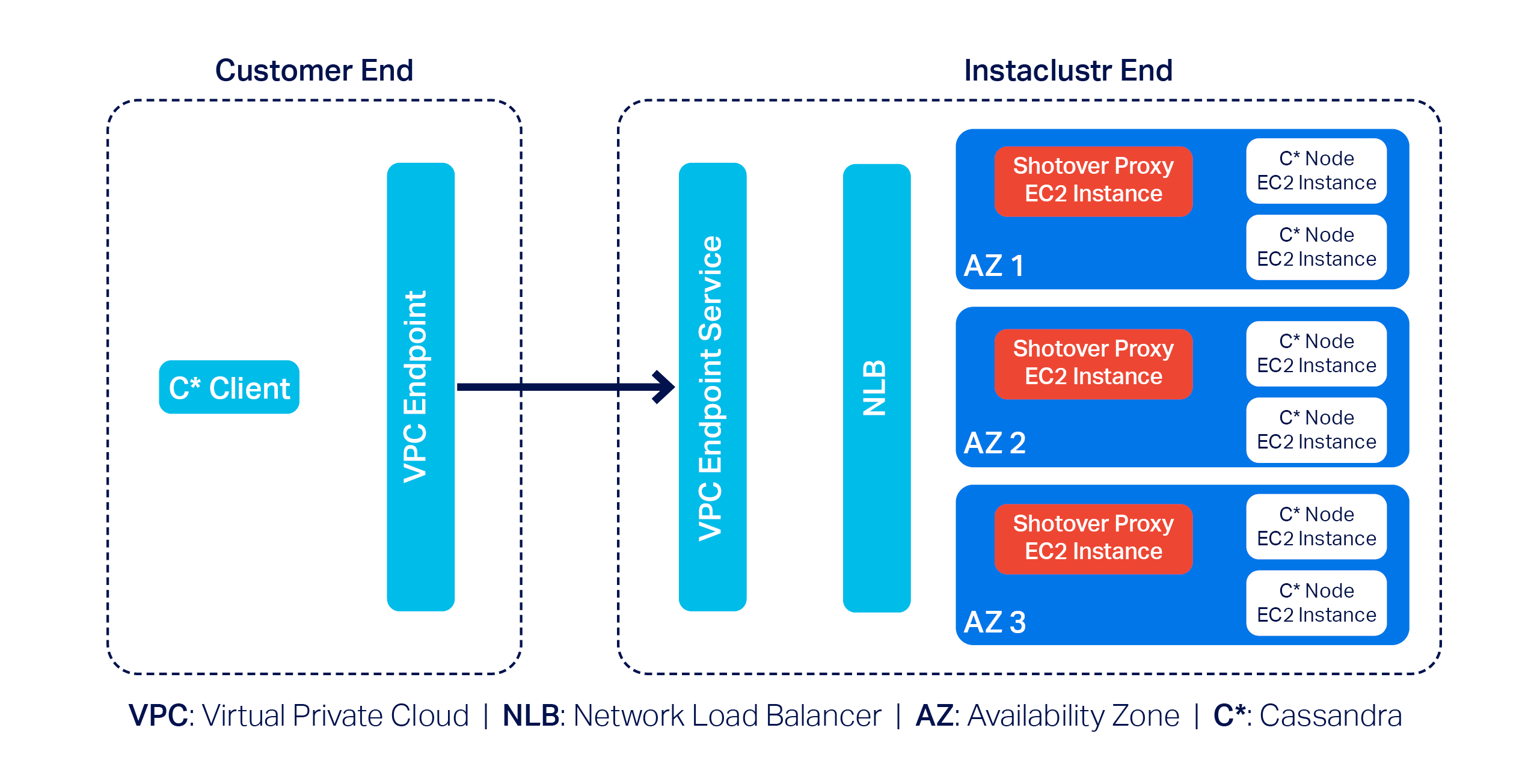

Below is an updated version of the previous diagram that introduces some Shotover nodes in the mix:

As you can see, each AZ now has a dedicated Shotover proxy node.

In the above diagram, we have a 6-node Cassandra cluster. The Cassandra cluster sitting behind the Shotover nodes is an ordinary Private Network Cluster. The role of the Shotover nodes is to manage client requests to the Cassandra nodes while masking the real Cassandra nodes behind them. To the Cassandra client, the Shotover nodes appear to be Cassandra nodes, and it is only them that make up the entire cluster! This is the secret recipe for AWS PrivateLink for Instaclustr for Apache Cassandra that enabled us to get past the challenges discussed earlier.

So how is this model made to work?

Shotover can alter certain requests from, and responses to, the client. It can examine the tokens allocated to the Cassandra nodes in its own AZ (aka rack) and claim to be the owner of all those tokens. This essentially makes each Shotover node appear to be an aggregation of the nodes in its own rack.

Given the purposely crafted topology and token allocation metadata, while the client directs queries to the Shotover node, the Shotover node in turn can pass them on to the appropriate Cassandra node and then transparently send responses back. It is worth noting that the Shotover nodes themselves do not store any data.
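To make the token aggregation idea concrete, here is a minimal sketch of how a per-rack view could be derived from the real topology. It is an illustration under assumed data shapes, not Shotover's actual implementation: the `Node` type, the `aggregate_by_rack` function, and the example addresses and tokens are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Node(NamedTuple):
    address: str
    rack: str  # one rack per AZ in this design
    tokens: List[int]


def aggregate_by_rack(nodes: List[Node], proxy_by_rack: Dict[str, str]) -> Dict[str, List[int]]:
    """Return proxy address -> every token owned by Cassandra nodes in that proxy's rack."""
    tokens_by_rack: Dict[str, List[int]] = defaultdict(list)
    for node in nodes:
        tokens_by_rack[node.rack].extend(node.tokens)
    # Each proxy advertises the union of its rack's tokens as its own.
    return {proxy_by_rack[rack]: sorted(tokens) for rack, tokens in tokens_by_rack.items()}


# A 6-node cluster spread over 3 racks, fronted by one proxy per rack.
cluster = [
    Node("10.0.1.1", "az1", [-90, 10]),
    Node("10.0.1.2", "az1", [-40, 60]),
    Node("10.0.2.1", "az2", [-80, 20]),
    Node("10.0.2.2", "az2", [-30, 70]),
    Node("10.0.3.1", "az3", [-70, 30]),
    Node("10.0.3.2", "az3", [-20, 80]),
]
proxies = {"az1": "proxy-az1", "az2": "proxy-az2", "az3": "proxy-az3"}
advertised = aggregate_by_rack(cluster, proxies)
print(advertised["proxy-az1"])
```

From the client's point of view the cluster then consists of three nodes, one per rack, regardless of how many Cassandra nodes sit behind each proxy.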

Because we only have 1 Shotover node per AZ in this design and there may be at most about 5 AZs per region, we only need that many listeners in the NLB to make this mechanism work. As such, the 50-listener limit on the NLB was no longer a problem.
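The listener arithmetic behind this is simple enough to write down. The sketch below only encodes the counting rule described in the text (one listener per node in the first design, one per AZ with Shotover); the function names are illustrative.

```python
NLB_LISTENER_LIMIT = 50  # per-NLB listener limit cited in the text


def listeners_needed(node_count: int, az_count: int, use_shotover: bool) -> int:
    """One listener per Cassandra node, or one per AZ when a Shotover node fronts each AZ."""
    return az_count if use_shotover else node_count


def fits_on_one_nlb(node_count: int, az_count: int, use_shotover: bool) -> bool:
    return listeners_needed(node_count, az_count, use_shotover) <= NLB_LISTENER_LIMIT


# A 300-node cluster across 3 AZs under each design.
print(fits_on_one_nlb(300, 3, use_shotover=False))
print(fits_on_one_nlb(300, 3, use_shotover=True))
```

With Shotover, the listener count depends only on the number of AZs, so cluster size no longer interacts with the limit.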

The use of Shotover to manage client driver and cluster interoperability may sound straightforward to implement, but developing it was a year-long undertaking. As described above, the initial months of development were devoted to engineering CQL queries on unique ports and the AddressTranslator interface from the Cassandra driver to gracefully manage client connections to the Cassandra cluster. While this solution did successfully provide support for AWS PrivateLink with a Cassandra cluster, we knew that the 50-listener limit on the NLB was a barrier to use and wanted to provide our customers with a solution that could be used for any Cassandra cluster, regardless of node count.

The next few months of engineering were devoted to a proof of concept of an alternative solution, with the goal of investigating how Shotover could manage client requests for a Cassandra cluster with any number of nodes. Once that approach was successfully proved, subsequent effort went into stability testing the new solution, resulting in the stable solution described above.

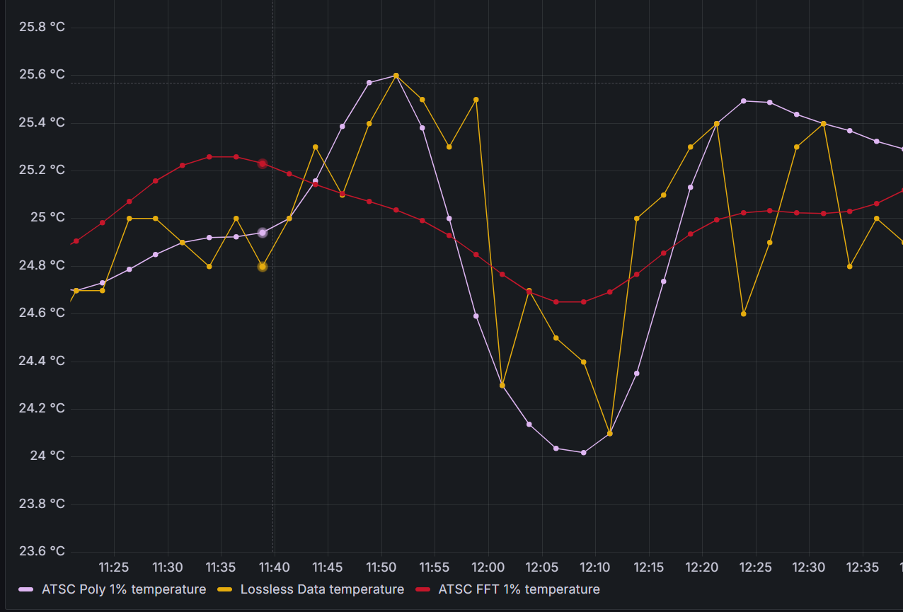

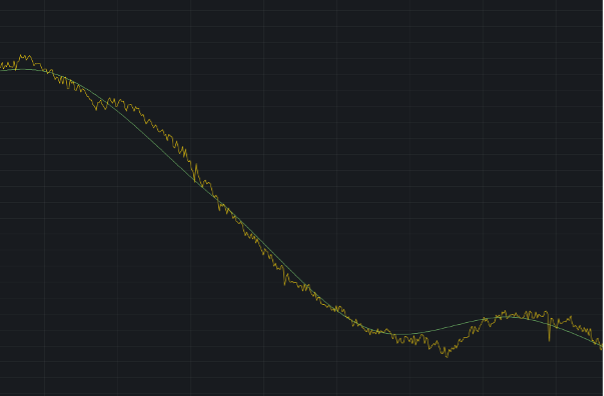

We have also conducted performance testing to evaluate the relative performance of a PrivateLink-enabled Cassandra cluster compared to its non-PrivateLink counterpart. We ran multiple iterations of performance testing, as test cases identified some adjustments to Shotover; after these, the throughput and latency of the PrivateLink-enabled Cassandra cluster measured close to those of a standard Cassandra cluster.

Related content: Read more about creating an AWS PrivateLink-enabled Cassandra cluster on the Instaclustr Managed Platform

The following was our experimental setup for identifying the max throughput, in terms of operations per second, of a PrivateLink-enabled Cassandra cluster in comparison to a non-PrivateLink Cassandra cluster:

- Baseline node size: i3en.xlarge

- Shotover Proxy node size on Cassandra Cluster: CSO-PRD-c6gd.medium-54

- Cassandra version: 4.1.3

- Shotover Proxy version: 0.2.0

- Other configuration: Repair and backup disabled, Client Encryption disabled

Throughput results

| Operation | Operation rate with PrivateLink and Shotover (ops/second) | Operation rate without PrivateLink (ops/second) |
| --- | --- | --- |
| Mixed-small (3 Nodes) | 16608 | 16206 |
| Mixed-small (6 Nodes) | 33585 | 33598 |
| Mixed-small (9 Nodes) | 51792 | 51798 |

Across different cluster sizes, we observed no significant difference in operation throughput between PrivateLink and non-PrivateLink configurations.
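For readers who want to quantify "no significant difference", the relative gap can be computed directly from the table. The snippet below is a small helper written for this purpose; the figures are copied from the table, and the function name is just illustrative.

```python
# (with PrivateLink and Shotover, without PrivateLink) in ops/second,
# keyed by cluster size, copied from the throughput table.
THROUGHPUT = {
    3: (16608, 16206),
    6: (33585, 33598),
    9: (51792, 51798),
}


def relative_difference_pct(with_privatelink: int, without_privatelink: int) -> float:
    """Signed difference of the PrivateLink rate relative to the baseline, in percent."""
    return (with_privatelink - without_privatelink) / without_privatelink * 100.0


for nodes, (with_pl, without_pl) in THROUGHPUT.items():
    print(f"{nodes} nodes: {relative_difference_pct(with_pl, without_pl):+.2f}%")
```

In all three cluster sizes the gap stays within about 2.5% of the non-PrivateLink rate.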

Latency results

Latency benchmarks were conducted at ~70% of the observed peak throughput (as above) to simulate realistic production traffic.

| Operation | Ops/second | Setup | Mean Latency (ms) | Median Latency (ms) | P95 Latency (ms) | P99 Latency (ms) |
| --- | --- | --- | --- | --- | --- | --- |
| Mixed-small (3 Nodes) | 11630 | Non-PrivateLink | 9.90 | 3.2 | 53.7 | 119.4 |
| Mixed-small (3 Nodes) | 11630 | PrivateLink | 9.50 | 3.6 | 48.4 | 118.8 |
| Mixed-small (6 Nodes) | 23510 | Non-PrivateLink | 6 | 2.3 | 27.2 | 79.4 |
| Mixed-small (6 Nodes) | 23510 | PrivateLink | 9.10 | 3.4 | 45.4 | 104.9 |
| Mixed-small (9 Nodes) | 36255 | Non-PrivateLink | 5.5 | 2.4 | 21.8 | 67.6 |
| Mixed-small (9 Nodes) | 36255 | PrivateLink | 11.9 | 2.7 | 77.1 | 141.2 |

Results indicate that for lower to mid-tier throughput levels, AWS PrivateLink introduced minimal to negligible overhead. However, at higher operation rates, we observed increased latency, most notably at the p99 mark—likely due to network level factors or Shotover.

The increase in latency is expected as AWS PrivateLink introduces an additional hop to route traffic securely, which can impact latencies, particularly under heavy load. For the vast majority of applications, the observed latencies remain within acceptable ranges. However, for latency-sensitive workloads, we recommend adding more nodes (for high load cases) to help mitigate the impact of the additional network hop introduced by PrivateLink.

As with any generic benchmarking results, performance may vary depending on the specific data model, workload characteristics, and environment. The results presented here are based on a specific experimental setup using standard configurations and should primarily be used to compare the relative performance of PrivateLink vs. non-PrivateLink networking under similar conditions.

Why choose AWS PrivateLink with NetApp Instaclustr?

NetApp’s commitment to innovation means you benefit from cutting-edge technology combined with ease of use. With AWS PrivateLink support on our platform, customers gain:

- Enhanced security: All traffic stays private, never touching the internet.

- Simplified networking: No need to manage complex CIDR overlaps.

- Enterprise scalability: Handles sizable clusters effortlessly.

By addressing challenges, such as the NLB listener cap and private-to-VPC IP translation, we’ve created a solution that balances efficiency, security, and scalability.

Experience PrivateLink today

The integration of AWS PrivateLink with Apache Cassandra® is now generally available with production-ready SLAs for our customers. Log in to the Console to create a Cassandra cluster with support for AWS PrivateLink with just a few clicks today. Whether you’re managing sensitive workloads or demanding performance at scale, this feature delivers unmatched value.

Want to see it in action? Book a free demo today and experience the Shotover-powered magic of AWS PrivateLink firsthand.

Resources

- Getting started: Visit the documentation to learn how to create an AWS PrivateLink-enabled Apache Cassandra cluster on the Instaclustr Managed Platform.

- Connecting clients: Already created a Cassandra cluster with AWS PrivateLink? Click here to read about how to connect Cassandra clients in one VPC to an AWS PrivateLink-enabled Cassandra cluster on the Instaclustr Platform.

- General availability announcement: For more details, read our General Availability announcement on AWS PrivateLink support for Cassandra.

The post Integrating support for AWS PrivateLink with Apache Cassandra® on the NetApp Instaclustr Managed Platform appeared first on Instaclustr.

Compaction Strategies, Performance, and Their Impact on Cassandra Node Density

This is the third post in my series on optimizing Apache Cassandra for maximum cost efficiency through increased node density. In the first post, I examined how streaming operations impact node density and laid out the groundwork for understanding why higher node density leads to significant cost savings. In the second post, I discussed how compaction throughput is critical to node density and introduced the optimizations we implemented in CASSANDRA-15452 to improve throughput on disaggregated storage like EBS.

Cassandra Compaction Throughput Performance Explained

This is the second post in my series on improving node density and lowering costs with Apache Cassandra. In the previous post, I examined how streaming performance impacts node density and operational costs. In this post, I’ll focus on compaction throughput, and a recent optimization in Cassandra 5.0.4 that significantly improves it, CASSANDRA-15452.

This post assumes some familiarity with Apache Cassandra storage engine fundamentals. The documentation has a nice section covering the storage engine if you’d like to brush up before reading this post.

CEP-24 Behind the scenes: Developing Apache Cassandra®’s password validator and generator

Introduction: The need for an Apache Cassandra® password validator and generator

Here’s the problem: while users have always had the ability to create whatever password they wanted in Cassandra–from straightforward to incredibly complex and everything in between–this ultimately created a noticeable security vulnerability.

While organizations might have internal processes for generating secure passwords that adhere to their own security policies, Cassandra itself did not have the means to enforce these standards. To make the security vulnerability worse, if a password initially met internal security guidelines, users could later downgrade their password to a less secure option simply by using “ALTER ROLE” statements.

When internal password requirements are enforced for an individual, users face the additional burden of creating compliant passwords. This inevitably involves lots of trial-and-error in attempting to create a password that satisfies complex security rules.

But what if there was a way to have Cassandra automatically create passwords that meet all bespoke security requirements–but without requiring manual effort from users or system operators?

That’s why we developed CEP-24: Password validation/generation. We recognized that the complexity of secure password management could be significantly reduced (or eliminated entirely) with the right approach, improving both security and user experience at the same time.

The Goals of CEP-24

A Cassandra Enhancement Proposal (or CEP) is a structured process for proposing, creating, and ultimately implementing new features for the Cassandra project. All CEPs are thoroughly vetted among the Cassandra community before they are officially integrated into the project.

These were the key goals we established for CEP-24:

- Introduce a way to enforce password strength upon role creation or role alteration.

- Implement a reference implementation of a password validator which adheres to a recommended password strength policy, to be used for Cassandra users out of the box.

- Emit a warning (and proceed) or just reject “create role” and “alter role” statements when the provided password does not meet a certain security level, based on user configuration of Cassandra.

- Allow operators to implement a custom password validator with its own policy, whatever it might be, through a modular/pluggable mechanism.

- Provide a way for Cassandra to generate a password which would pass the subsequent validation for use by the user.

The Cassandra Password Validator and Generator builds upon an established framework in Cassandra called Guardrails, which was originally implemented under CEP-3 (more details here).

The password validator implements a custom guardrail introduced as part of CEP-24. A custom guardrail can validate and generate values of arbitrary types when properly implemented. In the CEP-24 context, the password guardrail provides CassandraPasswordValidator by extending ValueValidator, while passwords are generated by CassandraPasswordGenerator by extending ValueGenerator. Both components work with passwords as String type values.

Password validation and generation are configured in the cassandra.yaml file under the password_validator section. Let’s explore the key configuration properties available. First, the class_name and generator_class_name parameters specify which validator and generator classes will be used to validate and generate passwords respectively.

Cassandra ships CassandraPasswordValidator and CassandraPasswordGenerator out of the box. However, if a particular enterprise decides that they need something very custom, they are free to implement their own validator, put it on Cassandra’s class path and reference it in the configuration via the class_name parameter. The same applies to the generator, via the generator_class_name parameter.

CEP-24 provides implementations of the validator and generator that the Cassandra team believes will satisfy the requirements of most users. These default implementations address common password security needs. However, the framework is designed with flexibility in mind, allowing organizations to implement custom validation and generation rules that align with their specific security policies and business requirements.

    password_validator:
      # Implementation class of a validator. When not in form of FQCN, the
      # package name org.apache.cassandra.db.guardrails.validators is prepended.
      # By default, there is no validator.
      class_name: CassandraPasswordValidator
      # Implementation class of related generator which generates values which are valid when
      # tested against this validator. When not in form of FQCN, the
      # package name org.apache.cassandra.db.guardrails.generators is prepended.
      # By default, there is no generator.
      generator_class_name: CassandraPasswordGenerator

Password quality might be looked at as the number of characteristics a password satisfies. There are two levels for any password to be evaluated – warning level and failure level. Warning and failure levels nicely fit into how Guardrails act. Every guardrail has warning and failure thresholds. Based on what value a specific guardrail evaluates, it will either emit a warning to a user that its usage is discouraged (but ultimately allowed) or it will fail to be set altogether.

This same principle applies to password evaluation – each password is assessed against both warning and failure thresholds. These thresholds are determined by counting the characteristics present in the password. The system evaluates five key characteristics: the password’s overall length, the number of uppercase characters, the number of lowercase characters, the number of special characters, and the number of digits. A comprehensive password security policy can be enforced by configuring minimum requirements for each of these characteristics.

    # There are four characteristics:
    # upper-case, lower-case, special character and digit.
    # If this value is set e.g. to 3, a password has to
    # consist of 3 out of 4 characteristics.
    # For example, it has to contain at least 2 upper-case characters,
    # 2 lower-case, and 2 digits to pass,
    # but it does not have to contain any special characters.
    # If the number of characteristics found in the password is
    # less than or equal to this number, it will emit a warning.
    characteristic_warn: 3
    # If the number of characteristics found in the password is
    # less than or equal to this number, it will emit a failure.
    characteristic_fail: 2

Next, there are configuration parameters for each characteristic which count towards warning or failure:

    # If the password is shorter than this value,
    # the validator will emit a warning.
    length_warn: 12
    # If a password is shorter than this value,
    # the validator will emit a failure.
    length_fail: 8
    # If a password does not contain at least n
    # upper-case characters, the validator will emit a warning.
    upper_case_warn: 2
    # If a password does not contain at least
    # n upper-case characters, the validator will emit a failure.
    upper_case_fail: 1
    # If a password does not contain at least
    # n lower-case characters, the validator will emit a warning.
    lower_case_warn: 2
    # If a password does not contain at least
    # n lower-case characters, the validator will emit a failure.
    lower_case_fail: 1
    # If a password does not contain at least
    # n digits, the validator will emit a warning.
    digit_warn: 2
    # If a password does not contain at least
    # n digits, the validator will emit a failure.
    digit_fail: 1
    # If a password does not contain at least
    # n special characters, the validator will emit a warning.
    special_warn: 2
    # If a password does not contain at least
    # n special characters, the validator will emit a failure.
    special_fail: 1
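To see how the per-characteristic thresholds interact, the following sketch evaluates a password against the values shown in the configuration above. It is a simplified illustration, not Cassandra's CassandraPasswordValidator: the function names are made up, "special character" is assumed to mean ASCII punctuation, and the aggregate characteristic_warn and characteristic_fail settings are deliberately left out.

```python
import string
from typing import Dict, List

# Thresholds copied from the configuration fragment above.
THRESHOLDS = {
    "length": {"warn": 12, "fail": 8},
    "upper_case": {"warn": 2, "fail": 1},
    "lower_case": {"warn": 2, "fail": 1},
    "digit": {"warn": 2, "fail": 1},
    "special": {"warn": 2, "fail": 1},
}


def count_characteristics(password: str) -> Dict[str, int]:
    """Count each characteristic; 'special' is assumed to be ASCII punctuation."""
    return {
        "length": len(password),
        "upper_case": sum(c.isupper() for c in password),
        "lower_case": sum(c.islower() for c in password),
        "digit": sum(c.isdigit() for c in password),
        "special": sum(c in string.punctuation for c in password),
    }


def evaluate(password: str) -> Dict[str, List[str]]:
    """List the characteristics that fall below the fail or warn thresholds."""
    counts = count_characteristics(password)
    result: Dict[str, List[str]] = {"fail": [], "warn": []}
    for name, limits in THRESHOLDS.items():
        if counts[name] < limits["fail"]:
            result["fail"].append(name)
        elif counts[name] < limits["warn"]:
            result["warn"].append(name)
    return result


print(evaluate("T8aum3?"))
```

A characteristic below its fail threshold is reported only as a failure, since the failure already blocks the password.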

It is also possible to forbid illegal sequences of a certain length in a password:

# If a password contains illegal sequences that are at least this long, it is invalid.
# Illegal sequences might be either alphabetical (form 'abcde'),
# numerical (form '34567'), or US qwerty (form 'asdfg') as well
# as sequences from supported character sets.
# The minimum value for this property is 3,
# by default it is set to 5.
illegal_sequence_length: 5
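The idea behind the sequence check can be sketched in a few lines of Python. This is only an illustration: the list of sequences, the function name, and the choice to reject reversed runs as well are assumptions, and the real validator may check more (or different) sequences.

```python
# Illustrative sketch; the exact sequences Cassandra checks may differ.
SEQUENCES = [
    "abcdefghijklmnopqrstuvwxyz",            # alphabetical
    "0123456789",                            # numerical
    "qwertyuiop", "asdfghjkl", "zxcvbnm",    # US qwerty rows
]


def has_illegal_sequence(password, min_length=5):
    """Return True if any window of min_length characters is a run from SEQUENCES."""
    if min_length < 3:
        raise ValueError("min_length must be at least 3")
    lowered = password.lower()
    for start in range(len(lowered) - min_length + 1):
        window = lowered[start:start + min_length]
        for seq in SEQUENCES:
            # Assumption: reversed runs such as 'edcba' are rejected too.
            if window in seq or window in seq[::-1]:
                return True
    return False
```

A sliding window keeps the check simple: a password only needs to contain one run of the configured length anywhere inside it to be rejected.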

Lastly, it is also possible to configure a dictionary of passwords to check against. That way, we will be checking against password dictionary attacks. It is up to the operator of a cluster to configure the password dictionary:

# Dictionary to check the passwords against. Defaults to no dictionary.
# Whole dictionary is cached into memory. Use with caution with relatively big dictionaries.
# Entries in a dictionary, one per line, have to be sorted per String's compareTo contract.
dictionary: /path/to/dictionary/file
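A sorted dictionary makes fast lookups possible, for example with a binary search. The sketch below shows that idea in Python; whether Cassandra actually uses a binary search is an assumption here, and the word list is a made-up stand-in for a real dictionary file. Python compares strings by code point, which gives the same order as Java's compareTo for plain ASCII entries.

```python
import bisect


def in_dictionary(sorted_words, password):
    """Binary-search a sorted list of dictionary entries for the password."""
    index = bisect.bisect_left(sorted_words, password)
    return index < len(sorted_words) and sorted_words[index] == password


# Hypothetical in-memory dictionary; a real one would be loaded from the
# configured file, one entry per line, already sorted.
words = sorted(["cassandraisadatabase", "letmein", "password"])
```

Calling in_dictionary(words, candidate) then takes a number of comparisons that grows only logarithmically with the dictionary size.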

Now that we have gone over all the configuration parameters, let’s take a look at an example of how password validation and generation look in practice.

Consider a scenario where a Cassandra super-user (such as the default ‘cassandra’ role) attempts to create a new role named ‘alice’.

cassandra@cqlsh> CREATE ROLE alice WITH PASSWORD = 'cassandraisadatabase' AND LOGIN = true;
InvalidRequest: Error from server: code=2200 [Invalid query] message="Password was not set as it violated configured password strength policy. To fix this error, the following has to be resolved: Password contains the dictionary word 'cassandraisadatabase'. You may also use 'GENERATED PASSWORD' upon role creation or alteration."

This password is rejected because it appears in the configured dictionary. The operator's next attempt is not found in the dictionary, but it is not long enough:

cassandra@cqlsh> CREATE ROLE alice WITH PASSWORD = 'T8aum3?' AND LOGIN = true;
InvalidRequest: Error from server: code=2200 [Invalid query] message="Password was not set as it violated configured password strength policy. To fix this error, the following has to be resolved: Password must be 8 or more characters in length. You may also use 'GENERATED PASSWORD' upon role creation or alteration."

On the next attempt the password is finally set, but it is not completely secure. It satisfies the minimum requirements, yet the validator emits a warning because not all characteristics were met:

cassandra@cqlsh> CREATE ROLE alice WITH PASSWORD = 'mYAtt3mp' AND LOGIN = true;
Warnings:
Guardrail password violated: Password was set, however it might not be strong enough according to the configured password strength policy. To fix this warning, the following has to be resolved: Password must be 12 or more characters in length. Passwords must contain 2 or more digit characters. Password must contain 2 or more special characters. Password matches 2 of 4 character rules, but 4 are required. You may also use 'GENERATED PASSWORD' upon role creation or alteration.


The messages above also mention the 'GENERATED PASSWORD' clause, which generates a password automatically so that the operator does not need to invent one. As the attempts above show, coming up with a compliant password by hand is often cumbersome and is better left to the machine, which is also more efficient and reliable.

cassandra@cqlsh> ALTER ROLE alice WITH GENERATED PASSWORD;

 generated_password
--------------------
 R7tb33?.mcAX

The generated password shown above, like every generated password, automatically satisfies all the rules we have configured in cassandra.yaml. This is clearly an advantage over inventing passwords manually.
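One simple way to generate a password that is guaranteed to meet per-class minimums is to draw the required characters from each class first and then fill and shuffle. The Python sketch below illustrates that approach; it is not how Cassandra's generator is implemented, the SPECIALS set and function name are assumptions, and it does not check for illegal sequences or dictionary words.

```python
import secrets
import string

# Assumption: an arbitrary set of special characters for illustration.
SPECIALS = "!?.,;:-_#%&*+="


def generate_password(length=12, min_per_class=2):
    """Generate a password with at least min_per_class characters of each class."""
    classes = [string.ascii_uppercase, string.ascii_lowercase, string.digits, SPECIALS]
    if length < min_per_class * len(classes):
        raise ValueError("length too small for the per-class minimums")
    # Start with the required minimum from every class...
    chars = [secrets.choice(pool) for pool in classes for _ in range(min_per_class)]
    # ...then fill the remainder from the union of all classes.
    everything = "".join(classes)
    chars += [secrets.choice(everything) for _ in range(length - len(chars))]
    # Shuffle so the guaranteed characters are not in predictable positions.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)
```

The secrets module is used instead of random because the output is a credential and should come from a cryptographically strong source.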

When the CQL statement is executed, it will be visible in the CQLSH history (HISTORY command or in cqlsh_history file) but the password will not be logged, hence it cannot leak. It will also not appear in any auditing logs. Previously, Cassandra had to obfuscate such statements. This is not necessary anymore.

We can create a role with a generated password like this:

cassandra@cqlsh> CREATE ROLE alice WITH GENERATED PASSWORD AND LOGIN = true;

or by CREATE USER:

cassandra@cqlsh> CREATE USER alice WITH GENERATED PASSWORD;

Once a password has been generated for alice and passed on to her (how that is done is out of scope of this documentation), she can log in:

$ cqlsh -u alice -p R7tb33?.mcAX
...
alice@cqlsh>

Note: It is recommended to save the password in ~/.cassandra/credentials, for example:

[PlainTextAuthProvider]
username = cassandra
password = R7tb33?.mcAX

and to set auth_provider in ~/.cassandra/cqlshrc:

[auth_provider]
module = cassandra.auth
classname = PlainTextAuthProvider

It is also possible to configure password validators in such a way that a user does not see why a password failed. This is driven by a configuration property of password_validator called detailed_messages. When it is set to false, the violation messages will be very brief:

alice@cqlsh> ALTER ROLE alice WITH PASSWORD = 'myattempt';
InvalidRequest: Error from server: code=2200 [Invalid query] message="Password was not set as it violated configured password strength policy. You may also use 'GENERATED PASSWORD' upon role creation or alteration."

The following command will automatically generate a new password that meets all configured security requirements.

alice@cqlsh> ALTER ROLE alice WITH GENERATED PASSWORD;

Several potential enhancements to password generation and validation could be implemented in future releases. One promising extension would be validating new passwords against previous values. This would prevent users from reusing passwords until after they’ve created a specified number of different passwords. A related enhancement could include restricting how frequently users can change their passwords, preventing rapid cycling through passwords to circumvent history-based restrictions.

These features, while valuable for comprehensive password security, were considered beyond the scope of the initial implementation and may be addressed in future updates.

Final thoughts and next steps

The Cassandra Password Validator and Generator implemented under CEP-24 represents a significant improvement in Cassandra’s security posture.

By providing robust, configurable password policies with built-in enforcement mechanisms and convenient password generation capabilities, organizations can now ensure compliance with their security standards directly at the database level. This not only strengthens overall system security but also improves the user experience by eliminating guesswork around password requirements.

As Cassandra continues to evolve as an enterprise-ready database solution, these security enhancements demonstrate a commitment to meeting the demanding security requirements of modern applications while maintaining the flexibility that makes Cassandra so powerful.

Ready to experience CEP-24 yourself? Try it out on the Instaclustr Managed Platform and spin up your first Cassandra cluster for free.

CEP-24 is just our latest contribution to open source. Check out everything else we’re working on here.

The post CEP-24 Behind the scenes: Developing Apache Cassandra®’s password validator and generator appeared first on Instaclustr.

Introduction to similarity search: Part 2–Simplifying with Apache Cassandra® 5’s new vector data type

In Part 1 of this series, we explored how you can combine Cassandra 4 and OpenSearch to perform similarity searches with word embeddings. While that approach is powerful, it requires managing two different systems.

But with the release of Cassandra 5, things become much simpler.

Cassandra 5 introduces a native VECTOR data type and built-in Vector Search capabilities, simplifying the architecture by enabling Cassandra 5 to handle storage, indexing, and querying seamlessly within a single system.

Now in Part 2, we’ll dive into how Cassandra 5 streamlines the process of working with word embeddings for similarity search. We’ll walk through how the new vector data type works, how to store and query embeddings, and how the Storage-Attached Indexing (SAI) feature enhances your ability to efficiently search through large datasets.

The power of vector search in Cassandra 5

Vector search is a game-changing feature added in Cassandra 5 that enables you to perform similarity searches directly within the database. This is especially useful for AI applications, where embeddings are used to represent data like text or images as high-dimensional vectors. The goal of vector search is to find the closest matches to these vectors, which is critical for tasks like product recommendations or image recognition.

The key to this functionality lies in embeddings: arrays of floating-point numbers that represent objects in such a way that similar objects have vectors that lie close together. By storing these embeddings as vectors in Cassandra, you can use Vector Search to find connections in your data that may not be obvious through traditional queries.

How vectors work

Vectors are fixed-size sequences of non-null values, much like lists. However, in Cassandra 5, you cannot modify individual elements of a vector — you must replace the entire vector if you need to update it. This makes vectors ideal for storing embeddings, where you need to work with the whole data structure at once.

When working with embeddings, you’ll typically store them as vectors of floating-point numbers to represent the semantic meaning.

Storage-Attached Indexing (SAI): The engine behind vector search

Vector Search in Cassandra 5 is powered by Storage-Attached Indexing, which enables high-performance indexing and querying of vector data. SAI is essential for Vector Search, providing the ability to create column-level indexes on vector data types. This ensures that your vector queries are both fast and scalable, even with large datasets.

SAI isn’t just limited to vectors—it also indexes other types of data, making it a versatile tool for boosting the performance of your queries across the board.

Example: Performing similarity search with Cassandra 5’s vector data type

Now that we’ve introduced the new vector data type and the power of Vector Search in Cassandra 5, let’s dive into a practical example. In this section, we’ll show how to set up a table to store embeddings, insert data, and perform similarity searches directly within Cassandra.

Step 1: Setting up the embeddings table

To get started with this example, you’ll need access to a Cassandra 5 cluster. Cassandra 5 introduces native support for vector data types and Vector Search, available on Instaclustr’s managed platform. Once you have your cluster up and running, the first step is to create a table to store the embeddings. We’ll also create an index on the vector column to optimize similarity searches using SAI.

CREATE KEYSPACE aisearch WITH REPLICATION = {'class': 'SimpleStrategy', 'replication_factor': 1};

USE aisearch;

CREATE TABLE IF NOT EXISTS embeddings (
    id UUID,
    paragraph_uuid UUID,
    filename TEXT,
    embeddings vector<float, 300>,
    text TEXT,
    last_updated timestamp,
    PRIMARY KEY (id, paragraph_uuid)
);

CREATE INDEX IF NOT EXISTS ann_index
    ON embeddings(embeddings) USING 'sai';

This setup allows us to store the embeddings as 300-dimensional vectors, along with metadata like file names and text. The SAI index will be used to speed up similarity searches on the embeddings column.

You can also fine-tune the index by specifying the similarity function to be used for vector comparisons. Cassandra 5 supports three types of similarity functions: DOT_PRODUCT, COSINE, and EUCLIDEAN. By default, the similarity function is set to COSINE, but you can specify your preferred method when creating the index:

CREATE INDEX IF NOT EXISTS ann_index
    ON embeddings(embeddings) USING 'sai'
    WITH OPTIONS = { 'similarity_function': 'DOT_PRODUCT' };

Each similarity function has its own advantages depending on your use case. DOT_PRODUCT is often used when you need to measure the direction and magnitude of vectors, COSINE is ideal for comparing the angle between vectors, and EUCLIDEAN calculates the straight-line distance between vectors. By selecting the appropriate function, you can optimize your search results to better match the needs of your application.
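For intuition, the three measures can be written out in plain Python. These are the textbook definitions only; the scores Cassandra returns may be scaled or normalized differently, and the function names here are illustrative rather than part of any Cassandra API. The cosine function assumes neither vector is all zeros.

```python
import math


def dot_product(a, b):
    """Sum of element-wise products; sensitive to both direction and magnitude."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between a and b; ignores magnitude."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    return dot_product(a, b) / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between the points a and b."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
```

Two vectors that point in the same direction but differ in length, such as [1, 2] and [2, 4], have a cosine similarity of 1 yet a non-zero Euclidean distance, which is why the choice of function matters when embeddings are not normalized.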

Step 2: Inserting embeddings into Cassandra 5

To insert embeddings into Cassandra 5, we can use the same code from the first part of this series to extract text from files, load the FastText model, and generate the embeddings. Once the embeddings are generated, the following function will insert them into Cassandra:

import time
from uuid import uuid4, UUID
from cassandra.cluster import Cluster
from cassandra.query import SimpleStatement
from cassandra.policies import DCAwareRoundRobinPolicy
from cassandra.auth import PlainTextAuthProvider
from google.colab import userdata

# Connect to the cluster
cluster = Cluster(
    # Replace with your IP list
    ["xxx.xxx.xxx.xxx", "xxx.xxx.xxx.xxx", "xxx.xxx.xxx.xxx"],  # Cluster node addresses
    load_balancing_policy=DCAwareRoundRobinPolicy(local_dc='AWS_VPC_US_EAST_1'),  # Update the local data centre if needed
    port=9042,
    auth_provider=PlainTextAuthProvider(
        username='iccassandra',
        password='replace_with_your_password'
    )
)

session = cluster.connect()
print('Connected to cluster %s' % cluster.metadata.cluster_name)

def insert_embedding_to_cassandra(session, embedding, id=None, paragraph_uuid=None, filename=None, text=None, keyspace_name=None):
    try:
        embeddings = list(map(float, embedding))

        # Generate UUIDs if not provided
        if id is None:
            id = uuid4()
        if paragraph_uuid is None:
            paragraph_uuid = uuid4()

        # Ensure id and paragraph_uuid are UUID objects
        if isinstance(id, str):
            id = UUID(id)
        if isinstance(paragraph_uuid, str):
            paragraph_uuid = UUID(paragraph_uuid)

        # Create the query string with placeholders
        insert_query = f"""
        INSERT INTO {keyspace_name}.embeddings (id, paragraph_uuid, filename, embeddings, text, last_updated)
        VALUES (?, ?, ?, ?, ?, toTimestamp(now()))
        """

        # Create a prepared statement with the query
        prepared = session.prepare(insert_query)

        # Execute the query
        session.execute(prepared.bind((id, paragraph_uuid, filename, embeddings, text)))

        return None  # Successful insertion

    except Exception as e:
        error_message = f"Failed to execute query:\nError: {str(e)}"
        return error_message  # Return error message on failure

def insert_with_retry(session, embedding, id=None, paragraph_uuid=None,
                      filename=None, text=None, keyspace_name=None, max_retries=3,
                      retry_delay_seconds=1):
    retry_count = 0
    while retry_count < max_retries:
        result = insert_embedding_to_cassandra(session, embedding, id, paragraph_uuid, filename, text, keyspace_name)
        if result is None:
            return True  # Successful insertion
        else:
            retry_count += 1
            print(f"Insertion failed on attempt {retry_count} with error: {result}")
            if retry_count < max_retries:
                time.sleep(retry_delay_seconds)  # Delay before the next retry
    return False  # Failed after max_retries

# Replace the file path pointing to the desired file
file_path = "/path/to/Cassandra-Best-Practices.pdf"
keyspace_name = "aisearch"  # Keyspace created in Step 1

# extract_text_with_page_number_and_embeddings and text_to_vector come from Part 1
paragraphs_with_embeddings = extract_text_with_page_number_and_embeddings(file_path, text_to_vector)

from tqdm import tqdm

for paragraph in tqdm(paragraphs_with_embeddings, desc="Inserting paragraphs"):
    if not insert_with_retry(
        session=session,
        embedding=paragraph['embedding'],
        id=paragraph['uuid'],
        paragraph_uuid=paragraph['paragraph_uuid'],
        text=paragraph['text'],
        filename=paragraph['filename'],
        keyspace_name=keyspace_name,
        max_retries=3,
        retry_delay_seconds=1
    ):
        # Display an error message if insertion fails
        tqdm.write(f"Insertion failed after maximum retries for UUID {paragraph['uuid']}: {paragraph['text'][:50]}...")

This function handles inserting embeddings and metadata into Cassandra, ensuring that UUIDs are correctly generated for each entry.

Step 3: Performing similarity searches in Cassandra 5

Once the embeddings are stored, we can perform similarity searches directly within Cassandra using the following function:

import numpy as np

# ------------------ Embedding Functions ------------------

def text_to_vector(text):
    """Convert a text chunk into a vector using the FastText model."""
    words = text.split()
    vectors = [fasttext_model[word] for word in words if word in fasttext_model.key_to_index]
    return np.mean(vectors, axis=0) if vectors else np.zeros(fasttext_model.vector_size)

def find_similar_texts_cassandra(session, input_text, keyspace_name=None, top_k=5):
    # Convert the input text to an embedding
    input_embedding = text_to_vector(input_text)
    input_embedding_str = ', '.join(map(str, input_embedding.tolist()))

    # ANN query: the vector literal is inlined for ORDER BY ... ANN OF,
    # while the similarity score uses a bound parameter
    query = f"""
    SELECT text, filename, similarity_cosine(embeddings, ?) AS similarity
    FROM {keyspace_name}.embeddings
    ORDER BY embeddings ANN OF [{input_embedding_str}]
    LIMIT {top_k};
    """

    prepared = session.prepare(query)
    bound = prepared.bind((input_embedding,))
    rows = session.execute(bound)

    # Sort the results by similarity in Python
    similar_texts = sorted([(row.similarity, row.filename, row.text) for row in rows], key=lambda x: x[0], reverse=True)

    return similar_texts[:top_k]

from IPython.display import display, HTML

# The word you want to find similarities for

input_text = "place"

# Call the function to find similar texts in the Cassandra database

similar_texts = find_similar_texts_cassandra(session, input_text, keyspace_name="aisearch", top_k=10)

This function searches for similar embeddings in Cassandra and retrieves the top results based on cosine similarity. Under the hood, Cassandra’s vector search uses Hierarchical Navigable Small Worlds (HNSW). HNSW organizes data points in a multi-layer graph structure, making queries significantly faster by narrowing down the search space efficiently—particularly important when handling large datasets.

Step 4: Displaying the results

To display the results in a readable format, we can loop through the similar texts and present them along with their similarity scores:

# Print the similar texts along with their similarity scores
for similarity, filename, text in similar_texts:
    html_content = f"""
    <div style="margin-bottom: 10px;">
        <p><b>Similarity:</b> {similarity:.4f}</p>
        <p><b>Text:</b> {text}</p>
        <p><b>File:</b> {filename}</p>
    </div>
    <hr/>
    """
    display(HTML(html_content))

This code will display the top similar texts, along with their similarity scores and associated file names.

Cassandra 5 vs. Cassandra 4 + OpenSearch®

Cassandra 4 relies on an integration with OpenSearch to handle word embeddings and similarity searches. This approach works well for applications that are already using or comfortable with OpenSearch, but it does introduce additional complexity with the need to maintain two systems.

Cassandra 5, on the other hand, brings vector support directly into the database. With its native VECTOR data type and similarity search functions, it simplifies your architecture and improves performance, making it an ideal solution for applications that require embedding-based searches at scale.

| Feature | Cassandra 4 + OpenSearch | Cassandra 5 (Preview) |
|---|---|---|
| Embedding Storage | OpenSearch | Native VECTOR Data Type |
| Similarity Search | KNN Plugin in OpenSearch | COSINE, EUCLIDEAN, DOT_PRODUCT |
| Search Method | Exact K-Nearest Neighbor | Approximate Nearest Neighbor (ANN) |
| System Complexity | Requires two systems | All-in-one Cassandra solution |

Conclusion: A simpler path to similarity search with Cassandra 5

With Cassandra 5, the complexity of setting up and managing a separate search system for word embeddings is gone. The new vector data type and Vector Search capabilities allow you to perform similarity searches directly within Cassandra, simplifying your architecture and making it easier to build AI-powered applications.

Coming up: more in-depth examples and use cases that demonstrate how to take full advantage of these new features in Cassandra 5 in future blogs!

Ready to experience vector search with Cassandra 5? Spin up your first cluster for free on the Instaclustr Managed Platform and try it out!

The post Introduction to similarity search: Part 2–Simplifying with Apache Cassandra® 5’s new vector data type appeared first on Instaclustr.

Introduction to similarity search with word embeddings: Part 1–Apache Cassandra® 4.0 and OpenSearch®

Word embeddings have revolutionized how we approach tasks like natural language processing, search, and recommendation engines.

They allow us to convert words and phrases into numerical representations (vectors) that capture their meaning based on the context in which they appear. Word embeddings are especially useful for tasks where traditional keyword searches fall short, such as finding semantically similar documents or making recommendations based on textual data.

For example: a search for “Laptop” might return results related to “Notebook” or “MacBook” when using embeddings (as opposed to something like “Tablet”) offering a more intuitive and accurate search experience.

As applications increasingly rely on AI and machine learning to drive intelligent search and recommendation engines, the ability to efficiently handle word embeddings has become critical. That’s where databases like Apache Cassandra come into play—offering the scalability and performance needed to manage and query large amounts of vector data.

In Part 1 of this series, we’ll explore how you can leverage word embeddings for similarity searches using Cassandra 4 and OpenSearch. By combining Cassandra’s robust data storage capabilities with OpenSearch’s powerful search functions, you can build scalable and efficient systems that handle both metadata and word embeddings.

Cassandra 4 and OpenSearch: A partnership for embeddings

Cassandra 4 doesn’t natively support vector data types or specific similarity search functions, but that doesn’t mean you’re out of luck. By integrating Cassandra with OpenSearch, an open-source search and analytics platform, you can store word embeddings and perform similarity searches using the k-Nearest Neighbors (kNN) plugin.

This hybrid approach is advantageous over relying on OpenSearch alone because it allows you to leverage Cassandra’s strengths as a high-performance, scalable database for data storage while using OpenSearch for its robust indexing and search capabilities.

Instead of duplicating large volumes of data into OpenSearch solely for search purposes, you can keep the original data in Cassandra. OpenSearch, in this setup, acts as an intelligent pointer, indexing the embeddings stored in Cassandra and performing efficient searches without the need to manage the entire dataset directly.

This approach not only optimizes resource usage but also enhances system maintainability and scalability by segregating storage and search functionalities into specialized layers.

Deploying the environment

To set up your environment for word embeddings and similarity search, you can leverage the Instaclustr Managed Platform, which simplifies deploying and managing your Cassandra cluster and OpenSearch. Instaclustr takes care of the heavy lifting, allowing you to focus on building your application rather than managing infrastructure. In this configuration, Cassandra serves as your primary data store, while OpenSearch handles vector operations and similarity searches.

Here’s how to get started:

- Deploy a managed Cassandra cluster: Start by provisioning your Cassandra 4 cluster on the Instaclustr platform. This managed solution ensures your cluster is optimized, secure, and ready to store non-vector data.

- Set up OpenSearch with kNN plugin: Instaclustr also offers a fully managed OpenSearch service. You will need to deploy OpenSearch, with the kNN plugin enabled, which is critical for handling word embeddings and executing similarity searches.

By using Instaclustr, you gain access to a robust platform that seamlessly integrates Cassandra and OpenSearch, combining Cassandra’s scalable, fault-tolerant database with OpenSearch’s powerful search capabilities. This managed environment minimizes operational complexity, so you can focus on delivering fast and efficient similarity searches for your application.

Preparing the environment

Now that we’ve outlined the environment setup, let’s dive into the specific technical steps to prepare Cassandra and OpenSearch for storing and searching word embeddings.

Step 1: Setting up Cassandra

In Cassandra, we’ll need to create a table to store the metadata. Here’s how to do that:

- Create the table:

First create the keyspace, then create a table to store the metadata. This table will hold details such as the file name, the related text, and the time of the last update:

CREATE KEYSPACE IF NOT EXISTS aisearch WITH REPLICATION = {'class': 'SimpleStrategy', 'replication_factor': 3};

USE aisearch;

DROP TABLE IF EXISTS file_metadata;

CREATE TABLE IF NOT EXISTS file_metadata (
    id UUID,
    paragraph_uuid UUID,
    filename TEXT,
    text TEXT,
    last_updated timestamp,
    PRIMARY KEY (id, paragraph_uuid)
);

Step 2: Configuring OpenSearch

In OpenSearch, you’ll need to create an index that supports vector operations for similarity search. Here’s how you can configure it:

- Create the index:

Define the index settings and mappings, ensuring that vector operations are enabled and that the correct space type (e.g., L2) is used for similarity calculations.

{
  "settings": {
    "index": {
      "number_of_shards": 2,
      "knn": true,
      "knn.space_type": "l2"
    }
  },
  "mappings": {
    "properties": {
      "file_uuid": {
        "type": "keyword"
      },
      "paragraph_uuid": {
        "type": "keyword"
      },
      "embedding": {
        "type": "knn_vector",
        "dimension": 300
      }
    }
  }
}

This index configuration is optimized for storing and searching embeddings using the k-Nearest Neighbors algorithm, which is crucial for similarity search.

With these steps, your environment will be ready to handle word embeddings for similarity search using Cassandra and OpenSearch.

Generating embeddings with FastText

Once you have your environment set up, the next step is to generate the word embeddings that will drive your similarity search. For this, we’ll use FastText, a popular library from Facebook’s AI Research team that provides pre-trained word vectors. Specifically, we’re using the crawl-300d-2M model, which offers 300-dimensional vectors for millions of English words.

Step 1: Download and load the FastText model

To start, you’ll need to download the pre-trained model file. This can be done easily using Python and the requests library. Here’s the process:

1. Download the FastText model: The FastText model is stored in a zip file, which you can download from the official FastText website. The following Python script will handle the download and extraction:

import requests
import zipfile
import os

# Adjust file_url and local_filename variables accordingly
file_url = 'https://dl.fbaipublicfiles.com/fasttext/vectors-english/crawl-300d-2M.vec.zip'
local_filename = '/content/gdrive/MyDrive/0_notebook_files/model/crawl-300d-2M.vec.zip'
extract_dir = '/content/gdrive/MyDrive/0_notebook_files/model/'

def download_file(url, filename):
    with requests.get(url, stream=True) as r:
        r.raise_for_status()
        os.makedirs(os.path.dirname(filename), exist_ok=True)
        with open(filename, 'wb') as f:
            for chunk in r.iter_content(chunk_size=8192):
                f.write(chunk)

def unzip_file(filename, extract_to):
    with zipfile.ZipFile(filename, 'r') as zip_ref:
        zip_ref.extractall(extract_to)

# Download and extract
download_file(file_url, local_filename)
unzip_file(local_filename, extract_dir)

2. Load the model: Once the model is downloaded and extracted, you’ll load it using Gensim’s KeyedVectors class. This allows you to work with the embeddings directly:

from gensim.models import KeyedVectors

# Adjust model_path variable accordingly
model_path = "/content/gdrive/MyDrive/0_notebook_files/model/crawl-300d-2M.vec"
fasttext_model = KeyedVectors.load_word2vec_format(model_path, binary=False)

Step 2: Generate embeddings from text

With the FastText model loaded, the next task is to convert text into vectors. This process involves splitting the text into words, looking up the vector for each word in the FastText model, and then averaging the vectors to get a single embedding for the text.

Here’s a function that handles the conversion:

import numpy as np
import re

def text_to_vector(text):
    """Convert text into a vector using the FastText model."""
    text = text.lower()
    words = re.findall(r'\b\w+\b', text)
    vectors = [fasttext_model[word] for word in words if word in fasttext_model.key_to_index]
    if not vectors:
        print(f"No embeddings found for text: {text}")
        return np.zeros(fasttext_model.vector_size)
    return np.mean(vectors, axis=0)

This function tokenizes the input text, retrieves the corresponding word vectors from the model, and computes the average to create a final embedding.

Step 3: Extract text and generate embeddings from documents

In real-world applications, your text might come from various types of documents, such as PDFs, Word files, or presentations. The following code shows how to extract text from different file formats and convert that text into embeddings:

import uuid
import mimetypes
import pandas as pd
from pdfminer.high_level import extract_pages
from pdfminer.layout import LTTextContainer
from docx import Document
from pptx import Presentation

def generate_deterministic_uuid(name):
    return uuid.uuid5(uuid.NAMESPACE_DNS, name)

def generate_random_uuid():
    return uuid.uuid4()

def get_file_type(file_path):
    # Guess the MIME type based on the file extension
    mime_type, _ = mimetypes.guess_type(file_path)
    return mime_type

def extract_text_from_excel(excel_path):
    xls = pd.ExcelFile(excel_path)
    text_list = []
    for sheet_index, sheet_name in enumerate(xls.sheet_names):
        df = xls.parse(sheet_name)
        for row in df.iterrows():
            text_list.append((" ".join(map(str, row[1].values)), sheet_index + 1))  # +1 to make it 1 based index
    return text_list

def extract_text_from_pdf(pdf_path):
    return [(text_line.get_text().strip().replace('\xa0', ' '), page_num)
            for page_num, page_layout in enumerate(extract_pages(pdf_path), start=1)
            for element in page_layout if isinstance(element, LTTextContainer)
            for text_line in element if text_line.get_text().strip()]

def extract_text_from_word(file_path):
    doc = Document(file_path)
    return [(para.text, (i == 0) + 1) for i, para in enumerate(doc.paragraphs) if para.text.strip()]

def extract_text_from_txt(file_path):
    with open(file_path, 'r') as file:
        return [(line.strip(), 1) for line in file.readlines() if line.strip()]

def extract_text_from_pptx(pptx_path):
    prs = Presentation(pptx_path)
    return [(shape.text.strip(), slide_num) for slide_num, slide in enumerate(prs.slides, start=1)
            for shape in slide.shapes if hasattr(shape, "text") and shape.text.strip()]

def extract_text_with_page_number_and_embeddings(file_path, embedding_function):
    file_uuid = generate_deterministic_uuid(file_path)
    file_type = get_file_type(file_path)
    # Map each MIME type to the extractor that handles it
    extractors = {
        'text/plain': extract_text_from_txt,
        'application/pdf': extract_text_from_pdf,
        'application/vnd.openxmlformats-officedocument.wordprocessingml.document': extract_text_from_word,
        'application/vnd.openxmlformats-officedocument.presentationml.presentation': extract_text_from_pptx,
        'application/zip': lambda path: extract_text_from_pptx(path) if path.endswith('.pptx') else [],
        'application/vnd.openxmlformats-officedocument.spreadsheetml.sheet': extract_text_from_excel,
        'application/vnd.ms-excel': extract_text_from_excel
    }
    # Unknown file types fall back to an empty list
    text_list = extractors.get(file_type, lambda _: [])(file_path)
    return [
        {
            "uuid": file_uuid,
            "paragraph_uuid": generate_random_uuid(),
            "filename": file_path,
            "text": text,
            "page_num": page_num,
            "embedding": embedding
        }
        for text, page_num in text_list
        if (embedding := embedding_function(text)).any()  # Check if the embedding is not all zeros
    ]

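# Replace the file path with the one you want to process
file_path = "../../docs-manager/Cassandra-Best-Practices.pdf"
# The embedding function is a required argument; text_to_vector is the same helper used for queries later on
paragraphs_with_embeddings = extract_text_with_page_number_and_embeddings(file_path, text_to_vector)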

This code handles extracting text from different document types, generating embeddings for each text chunk, and associating them with unique IDs.

With FastText set up and embeddings generated, you’re now ready to store these vectors in OpenSearch and start performing similarity searches.

Performing similarity searches

To conduct similarity searches, we utilize the k-Nearest Neighbors (kNN) plugin within OpenSearch. This plugin allows us to efficiently search for the most similar embeddings stored in the system. Essentially, you’re querying OpenSearch to find the closest matches to a word or phrase based on your embeddings.

For example, if you’ve embedded product descriptions, using kNN search helps you locate products that are semantically similar to a given input. This capability can significantly enhance your application’s recommendation engine, categorization, or clustering.
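To make the idea of "closest matches" concrete, here is a minimal sketch of a nearest-neighbor lookup done by brute force in plain Python. It is not how the OpenSearch plugin works internally: the plugin relies on index structures to avoid scanning every vector, and the distance metric depends on how the index is configured. The cosine similarity metric, the function names, and the three-dimensional product vectors below are all assumptions chosen for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def brute_force_knn(query, vectors, k=2):
    """Return the k (key, score) pairs most similar to the query, best first."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical 3-dimensional "embeddings" for product descriptions
product_vectors = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "coffee grinder": [0.0, 0.1, 0.9],
}

# The query vector points in roughly the same direction as the two footwear items
nearest = brute_force_knn([1.0, 0.0, 0.0], product_vectors, k=2)
for name, score in nearest:
    print(f"{name}: {score:.3f}")
```

Scanning every vector like this is fine for a handful of items, but it becomes slow as the collection grows. That is why a dedicated kNN index is used in practice.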

This setup with Cassandra and OpenSearch is a powerful combination, but it’s important to remember that it requires managing two systems. As Cassandra evolves, the introduction of built-in vector support in Cassandra 5 simplifies this architecture. But for now, let’s focus on leveraging both systems to get the most out of similarity searches.

Example: Inserting metadata in Cassandra and embeddings in OpenSearch

In this example, we use Cassandra 4 to store metadata related to files and paragraphs, while OpenSearch handles the actual word embeddings. By storing the paragraph and file IDs in both systems, we can link the metadata in Cassandra with the embeddings in OpenSearch.
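The linking idea can be sketched without either database. In the snippet below, two plain dictionaries stand in for the Cassandra table and the OpenSearch index. Both are keyed by the same (file UUID, paragraph UUID) pair, so a hit in one can be resolved in the other. The store names and helper functions are hypothetical and exist only for this illustration.

```python
import uuid

# Hypothetical in-memory stand-ins for the two systems
metadata_store = {}   # plays the role of the Cassandra table
embedding_store = {}  # plays the role of the OpenSearch index

def store_paragraph(file_uuid, paragraph_uuid, filename, text, embedding):
    """Write metadata and embedding under the same composite key."""
    key = (str(file_uuid), str(paragraph_uuid))
    metadata_store[key] = {"filename": filename, "text": text}
    embedding_store[key] = embedding
    return key

def lookup_metadata(key):
    """Resolve a key found on the embedding side back to its metadata."""
    return metadata_store.get(key)

file_uuid = uuid.uuid4()
key = store_paragraph(file_uuid, uuid.uuid4(), "example.pdf",
                      "Use prepared statements.", [0.1, 0.2])
print(lookup_metadata(key))
```

Because both writes share one key, a failure on either side leaves an orphaned record. The insertion code that follows guards against one direction of this by skipping the OpenSearch write when the Cassandra write fails.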

We first need to store metadata such as the file name, paragraph UUID, and other relevant details in Cassandra. This metadata is what links the data in Cassandra, the embeddings in OpenSearch, and the file itself on the filesystem.

The following code demonstrates how to insert this metadata into Cassandra and the embeddings into OpenSearch. Make sure to run the previous script first, so that the “paragraphs_with_embeddings” variable is populated:

from tqdm import tqdm

# Function to insert data into both Cassandra and OpenSearch
def insert_paragraph_data(session, os_client, paragraph, keyspace_name, index_name):
    # Insert into Cassandra
    cassandra_result = insert_with_retry(
        session=session,
        id=paragraph['uuid'],
        paragraph_uuid=paragraph['paragraph_uuid'],
        text=paragraph['text'],
        filename=paragraph['filename'],
        keyspace_name=keyspace_name,
        max_retries=3,
        retry_delay_seconds=1
    )
    if not cassandra_result:
        return False  # Stop further processing if Cassandra insertion fails

    # Insert into OpenSearch
    opensearch_result = insert_embedding_to_opensearch(
        os_client=os_client,
        index_name=index_name,
        file_uuid=paragraph['uuid'],
        paragraph_uuid=paragraph['paragraph_uuid'],
        embedding=paragraph['embedding']
    )
    if opensearch_result is not None:
        return False  # Return False if OpenSearch insertion fails

    return True  # Return True on success for both

# Process each paragraph with a progress bar
print("Starting batch insertion of paragraphs.")
for paragraph in tqdm(paragraphs_with_embeddings, desc="Inserting paragraphs"):
    if not insert_paragraph_data(
        session=session,
        os_client=os_client,
        paragraph=paragraph,
        keyspace_name=keyspace_name,
        index_name=index_name
    ):
        print(f"Insertion failed for UUID {paragraph['uuid']}: {paragraph['text'][:50]}...")
print("Batch insertion completed.")

Performing similarity search

Now that we’ve stored both metadata in Cassandra and embeddings in OpenSearch, it’s time to perform a similarity search. This step involves searching OpenSearch for embeddings that closely match a given input and then retrieving the corresponding metadata from Cassandra.

The process is straightforward: we start by converting the input text into an embedding, then use the k-Nearest Neighbors (kNN) plugin in OpenSearch to find the most similar embeddings. Once we have the results, we fetch the related metadata from Cassandra, such as the original text and file name.

Here’s how it works:

- Convert text to embedding: Start by converting your input text into an embedding vector using the FastText model. This vector will serve as the query for our similarity search.

- Search OpenSearch for similar embeddings: Using the kNN search capability in OpenSearch, we find the top k most similar embeddings. Each result includes the corresponding file and paragraph UUIDs, which help us link the results back to Cassandra.

- Fetch metadata from Cassandra: With the UUIDs retrieved from OpenSearch, we query Cassandra to get the metadata, such as the original text and file name, associated with each embedding.

The following code demonstrates this process:

import uuid
from IPython.display import display, HTML

def find_similar_embeddings_opensearch(os_client, index_name, input_embedding, top_k=5):
    """Search for similar embeddings in OpenSearch and return the associated UUIDs."""
    query = {
        "size": top_k,
        "query": {
            "knn": {
                "embedding": {
                    "vector": input_embedding.tolist(),
                    "k": top_k
                }
            }
        }
    }
    response = os_client.search(index=index_name, body=query)
    similar_uuids = []
    for hit in response['hits']['hits']:
        file_uuid = hit['_source']['file_uuid']
        paragraph_uuid = hit['_source']['paragraph_uuid']
        similar_uuids.append((file_uuid, paragraph_uuid))
    return similar_uuids

def fetch_metadata_from_cassandra(session, file_uuid, paragraph_uuid, keyspace_name):
    """Fetch the metadata (text and filename) from Cassandra based on UUIDs."""
    file_uuid = uuid.UUID(file_uuid)
    paragraph_uuid = uuid.UUID(paragraph_uuid)
    query = f"""
    SELECT text, filename
    FROM {keyspace_name}.file_metadata
    WHERE id = ? AND paragraph_uuid = ?;
    """
    prepared = session.prepare(query)
    bound = prepared.bind((file_uuid, paragraph_uuid))
    rows = session.execute(bound)
    # Return the first matching row, if any
    for row in rows:
        return row.filename, row.text
    return None, None

# Input text to find similar embeddings
input_text = "place"

# Convert input text to embedding
input_embedding = text_to_vector(input_text)

# Find similar embeddings in OpenSearch
similar_uuids = find_similar_embeddings_opensearch(os_client, index_name=index_name, input_embedding=input_embedding, top_k=10)

# Fetch and display metadata from Cassandra based on the UUIDs found in OpenSearch
for file_uuid, paragraph_uuid in similar_uuids:
    filename, text = fetch_metadata_from_cassandra(session, file_uuid, paragraph_uuid, keyspace_name)
    if filename and text:
        html_content = f"""
        <div style="margin-bottom: 10px;">
        <p><b>File UUID:</b> {file_uuid}</p>
        <p><b>Paragraph UUID:</b> {paragraph_uuid}</p>
        <p><b>Text:</b> {text}</p>
        <p><b>File:</b> {filename}</p>
        </div>
        <hr/>
        """
        display(HTML(html_content))

This code demonstrates how to find similar embeddings in OpenSearch and retrieve the corresponding metadata from Cassandra. By linking the two systems via the UUIDs, you can build powerful search and recommendation systems that combine metadata storage with advanced embedding-based searches.

Conclusion and next steps: A powerful combination of Cassandra 4 and OpenSearch

By leveraging the strengths of Cassandra 4 and OpenSearch, you can build a system that handles both metadata storage and similarity search. Cassandra efficiently stores your file and paragraph metadata, while OpenSearch takes care of embedding-based searches using the k-Nearest Neighbors algorithm. Together, these two technologies enable powerful, large-scale applications for text search, recommendation engines, and more.

Coming up in Part 2, we’ll explore how Cassandra 5 simplifies this architecture with built-in vector support and native similarity search capabilities.

Ready to try vector search with Cassandra and OpenSearch? Spin up your first cluster for free on the Instaclustr Managed Platform and explore the incredible power of vector search.

The post Introduction to similarity search with word embeddings: Part 1–Apache Cassandra® 4.0 and OpenSearch® appeared first on Instaclustr.

How Cassandra Streaming, Performance, Node Density, and Cost are All Related

This is the first of several posts I have planned on optimizing Apache Cassandra for maximum cost efficiency. I’ve spent over a decade working with Cassandra and tens of thousands of hours data modeling, fixing issues, writing tools for it, and analyzing its performance. I’ve always been fascinated by database performance tuning, even before Cassandra.

A decade ago I filed one of my first issues with the project, where I laid out my target goal of 20TB of data per node. This wasn’t possible for most workloads at the time, but I’ve kept this target in my sights.

IBM acquires DataStax: What that means for customers–and why Instaclustr is a smart alternative

IBM’s recent acquisition of DataStax has certainly made waves in the tech industry. With IBM’s expanding influence in data solutions and DataStax’s reputation for advancing Apache Cassandra® technology, this acquisition could signal a shift in the database management landscape.

For businesses currently using DataStax, this news might have sparked questions about what the future holds. How does this acquisition impact your systems, your data, and, most importantly, your goals?

While the acquisition opens up prospects for integrating IBM’s cloud capabilities with high-performance NoSQL solutions, there’s uncertainty too. Transition periods for acquisitions often involve changes in product development priorities, pricing structures, and support strategies.

However, one thing is certain: customers want reliable, scalable, and transparent solutions. If you’re re-evaluating your options amid these changes, here’s why NetApp Instaclustr offers an excellent path forward.

Decoding the IBM-DataStax link-up

DataStax is a provider of enterprise solutions for Apache Cassandra, a powerful NoSQL database trusted for its ability to handle massive amounts of distributed data. IBM’s acquisition reflects its growing commitment to strengthening data management and expanding its footprint in the open source ecosystem.

While the acquisition promises an infusion of IBM’s resources and reach, IBM’s strategy often leans into long-term integration into its own cloud services and platforms. This could potentially reshape DataStax’s roadmap to align with IBM’s broader cloud-first objectives. Customers who don’t rely solely on IBM’s ecosystem—or want flexibility in their database management—might feel caught in a transitional limbo.

This is where Instaclustr comes into the picture as a strong, reliable alternative solution.

Why consider Instaclustr?

Instaclustr is purpose-built to empower businesses with a robust, open source data stack. For businesses relying on Cassandra or DataStax, Instaclustr delivers an alternative that’s stable, high-performing, and highly transparent.

Here’s why Instaclustr could be your best option moving forward:

1. 100% open source commitment

We’re firm believers in the power of open source technology. We offer pure Apache Cassandra, keeping it true to its roots without the proprietary lock-ins or hidden limitations. Unlike proprietary solutions, a commitment to pure open source ensures flexibility, freedom, and no vendor lock-in. You maintain full ownership and control.

2. Platform agnostic

One of the things that sets our solution apart is our platform-agnostic approach. Whether you’re running your workloads on AWS, Google Cloud, Azure, or on-premises environments, we make it seamless for you to deploy, manage, and scale Cassandra. This differentiates us from vendors tied deeply to specific clouds—like IBM.