ScyllaDB X Cloud: An Inside Look with Avi Kivity (Part 1)

ScyllaDB’s co-founder/CTO on the motivations and architectural shifts behind ScyllaDB X Cloud, focusing on Raft and tablets-based data distribution

If you follow ScyllaDB, you’ve probably heard us talking about Raft and tablets-based data distribution for a few years now. The ultimate goal of these projects (plus a few related ones) was to optimize elasticity and price performance – especially for dynamic and storage-bound workloads. And we finally hit a nice milestone along that journey: the release of ScyllaDB X Cloud. You can read about the release in our earlier blog post.

Here, we wanted to share the engineering perspective on these architectural shifts. Tim Koopmans recently sat down with Avi Kivity – ScyllaDB Co-Founder and CTO – to chat about the underlying motivation and design decisions. You can watch the complete video here. But if you prefer to read, we’re writing up the highlights. This is the first blog post in a three-part series.

Why ScyllaDB X Cloud? For scaling large clusters

Tim: Let’s start with the big picture. What really motivated the architectural evolution behind what we know as ScyllaDB X Cloud? Was this change inevitable? How did it come about?

Avi: It came from our experience managing clusters for our customers. With the existing architecture, things like scaling up the cluster in preparation for events like Black Friday could take a long time. Since ScyllaDB can manage very large nodes (e.g., nodes with 30TB of data), moving that data onto new nodes could take a long time, sometimes a day. Also, nodes had to be added one at a time. If you had a large cluster, scaling the cluster would be a nail-biting experience. So we decided to improve that experience and, along the way, we improved many parts of the architecture.

Tim: Do you have any numbers around what it used to be like to scale a large cluster?

Avi: One of our large clusters has 75 nodes, each of which has around 60TB. It’s a pretty hefty cluster.
It’s nice watching clusters like that on our dashboards and seeing compactions at tens of gigabytes per second aggregate across the cluster. Those clusters are churning through large amounts of data per second and carrying a huge amount of data. Now, we can scale this amount of data in minutes, maybe an hour for the most extreme cases. So it’s quite a huge change.

Why ScyllaDB addressed scaling with Tablets & Raft

Tim: When you think about dynamic or storage-bound workloads today, what are other databases getting wrong in this space? How did that lead you to this new approach, with tablets?

Avi: “Other databases” is a huge area – there are hundreds of databases. Let’s talk about our heritage. We came from the Cassandra model. And the basic problem there was the static distribution of data. The node layout determines how data is distributed, and as long as you don’t add or remove nodes, it remains static. That means you have no flexibility. Also, the focus on availability over consistency led to no central point for managing the topology. Without a coordinating authority, you could make only one change at a time.

One of the first changes that we made was to add a coordinating authority in the form of Raft. Before, we managed topology with Gossip, which really puts cluster management responsibility on the operator. We moved it to a Raft group to centralize the management.

You’ve probably heard the old proverb that any problem in computer science can be solved with another layer of indirection. We did that with tablets, more or less. We inserted a layer of indirection so that instead of having a static distribution of data to nodes, it goes through a translation table. Each range of rows is mapped to a different node in a tablets table. By manipulating the tablets table, we can redirect small packages of data (specifically, 5GB – that’s pretty small for us). We can redirect data at a granularity of 5GB to any node and any CPU on any node.
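
To make the "translation table" idea concrete, here is a minimal Go sketch of a hypothetical tablet map. It is not ScyllaDB's implementation: the type and function names, the int64 token space, and the single replica per tablet (real deployments keep several replicas) are all assumptions for illustration. Lookup finds the owning tablet by binary search over sorted range end tokens, and Move shows why rebalancing can be cheap: reassigning one tablet changes one table entry, not the whole layout. The split/merge rule uses the thresholds Avi gives below (split at 10GB, merge at 2.5GB, target 5GB).

```go
package main

import (
	"fmt"
	"sort"
)

// Replica identifies where a tablet lives: a node and a CPU shard on it.
type Replica struct {
	Node  string
	Shard int
}

// Tablet owns the token range (previous tablet's LastToken, LastToken].
type Tablet struct {
	LastToken int64
	Replica   Replica
	SizeGB    float64
}

// TabletMap is a hypothetical translation table; tablets are kept sorted
// by LastToken so lookups can use binary search.
type TabletMap struct {
	Tablets []Tablet
}

// Lookup returns the replica that owns the given token.
func (m *TabletMap) Lookup(token int64) (Replica, error) {
	i := sort.Search(len(m.Tablets), func(i int) bool {
		return m.Tablets[i].LastToken >= token
	})
	if i == len(m.Tablets) {
		return Replica{}, fmt.Errorf("token %d is past the last tablet", token)
	}
	return m.Tablets[i].Replica, nil
}

// Move reassigns a single tablet to another node/shard. No other entry
// changes, which is what makes fine-grained rebalancing cheap.
func (m *TabletMap) Move(index int, to Replica) {
	m.Tablets[index].Replica = to
}

// Size thresholds taken from the interview (illustrative constants).
const (
	splitAtGB = 10.0 // split into two ~5GB tablets
	mergeAtGB = 2.5  // merge with a neighbor into one ~5GB tablet
)

// Action reports what a balancer would do with a tablet of the given size.
func Action(sizeGB float64) string {
	switch {
	case sizeGB >= splitAtGB:
		return "split"
	case sizeGB <= mergeAtGB:
		return "merge"
	default:
		return "keep"
	}
}
```

With a two-tablet map, looking up token 42 returns the replica of the tablet whose range contains it; after Move, the same token resolves to the new node/shard while every other entry stays untouched.
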
We can move those packages around at will, and those packages are moved at the line rate, so it’s no problem to fire them away at gigabits per second across the cluster. And that gives us the ability to rebalance data on a cluster or add and remove nodes very quickly.

Tim: So tablets are really a new ScyllaDB abstraction? Is it an abstraction that breaks those tables into independently managed units? And I think you said the size is 5GB – is that configurable?

Avi: It’s configurable, but I don’t recommend playing with it. Normally, you stay between 2.5GB and 10GB. When a tablet reaches 10GB, it triggers a split, which brings it back to 5GB: each tablet is split into two. If it goes down to 2.5GB, it triggers a merge, combining two tablets into one larger tablet – again, bringing it back to 5GB.

Tim: So ensuring that things can be dynamically split… We can move data around, rebalance across the cluster… That gives us finer-grained load distribution as well as better scalability, and perhaps a bit of separation between compute and storage, right? Because we’re not necessarily tied to the size of the compute node anymore. We can have different instance types in a cluster now, as an indirect result of this change.

The tipping point

Tim: Avi, you said that re-architecting around tablets has been a huge shift. So what was the tipping point? Was it just that vNodes didn’t work anymore in terms of how you organize data? What was your aha moment where you said, “Yeah, I think we need to do something different here”?

Avi: It was a combination of things, and since this was such a major change, we needed a lot of motivation to do it. One part of it was the inability to perform topology changes that involve more than one node at a time. Another part was that the previous streaming mechanism was very slow. Yet another part is that, because the streaming mechanism was so slow, we had to scale well in advance of exhausting the storage on the node.
That required us to leave a lot of free space on the node, and that’s wasteful. We took all of this into consideration, and that was enough motivation for us to take on a multi-year change. I think it was well worth it.

Tim: Multi-year… So how long ago did you start workshopping different ideas to solve this?

Avi: The first phase was changing topology to be strongly consistent and having a central authority to coordinate it. I think it took around a couple of years to switch to Raft topology. Before that, we switched schema management to use Raft as well. That was a separate problem, but since those two problems had the same solution, we jumped on it. We’re still not completely done. There are still a few features that are not yet fully compatible with tablets – but we see the light at the end of the tunnel now.

[Stay tuned for parts 2 and 3]

Be Part of Something Big – Speak at Monster Scale Summit

Share your “extreme scale engineering” expertise with ~20K like-minded engineers

Whether you’re designing, implementing, or optimizing systems that are pushed to their limits, we’d love to hear about your most impressive achievements and lessons learned – at Monster Scale Summit 2026.

What’s Monster Scale Summit?

Monster Scale Summit is a technical conference that connects the community of people working on performance-sensitive, data-intensive applications. Engineers, architects, and SREs from gamechangers around the globe will be gathering virtually to explore “monster scale” challenges with respect to extreme levels of throughput, data, and global distribution.

It’s a lot like P99 CONF (also hosted by ScyllaDB) – a two-day event that’s free, fully virtual, and highly interactive. The core difference is that it’s focused on extreme scale engineering vs. all things performance. Last time, we hosted industry giants like Kelsey Hightower, Martin Kleppmann, Discord, Slack, Canva…

Details please!

When: March 11 + 12

Where: Wherever you’d like! It’s intentionally virtual, so you can present and interact with attendees from anywhere around the world.

Topics: Core topics include distributed databases, streaming and real-time processing, intriguing system designs, methods for balancing latency/concurrency/throughput, SRE techniques proven at scale, and infrastructure built for unprecedented demands.

What we’re looking for: We welcome a broad range of talks about tackling the challenges that arise in the most massive, demanding environments. The conference prioritizes technical talks sharing first-hand experiences. Sessions are just 18-20 minutes – so consider this your TED Talk debut!

Beyond Apache Cassandra

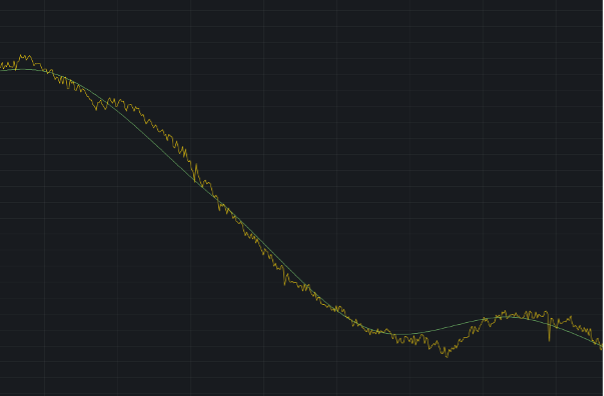

ScyllaDB is no longer “just” a faster Cassandra.

In 2008, Apache Cassandra set a new standard for database scalability. Born to support Facebook’s Inbox Search, it has since been adopted by tech giants like Uber, Netflix, and Apple – where it’s run by experts who also serve as Cassandra contributors (alongside DataStax/IBM). And as its adoption scaled, Cassandra remained true to its core mission of scaling on commodity hardware with high availability.

But what about performance? Simplicity? Efficiency? Elasticity?

In 2015, ScyllaDB was born to go beyond Cassandra’s suboptimal resource utilization. Fresh from creating KVM and hacking the Linux kernel, the founders believed that their low-level engineering approach could squeeze considerably more power from the underlying infrastructure. The timing was ideal: just a year earlier, Netflix had published their numbers showing how to push Apache Cassandra to 1 million write RPS. This was an impressive feat, but one that required significant infrastructure investments and tuning efforts.

The idea was quite simple (in theory, at least): take Apache Cassandra’s scalable architecture and reimplement it close to the metal while keeping wire protocol compatibility. Not relying on Java meant less latency variability (plus no stop-the-world pauses), while a unique shard-per-core architecture maximized servers’ throughput even under heavy system load. To prevent contention, everything was made asynchronous, and all these optimizations were paired with autonomous internal schedulers for minimal operational overhead.

That was 10 years ago. While I can’t speak to Cassandra’s current direction, ScyllaDB has evolved quite significantly since then – shifting from “just” a faster Cassandra alternative to a database with its own identity and unique feature set.

Spoiler: In this video, I walk you through some key differences between ScyllaDB and Apache Cassandra.
I discuss the differences in performance, elasticity, and capabilities such as workload prioritization. You can see how ScyllaDB maps data per CPU core, scales in parallel, and de-risks topology changes, allowing it to handle millions of OPS with predictable low latencies (and without constant tuning and babysitting).

ScyllaDB’s Evolution

The first generation of ScyllaDB was all about raw performance. That’s when we introduced the shard-per-core asynchronous architecture, row-based cache, and advanced schedulers that achieve predictable low latencies.

ScyllaDB’s second generation aimed for feature parity with Cassandra, but we actually went beyond that. For example, we introduced our materialized views and production-ready global secondary indexes (something that Cassandra still flags as experimental). ScyllaDB also introduced support for local secondary indexes in that same year; those only arrived in Cassandra 5 (after at least three different indexing implementations). Moreover, our Paxos implementation for lightweight transactions eliminated much of the overhead and limitations in Cassandra’s alternative implementation.

The third generation marked our shift to the cloud, along with continued innovation. This is when ScyllaDB Alternator, our DynamoDB-compatible API, was introduced. We added support for ZSTD compression in 2020 (Cassandra only adopted it late in 2021). During this period, we dramatically improved repair speeds with row-level repair and introduced workload prioritization (more on this in the next section).

The fourth generation of ScyllaDB emerged around the time AWS announced their i3en instance family, with high-density nodes holding up to 60TB of data (something Cassandra still struggles to handle effectively). During this period, we introduced the Incremental Compaction Strategy (ICS), allowing users to utilize up to 70% of their storage before scaling out.
This later evolved into a hybrid compaction strategy (and we now support 90% storage utilization). We also introduced Change Data Capture (CDC) with a fundamentally different approach from Cassandra’s. And we significantly extended the CQL protocol with concepts such as shard-awareness, BYPASS CACHE, per-query configurable TIMEOUTs, and much more.

Finally, we arrive at the fifth generation of ScyllaDB, which is still unfolding. This phase represents our path toward strong consistency and elasticity with Raft and Tablets. For more about the significance of this, read on…

Capabilities That Set ScyllaDB Apart

Our engineers have introduced lots of interesting features over the past decade. Based on my interactions with former Cassandra users, I think these are the most interesting to discuss here.

Tablets Data Distribution

Each ScyllaDB table is split into smaller fragments (“tablets”) to evenly distribute data and load across the system. Tablets bring elasticity to ScyllaDB, allowing you to instantly double, triple, or even 10x your cluster size to accommodate unpredictable traffic surges. They also enable more efficient use of storage, reaching up to 90% utilization. Since teams can quickly scale out in response to traffic spikes, they can satisfy latency SLAs without needing to overprovision “just in case.”

Raft-based Strong Consistency for Metadata

Raft introduces strong consistency to ScyllaDB’s metadata. Gone are the days when a schema change could push your cluster into disagreement or you’d lose access because you forgot to update the replication factor of your authentication keyspace (issues that still plague Cassandra).

Workload Prioritization

Workload prioritization allows you to consolidate multiple workloads under a single cluster, each with its own SLA. Basically, it controls how different workloads compete for system resources.
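
As a rough illustration of how competing workloads can share a resource, the Go sketch below implements weighted max-min fair sharing. This is a generic technique, not ScyllaDB's scheduler; it assumes each workload has hypothetical relative shares (weights) and a demand. Under contention, capacity is split in proportion to shares; when a workload needs less than its fair portion, the leftover goes to the others.

```go
package main

import "sort"

// Workload is a hypothetical competing workload.
type Workload struct {
	Name   string
	Shares float64 // relative weight; must be > 0
	Demand float64 // capacity the workload would use if unconstrained
}

// Allocate splits capacity using weighted max-min fairness.
func Allocate(capacity float64, ws []Workload) map[string]float64 {
	alloc := make(map[string]float64, len(ws))
	order := append([]Workload(nil), ws...) // copy; don't reorder caller's slice
	// Least demanding (per share) first, so workloads that need less than
	// their fair portion are satisfied early and free up capacity.
	sort.Slice(order, func(i, j int) bool {
		return order[i].Demand/order[i].Shares < order[j].Demand/order[j].Shares
	})
	remaining := capacity
	totalShares := 0.0
	for _, w := range order {
		totalShares += w.Shares
	}
	for _, w := range order {
		fair := remaining * w.Shares / totalShares
		give := fair
		if w.Demand < fair {
			give = w.Demand // unused portion stays in the pool for the rest
		}
		alloc[w.Name] = give
		remaining -= give
		totalShares -= w.Shares
	}
	return alloc
}
```

For example, with 100 units of capacity, a saturated OLTP workload with 800 shares and a saturated analytics workload with 200 shares get 80 and 20 units. If OLTP only demands 30, analytics can use the remaining 70, so idle capacity is not wasted.
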
Teams use workload prioritization to favor urgent application requests that require immediate response times over others that can tolerate slight delays (e.g., large scans). Common use cases include balancing real-time vs. batch processing, splitting writes from reads, and workload/infrastructure consolidation.

Repair-based Operations

Repair-based operations ensure your cluster data stays in sync, even during topology changes. This addresses a long-standing data consistency flaw in Apache Cassandra, where operations like replacing failed nodes can result in data loss. ScyllaDB also fully eliminates the problem of data resurrection, thanks to repair-based tombstone garbage collection.

Incremental Compaction

Incremental compaction (ICS) has been the default compaction strategy in ScyllaDB for over five years. ICS greatly reduces temporary space amplification, leaving more disk space available for user data – and that eliminates the typical requirement of keeping 50% of your drive free. There is no comparable Cassandra feature. Cassandra only recently introduced Unified Compaction, which has yet to prove itself.

Row-based Cache

ScyllaDB’s row-based cache is also unique. It is enabled by default and requires no manual tuning. With the BYPASS CACHE extension, queries that read large amounts of rarely reused data (such as full scans) can skip the cache, preventing cache pollution and keeping important items from being evicted. Additionally, SSTable index caching significantly reduces I/O access time when fetching data from disk.

Per-shard Concurrency Limits and Rate Limiters

ScyllaDB includes per-shard concurrency limits and per-partition rate limiters to protect against unexpected spikes. Whether dealing with a misbehaving client or a flood of requests to a specific key, ScyllaDB ensures resilience where Cassandra often falls short.

DynamoDB Compatibility

ScyllaDB also offers a DynamoDB-compatible layer, further distancing itself from its Apache Cassandra origins.
This lets teams run their DynamoDB workloads on any cloud or on-prem – without code changes, and with 50% lower cost. This has helped quite a few teams consolidate multiple workloads on ScyllaDB.

What’s Next?

At the recent Monster SCALE Summit, CEO/co-founder Dor Laor shared a peek at what’s next for ScyllaDB. A few highlights…

Ready now (see this blog post and product page for details):

- The ability to safely run at 90% storage utilization
- Support for clusters with mixed instance type nodes
- Dynamic provisioning and flex credit

Short-term:

- Vector search
- Strongly consistent tables
- Fault injection service
- Transparent repairs
- Object and tiered storage
- Raft for strongly consistent tables

Longer-term:

- Multi-key transactions
- Analytics and transformations with UDFs
- Automated large partition balancing
- Immutable infrastructure for greater stability and reliability
- A replication mode for more flexible and efficient infrastructure changes

For details, watch the complete talk here.

To close, ScyllaDB is faster than Cassandra (I’ll share our latest benchmark results here soon). But both ScyllaDB and Cassandra have evolved to the point that ScyllaDB is no longer “just” a faster Cassandra. We’ve evolved beyond Cassandra. If your project needs more predictable performance – and/or could benefit from the elasticity, efficiency, and simplicity optimizations we’ve been focusing on for years now – you might also want to consider evolving beyond Cassandra.

We Built a Tool to Diagnose ScyllaDB Kubernetes Issues

Introducing Scylla Operator Analyze, a tool to help platform engineers and administrators deploy ScyllaDB clusters running on Kubernetes

Imagine it’s a Friday afternoon. Your company is migrating all its data to ScyllaDB and you’re in the middle of setting up the cluster on Kubernetes. Then, something goes wrong. Your time today is limited, but the sheer volume of ScyllaDB configuration feels endless.

To help you detect problems in ScyllaDB deployments, we built Scylla Operator Analyze, a command-line tool designed to automatically analyze Kubernetes-based ScyllaDB clusters, identify potential misconfigurations, and offer actionable diagnostics.

In modern infrastructure management, Kubernetes has revolutionized how we orchestrate containers and manage distributed systems. However, debugging complex Kubernetes deployments remains a significant challenge, especially in production-grade, high-performance environments like those powered by ScyllaDB. In this blog post, we’ll explain what Scylla Operator Analyze is, how it works, and how it may help platform engineers and administrators deploy ScyllaDB clusters running on Kubernetes. The repo we’ve been working on is available here. It’s a fork of Scylla Operator, but the project hasn’t been merged upstream (it’s highly experimental).

What is Scylla Operator Analyze?

Scylla Operator Analyze is a Go-based command-line utility that extends Scylla Operator by introducing a diagnostic command. Its goal is straightforward: automatically inspect a given Kubernetes deployment and report problems it identifies in the deployment configuration.

We designed our tool to help ScyllaDB’s technical support staff quickly diagnose known issues reported by our clients, both by providing solutions for simple problems and by offering helpful insights in more complex cases. However, it’s also freely available as a subcommand of the Scylla Operator binary.

The next few sections share how we implemented the tool.
If you want to go straight to example usage, skip to the Making a diagnosis section.

Capturing the cluster state

Kubernetes deployments consist of many components with various functions. Collectively, they are called resources. The Kubernetes API presents them to the client as objects containing fields with information about their configuration and current state.

Two modes of operation

Scylla Operator Analyze supports two ways of collecting this data:

Live Cluster Connection: The tool can connect directly to a Kubernetes cluster using the client-go library. Once connected, it retrieves data from Kubernetes resources and compiles it into an internal representation.

Archive-Based Analysis (Must-Gather): Alternatively, the tool can analyze archived cluster states created using a utility called must-gather. These archives contain YAML descriptions of resources, allowing offline analysis.

Diagnosis by analyzing symptoms

Symptoms are high-level objects representing certain issues that could occur while deploying a ScyllaDB cluster. A symptom contains the diagnosis of the problem and a suggestion on how to fix it, as well as a method for checking if the problem occurs in a given deployment (we cover this in the section about selectors).

In order to create objects representing more complex problems, symptoms can be used to create tree-like structures. For example, a problem that could manifest itself in a few different ways could be represented by many symptoms checking for all the different spots the problem could affect. Those symptoms would be connected to one root symptom, describing the cause of the problem. This way, if any of the sub-symptoms report that their condition is met, the tool can display the root cause instead of one specific manifestation of that problem.

Figure: Example of a symptom and the workflow used to detect it.

In this example, let’s assume that the driver is unable to provide storage, but NodeConfig does not report a nonexistent device. When checking if the symptom occurs, the tool will perform the following steps.

1. Check if the NodeConfig reports a nonexistent device – no.
2. Check if the driver is unable to provide storage – yes.

At this point we know the symptom occurs, so we don’t need to check for any more sub-symptoms. Since one of the sub-symptoms occurs, the main symptom (NodeConfig configured with nonexistent volume) is reported to the user.

Deployment condition description

Resources

As described earlier, Kubernetes deployments can be considered collections of many interconnected resources. All resources are described using so-called fields. Fields contain information identifying resources, deployment configuration, and descriptions of past and current states. Together, these data give the controllers all the information they need to supervise the deployment. Because of that, they are very useful for debugging issues and are the main source of information for our tool.

Among the resources’ fields is a special kind field, which describes what the resource is and indicates what other fields are available. Some fundamental Kubernetes resource kinds include Pods, Services, etc. Those can also be extended with custom ones, such as the ScyllaCluster resource kind defined by the Scylla Operator. This provides the most basic kind of grouping of resources in Kubernetes. Other fields are grouped in sections called Metadata, which provides identifying information, Spec, which contains configuration, and Status, which contains the current status. Such a description in YAML format may look something like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2024-12-03T17:47:06Z"
  labels:
    scylla/cluster: scylla
    scylla/datacenter: us-east-1
    scylla/scylla-version: 6.2.0
  name: scylla-us-east-1-us-east-1a-0
  namespace: scylla
spec:
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: data-scylla-us-east-1-us-east-1a-0
status:
  conditions:
  - lastTransitionTime: "2024-12-03T17:47:06Z"
    message: '0/1 nodes are available: pod has unbound immediate PersistentVolumeClaims. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.'
    reason: Unschedulable
    status: "False"
    type: PodScheduled
  phase: Pending
```

Selectors

An accurate description of symptoms (presented in the previous section) requires a method for describing conditions in the deployment using information contained in the resources’ fields. Moreover, because of the distributed nature of both Kubernetes deployments and ScyllaDB, these descriptions must also specify how the resources are related to one another.

Our tool comes with a package providing selectors. They offer a simple, yet powerful, way to describe deployment conditions using Kubernetes objects in a way that’s flexible and allows for automatic processing using the provided selection engine. A selector can be thought of as a query because it specifies the kinds of resources to select and criteria which they should satisfy.

Selectors are constructed using four main methods of the selector structure builder. First, the developer specifies resources to be selected with the Select method by specifying their kind and a predicate which should be true for the selected resources. The predicate is provided as a standard Go closure to allow for complex conditions if needed. Next, the developer may call the Relate method to define a relationship between two kinds of resources. This is again defined using a Go closure as a predicate, which must hold for the two objects to be considered in the same result set. This can establish a context within which an issue should be inspected (for example: connecting a Pod to relevant Storage resources).
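
The following Go sketch is a hypothetical, heavily simplified miniature of such a selection engine, not the tool's actual package: resources are plain structs instead of client-go objects, predicates return bool instead of (bool, error), and SelectWithNil and Where are omitted. It shows the core idea: each Select adds a labeled slot, each Relate adds a constraint between two slots, and matching backtracks over candidate resources, checking a relation as soon as both of its slots are bound.

```go
package main

// Resource is a simplified stand-in for a Kubernetes object.
type Resource struct {
	Kind   string
	Name   string
	Fields map[string]string
}

type slot struct {
	label string
	kind  string
	pred  func(Resource) bool // nil accepts any resource of the kind
}

type relation struct {
	a, b string
	pred func(a, b Resource) bool
}

// Selector is a hypothetical, untyped miniature of a selector builder.
type Selector struct {
	slots []slot
	rels  []relation
}

func New() *Selector { return &Selector{} }

// Select adds a labeled slot filled by resources of the given kind.
func (s *Selector) Select(label, kind string, pred func(Resource) bool) *Selector {
	s.slots = append(s.slots, slot{label, kind, pred})
	return s
}

// Relate requires pred to hold between the resources bound to labels a and b.
func (s *Selector) Relate(a, b string, pred func(a, b Resource) bool) *Selector {
	s.rels = append(s.rels, relation{a, b, pred})
	return s
}

// Match returns every assignment of resources to labels that satisfies all
// predicates, found by backtracking over the slots in declaration order.
func (s *Selector) Match(all []Resource) []map[string]Resource {
	var out []map[string]Resource
	bound := make(map[string]Resource)
	var walk func(i int)
	walk = func(i int) {
		if i == len(s.slots) {
			set := make(map[string]Resource, len(bound))
			for k, v := range bound {
				set[k] = v
			}
			out = append(out, set)
			return
		}
		sl := s.slots[i]
		for _, r := range all {
			if r.Kind != sl.kind || (sl.pred != nil && !sl.pred(r)) {
				continue
			}
			bound[sl.label] = r
			if s.relationsHold(bound) {
				walk(i + 1)
			}
			delete(bound, sl.label)
		}
	}
	walk(0)
	return out
}

// relationsHold checks only the relations whose two labels are already bound.
func (s *Selector) relationsHold(bound map[string]Resource) bool {
	for _, rel := range s.rels {
		a, okA := bound[rel.a]
		b, okB := bound[rel.b]
		if okA && okB && !rel.pred(a, b) {
			return false
		}
	}
	return true
}
```

Selecting a ScyllaDB Pod and a PersistentVolumeClaim related by claim name over a mix of Pods and claims yields exactly one result set, pairing the Pod with its own data claim; unrelated claims are pruned as soon as the relation fails.
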

Finally, constraints for individual resources in the result set can be specified with the Where method, similarly to how it is done in the Select method. This method is mainly meant to be used with the SelectWithNil method. The SelectWithNil method is the same as the Select method; the only difference is that it allows returning a special nil value instead of a resource instance. This nil value signifies that no resources of a given kind match all the other resources in the resulting set. Thanks to this, selectors can also be used to detect a scenario where a resource is missing just by examining the context of related resources.

An example selector (shortened for brevity) may look something like this:

```go
selector.New().
	Select("scylla-pod", selector.Type[*v1.Pod](), func(p *v1.Pod) (bool, error) {
		/* ... */
	}).
	SelectWithNil("storage-class", selector.Type[*storagev1.StorageClass](), nil).
	Select("pod-pvc", selector.Type[*v1.PersistentVolumeClaim](), nil).
	Relate("scylla-pod", "pod-pvc", func(p *v1.Pod, pvc *v1.PersistentVolumeClaim) (bool, error) {
		// The Pod and PVC belong together if any of the Pod's volumes claims this PVC.
		for _, volume := range p.Spec.Volumes {
			vPvc := volume.PersistentVolumeClaim
			if vPvc != nil && vPvc.ClaimName == pvc.Name {
				return true, nil
			}
		}
		return false, nil
	}).
	Relate("pod-pvc", "storage-class", /* ... */).
	Where("storage-class", func(sc *storagev1.StorageClass) (bool, error) {
		// Match only when no StorageClass relates to the PVC (SelectWithNil produced nil).
		return sc == nil, nil
	})
```

In symptom definitions, selectors for a corresponding condition are used and are usually constructed alongside them. Such a selector provides a description of a faulty condition. This means that if there is a matching set of resources, it can be inferred that the symptom occurs. Finally, given all the deployment’s resources, the selector can be used to construct an iterator-like object that provides a list of all the sets of resources that match the selector. Symptoms can then use those results to detect issues and generate diagnoses containing useful debugging information.

Making a diagnosis

When a symptom relating to a problematic condition is detected, a diagnosis for the user is generated. Diagnoses are automatically generated report objects summarizing the problem and providing additional information. A diagnosis consists of an issue description, identifiers of resources related to the fault, and hints for the user (when available). Hints may contain, for example, a description of steps to remedy the issue or a reference to a bug tracker. In the final stage of analysis, those diagnoses are presented to the user and the output may look something like this:

```
Diagnoses:
  scylladb-local-xfs StorageClass used by a ScyllaCluster is missing
  Suggestions: deploy scylladb-local-xfs StorageClass (or change StorageClass)
  Resources GVK:
    /v1.PersistentVolumeClaim, scylla/data-scylla-us-east-1-us-east-1a-0 (4…)
    scylla.scylladb.com/v1.ScyllaCluster, scylla/scylla (b6343b79-4887-497b…)
    /v1.Pod, scylla/scylla-us-east-1-us-east-1a-0 (0e716c3f-6432-4eeb-b5ff-…)
```

Learn more

As we suggested, Kubernetes deployments of ScyllaDB involve many interacting components, each of which has its own quirks. Here are a few strategies to help in diagnosing the problems you encounter:

- Run Scylla Doctor
- Check our troubleshooting guide
- Look for open issues on our GitHub
- Check our forum
- Ask us on Slack
- Learn more about ScyllaDB at ScyllaDB University

Good luck, fellow troubleshooter!

Building easy-cass-mcp: An MCP Server for Cassandra Operations

I’ve started working on a new project that I’d like to share, easy-cass-mcp, an MCP (Model Context Protocol) server specifically designed to assist Apache Cassandra operators.

After spending over a decade optimizing Cassandra clusters in production environments, I’ve seen teams consistently struggle to interpret system metrics, configuration settings, schema design, and system configuration, and, most importantly, to understand how they all affect each other. While many teams have solid monitoring through JMX-based collectors, extracting and contextualizing specific operational metrics for troubleshooting or optimization can still be cumbersome. The good news is that we now have the infrastructure to make all this operational knowledge accessible through conversational AI.

How GE Healthcare Took DynamoDB on Prem for Its AI Platform

How GE Healthcare moved a DynamoDB‑powered AI platform to hospital data centers, without rewriting the app

How do you move a DynamoDB‑powered AI platform from AWS to hospital data centers without rewriting the app? That’s the challenge that Sandeep Lakshmipathy (Director of Engineering at GE Healthcare) decided to share with the ScyllaDB community a few years back. We noticed an uptick in people viewing this video recently, so we thought we’d share it here, in blog form. Watch or read, your choice.

Intro

Hi, I’m Sandeep Lakshmipathy, the Director of Engineering for the Edison AI group at GE Healthcare. I have about 20 years of experience in the software industry, working predominantly in product and platform development. For the last seven years I’ve been in the healthcare domain at GE, rolling out solutions for our products.

Let me start by setting some context with respect to the healthcare challenges that we face today. Roughly 130M babies are born every year; about 350K every single day. There’s a 40% shortage of healthcare workers to help bring these babies into the world. Ultrasound scans help ensure the babies are healthy, but those scans are user‑dependent, repetitive, and manual. Plus, clinical training is often neglected. Why am I talking about this? Because AI solutions can really help in this specific use case and make a big difference.

Now, consider this matrix of opportunities that AI presents. Every single tiny dot within each cell is an opportunity in itself. The newborn‑baby challenge I just highlighted is one tiny speck in this giant matrix. It shows what an infinite space this is, and how AI can address each challenge in a unique way. GE Healthcare is tackling these opportunities through a platform approach.

Edison AI Workbench (cloud)

We ingest data from many devices and customers: scanners, research networks, and more. Data is then annotated and used to train models. Once the models are trained, we deploy them onto devices.
The Edison AI Workbench helps data scientists view and annotate data, train models, and package them for deployment. The whole Edison AI Workbench runs in AWS and uses AWS resources to provide a seamless experience to the data scientists and annotators who are building AI solutions for our customers.

Bringing Edison AI Workbench on‑prem

When we showed this solution to our research customers, they said, “Great, we really like the features and the tools… but can we have Edison AI Workbench on‑prem?” So, we started thinking: How do we take something that lives in the AWS cloud, uses all those resources, and relies heavily on AWS services – and move it onto an on‑prem server while still giving our research customers the same experience?

That’s when we began exploring different options. Since DynamoDB was one of the main things tying us to the AWS cloud, we started looking for a way to replace it in the on‑prem world. After some research, we saw that ScyllaDB was a good DynamoDB replacement because it provides API compatibility with DynamoDB. Without changing much code and keeping all our interfaces the same, we migrated the Workbench to on‑prem and quickly delivered what our research customers asked for.

Why ScyllaDB Alternator (DynamoDB-Compatible API)?

Moving cloud assets on‑prem is not trivial; expertise, time‑to‑market, service parity, and scalability all matter. We also wanted to keep our release cycles short: in the cloud we can push features every sprint; on‑prem, we still need regular updates. Keeping the database layer similar across cloud and on‑prem minimized rework. Quick proofs of concept confirmed that ScyllaDB + Alternator met our needs, and using Kubernetes on‑prem let us port microservices comfortably.

The ScyllaDB team has always been available with respect to developer‑level interactions, quick fixes in nightly builds, and constant touch‑points with technical and marketing teams. All of this helped us move fast.
For example, DynamoDB Streams wasn’t yet in ScyllaDB when we adopted it (back in 2020), but the team provided work‑arounds until the feature became available. They also worked with us on licensing to match our needs. This partnership was crucial to the solution’s evolution. By partnering with the ScyllaDB team, we could take a cloud‑native Workbench to our on‑prem research customers in healthcare.

Final thoughts

Any AI solution rollout depends on having the right data volume and balance. It’s the annotations that drive model quality; without the right volume and balance, the model will be brittle and won’t have the necessary diversity. Supporting all these on‑prem Workbench use cases helps because it takes the tools to where the data is. The cloud Workbench handles data in the cloud data lake. At the same time, our research customers who are partnering with us can use it on‑prem, taking the tools to where the data is: in their hospital network.

Real-Time Database Read Heavy Workloads: Considerations and Best Practices

Explore the challenges associated with real-time read-heavy database workloads and get tips for addressing them

Reading and writing are distinctly different beasts. This is true with reading/writing words, reading/writing code, and also when we’re talking about reading/writing data to a database. So, when it comes to optimizing database performance, your read:write ratio really does matter.

We recently wrote about performance considerations that are important for write-heavy workloads – covering factors like LSM tree vs B-tree engines, payload size, compression, compaction, and batching. But read-heavy database workloads bring a different set of challenges; for example:

- Scaling a cache: Many teams try to speed up reads by adding a cache in front of their database, but the cost and complexity can become prohibitive as the workload grows.

- Competing workloads: Things might work well initially, but as new use cases are added, a single workload can end up bottlenecking all the others.

- Constant change: As your dataset grows or user behaviors shift, hotspots might surface.

In this article, we explore high-level considerations to keep in mind when you have a latency-sensitive read-heavy workload. Then, we’ll introduce a few ScyllaDB capabilities and best practices that are particularly helpful for read-heavy workloads.

What Do We Mean by “a Real-Time Read Heavy Workload”?

First, let’s clarify what we mean by a “real-time read-heavy” workload. We’re talking about workloads that:

- Involve a large amount of sustained traffic (e.g., over 50K OPS)

- Involve more reads than writes

- Are bound by strict latency SLAs (e.g., single-digit millisecond P99 latency)

Here are a few examples of how they manifest themselves in the wild:

- Betting: Everyone betting on a given event is constantly checking individual player, team, and game stats as the match progresses.
- Social networks: A small subset of people are actually posting new content, while the vast majority of users are typically just browsing through their feeds and timelines.

- Product catalogs: As with social media, there’s a lot more browsing than actual updating.

Considerations

Next, let’s look at key considerations that impact read performance in real-time database systems.

The Database’s Read Path

To understand how databases like ScyllaDB process read operations, let’s recap the read path. When you submit a read (a SELECT statement), the database first checks for the requested data in memtables, which are in-memory data structures that temporarily hold your recent writes. Additionally, the database checks whether the data is present in the cache.

Why is this extra step necessary? Because the memtable may not always hold the latest data. Sometimes data could be written out-of-order, especially if applications consume data from unordered sources. Since the protocol allows clients to set record timestamps themselves, a write that arrives later can carry an older timestamp than data already on disk. Checking both the memtable and the cache is therefore necessary to ensure that the latest write gets returned.

On a cache miss, the database then goes to disk and takes one of two actions:

- If the data is stored on disk, the database populates the cache to speed up subsequent reads.

- If the data doesn’t exist on disk, the database notes this absence in the cache – avoiding further unnecessary lookups there.

As memtables flush to disk, the data also gets merged with the cache. That way, the cache ends up reflecting the latest on-disk data.

Hot vs. Cold Reads

Reading from cache is always faster than reading from disk. The more data your database can serve directly from cache, the better its performance (since reading data from memory has a practically unlimited fetch ceiling). But how can you tell whether your reads are going to cache or disk? Monitoring. You can use tools such as the ScyllaDB Monitoring stack to learn all about your cache hits and misses.
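To make the read path described above more concrete, here is a minimal Python model of it. It is a simplified sketch under stated assumptions, not ScyllaDB's implementation (which is written in C++): plain dicts stand in for the memtable, the row cache, and the SSTables, each key holds a single cell, and `Cell`, `read`, and the `_ABSENT` marker are hypothetical names. It illustrates two points: both the memtable and the cache must be consulted because a client-supplied timestamp (for example via CQL's `USING TIMESTAMP`) can make an older write arrive later, and a cache miss populates the cache with either the on-disk data or a marker recording that the key is absent.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Union


@dataclass(frozen=True)
class Cell:
    """One stored value plus its write timestamp (which the client may set)."""
    value: str
    timestamp: int


class _Absent:
    """Hypothetical marker cached on a miss when the key does not exist on disk."""


_ABSENT = _Absent()
CacheEntry = Union[Cell, _Absent]


def read(key: str,
         memtable: Dict[str, Cell],
         cache: Dict[str, CacheEntry],
         sstables: Dict[str, Cell]) -> Optional[Cell]:
    """Hypothetical simplified read path: memtable + cache, falling back to disk."""
    candidates: List[Cell] = []
    if key in memtable:
        candidates.append(memtable[key])

    entry = cache.get(key)
    if entry is None:
        # Cache miss: go to disk, then remember either the data or its absence,
        # so the next read of this key does not touch disk again.
        entry = sstables.get(key, _ABSENT)
        cache[key] = entry
    if isinstance(entry, Cell):
        candidates.append(entry)

    # Last write wins by timestamp, not by which structure the cell came from.
    return max(candidates, key=lambda c: c.timestamp, default=None)
```

In this sketch the cache is never updated by memtable flushes; in ScyllaDB, flushed data is merged into the cache, as described above.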
The fewer cache misses, the better your read latencies.

ScyllaDB uses a Least Recently Used (LRU) caching strategy, similar to Redis and Memcached. When the cache gets full, the least recently used data is evicted to make room for new entries. With this LRU approach, you need to be mindful about your reads. You want to avoid situations where a few “expensive” reads end up evicting important items from your cache.

If you don’t optimize cache usage, you might encounter a phenomenon called “cache thrashing.” That’s what happens when you’re continuously evicting and replacing items in your cache, essentially rendering the cache ineffective. For instance, full table scans can create significant cache pressure, particularly when your working set size is larger than your available caching space. During a scan, if a competing workload relies on reading frequently cached data, its read latency will momentarily increase because those items were evicted. To prevent this situation, expensive reads should specify options like ScyllaDB’s BYPASS_CACHE so that their results don’t evict important items.

Paging

Paging is another important factor to consider. It’s designed to prevent the database from running out of memory when scanning through large results. Basically, rows get split into pages as defined by your page size, and selecting an appropriate page size is essential for minimizing end-to-end latency.

For example, assume you have a quorum read request in a 3-node cluster. Two replicas must respond for the request to be successful. Each replica computes a single page, which then gets reconciled by the coordinator before returning data back to the client. Note that:

- ScyllaDB latencies are reported per page. If your application sees high latencies while the database side reports low latencies, that indicates your clients may be paging often.

- Smaller page sizes increase the number of client-server roundtrips.
For example, retrieving 1,000 rows with a page size of 10 requires 100 client-server round trips, impacting latency. Testing various page sizes helps you find the optimal balance.

- Most drivers default to 5,000 rows per page, which works well in most cases, but you may want to increase from the defaults when scanning through wide rows, or during full scans – at the expense of letting the database work more before receiving a response. Sometimes trial and error is needed to get the page size nicely tuned for your application.

Tombstones

In Log-Structured Merge-tree (LSM-tree) databases like ScyllaDB, handling tombstones (markers for deleted data) is also important for read performance. Tombstones ensure that deletions are properly propagated across replicas to prevent deleted data from being “resurrected.” They’re critical for maintaining correctness. However, read-heavy workloads with frequent deletions may have to process lots of tombstones to return a single page of live data. This can really impact latency.

Consider this extreme example: tracing data showed that a simple SELECT query took a whopping 6 seconds to return a single row because it had to go through 10 million tombstones.

There are a couple of ways to avoid this: tuning compaction strategies, such as switching to the more aggressive LeveledCompactionStrategy or using the Incremental Compaction Strategy (ICS) Space Amplification Goal, or optimizing your access patterns to scan through fewer dead rows on every point query.

Optimizing Read-Heavy Workloads with ScyllaDB

While ScyllaDB’s LSM tree storage engine makes it quite well-suited for write-heavy workloads, our engineers have introduced a variety of features that optimize it for ultra-low latency reads as well.

ScyllaDB Cache

One of ScyllaDB’s key components for achieving low latency is its unique caching mechanism. Many databases rely on the operating system’s page cache, which can be inefficient and doesn’t provide the level of control needed for predictable low latency.
The OS cache lacks workload-specific context, making it difficult to prioritize which items should remain in memory and which can be safely evicted. At ScyllaDB, our engineering team addressed this by implementing our own unified internal cache. When ScyllaDB starts, it locks most of the server’s memory and directly manages it, bypassing the OS cache. Additionally, ScyllaDB’s cache uses a shared-nothing approach, giving each shard/vCPU its own cache, memtables, and SSTables. This eliminates the need for concurrency locks and reduces context switching, further maximizing performance. You can read more about that unified cache in this engineering blog post.

SSTable Index Caching

Another performance-focused feature of ScyllaDB is its ability to cache SSTable indexes in memory. Since working sets often exceed the memory available, reads sometimes go to disk. However, disk access is costly. By caching SSTable indexes, ScyllaDB reduces disk IO costs by up to 3x. This significantly improves read performance – particularly during cache misses. ScyllaDB’s index caching is demand-driven: entries are cached upon access and evicted on demand. If your workload reads heavily from disk, it’s often helpful to increase the size of this index cache.

Workload Prioritization

Competing workloads can lead to latency issues, as we mentioned at the beginning of this article. ScyllaDB provides a solution for this: its Workload Prioritization feature, which allows you to assign priority levels to different workloads. This is particularly useful if you have workloads with varying latency requirements, as it lets you prioritize latency-sensitive queries over others. You assign service levels to each workload, then ScyllaDB’s internal scheduler handles query prioritization according to those predefined levels. To learn more, see my recent talk from ScyllaDB Summit.

Heat-Weighted Load Balancing (HWLB)

Heat-Weighted Load Balancing (HWLB) is a powerful ScyllaDB feature that’s commonly overlooked.
HWLB mitigates performance issues that can arise when a replica node restarts with a cold cache, like after a rolling restart for a configuration change or an upgrade. In such cases, other nodes notice that the replica’s cache is cold and gradually start directing requests to the restarted node until its cache eventually warms up.

The HWLB algorithm controls how requests are routed to a cold replica: a mathematical formula paces the requests sent to a node as it warms up. HWLB ensures that nodes with a cold cache do not immediately receive full traffic, in turn preventing abrupt latency spikes.

When restarting ScyllaDB replicas, pay attention to the Reciprocal Miss Rate (HWLB) panel within ScyllaDB Monitoring. Nodes with a higher ratio will serve more reads compared to other nodes.

Prepared statements with ScyllaDB’s shard-aware drivers

On the client side, using prepared statements is a critical best practice. A prepared statement is a query parsed by ScyllaDB and then saved for later use. Prepared statements allow ScyllaDB’s shard-aware drivers to route queries directly to the replica nodes and shards that hold the requested data. Without prepared statements, a query may be routed to a node without the required data – resulting in extra round trips. With prepared statements, queries are always routed efficiently, minimizing network overhead and improving response times.

Try it out: This ScyllaDB University lesson walks you through prepared statements.

High concurrency

Perhaps the most important tip here is to remember that ScyllaDB loves concurrency… but only up to a certain point. If you send too few requests to the database, you won’t be able to fully maximize its potential. However, if you have unbounded concurrency (you send too many requests to the database), that excessive concurrency can cause performance degradation.

To find the sweet spot, apply this formula: *Concurrency = Throughput × Latency*.
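The formula above is an application of Little's law: the average number of requests in flight equals throughput multiplied by average latency. Below is a minimal Python sketch of the sizing arithmetic. The function names are hypothetical, and mapping the result onto real settings depends on your driver, since each driver names its connection-pool and in-flight-request options differently.

```python
import math


def target_concurrency(throughput_ops_per_s: float, avg_latency_ms: float) -> int:
    """Little's law: in-flight requests = throughput x latency (ms converted to s)."""
    return math.ceil(throughput_ops_per_s * avg_latency_ms / 1000.0)


def max_in_flight_per_connection(concurrency: int, connections: int) -> int:
    """Smallest per-connection limit such that connections x limit covers the target."""
    if connections <= 0:
        raise ValueError("connections must be positive")
    return math.ceil(concurrency / connections)


def adjustment(current: int, target: int) -> str:
    """Compare the concurrency allowed by the driver settings with the target."""
    if current > target:
        return "reduce"
    if current < target:
        return "increase"
    return "keep"


if __name__ == "__main__":
    target = target_concurrency(200_000, 1.0)
    per_conn = max_in_flight_per_connection(target, connections=8)
    print(target, per_conn)  # 200 25
```

Rounding up errs on the side of slightly more concurrency than the target; treat the numbers as a starting point to verify against measured throughput and latency.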
For example, if you want to run 200K operations per second with an average latency of 1ms, you would aim for a concurrency level of 200 (200,000 ops/s × 0.001 s). Using this calculation, adjust your driver settings – setting the number of connections and maximum in-flight requests per connection to meet your target concurrency. If your driver settings yield a concurrency higher than needed, reduce them. If it’s lower, increase them accordingly.

Wrapping Up

As we’ve discussed, there are a lot of ways you can keep latencies low with read-heavy workloads – even on databases such as ScyllaDB which are also optimized for write-heavy workloads. In fact, ScyllaDB performance is comparable to dedicated caching solutions like Memcached for certain workloads.

If you want to learn more, here are some firsthand perspectives from teams who tackled some interesting read-heavy challenges:

- Discord: With millions of users actively reading and searching chat history, Discord needs ultra-low-latency reads and high throughput to maintain real-time interactions at scale.

- Epic Games: To support Unreal Engine Cloud, Epic Games needed a high-speed, scalable metadata store that could handle rapid cache invalidation and support metadata storage for game assets.

- ZeroFlucs: To power their sports betting application, ZeroFlucs had to process requests in near real-time, constantly, and in a region local to both the customer and the data.

Also, take a look at the following video, where we go into even greater depth on these read-heavy challenges and also walk you through what these workloads look like on ScyllaDB.

easy-cass-stress Joins the Apache Cassandra Project

I’m taking a quick break from my series on Cassandra node density to share some news with the Cassandra community: easy-cass-stress has officially been donated to the Apache Software Foundation and is now part of the Apache Cassandra project ecosystem as cassandra-easy-stress.

Why This Matters

Over the past decade, I’ve worked with countless teams struggling with Cassandra performance testing and benchmarking. The reality is that stress testing distributed systems requires tools that can accurately simulate real-world workloads. Many tools make this difficult by requiring the end user to learn complex configurations and nuances. While consulting at The Last Pickle, I set out to create an easy-to-use tool that lets people get up and running in just a few minutes.

Azure fault domains vs availability zones: Achieving zero downtime migrations

The challenges of operating production-ready enterprise systems in the cloud include ensuring that applications remain up to date, secure, and able to benefit from the latest features. This can include operating system or application version upgrades, but it is not limited to them: it also covers advancements in cloud provider offerings and the retirement of older ones. Recently, NetApp Instaclustr undertook a migration activity moving (almost) all our Azure fault domain customers to availability zones and Standard SKU IP addresses.

Understanding Azure fault domains vs availability zones

The difference between Azure fault domains and availability zones is a critical distinction for ensuring high availability and fault tolerance. Fault domains offer physical separation within a data center, while availability zones expand on this by distributing workloads across data centers within a region. This enhances resiliency against failures, making availability zones a clear step forward.

The need for migrating from fault domains to availability zones

NetApp Instaclustr has supported Azure as a cloud provider for our Managed open source offerings since 2016. Originally, this offering was distributed across fault domains to ensure high availability using “Basic SKU public IP Addresses”, but this solution had some drawbacks when performing particular types of maintenance. Once Azure released availability zones in several regions, we extended our Azure support to them. Availability zones have a number of benefits, including more explicit placement of additional resources, and we leveraged “Standard SKU Public IPs” as part of this deployment.

When we introduced availability zones, we encouraged customers to provision new workloads in them. We also supported migrating workloads to availability zones, but we had not pushed existing deployments to do the migration. This was initially due to the reduced number of regions that supported availability zones.

In early 2024, we were notified that Azure would be retiring support for Basic SKU public IP addresses in September 2025. Notably, no new Basic SKU public IPs could be created after March 1, 2025. For us and our customers, this had the potential to impact cluster availability and stability, as we would be unable to add nodes and some replacement operations would fail.

Very quickly, we identified that we needed to migrate all customer deployments from Basic SKU to Standard SKU public IPs. Unfortunately, this operation involves node-level downtime, as we needed to stop each individual virtual machine, detach the IP address, upgrade the IP address to the new SKU, and then reattach it and start the instance. For customers who are operating their applications in line with our recommendations, node-level downtime does not have an impact on overall application availability; however, it can increase strain on the remaining nodes.

Given that we needed to perform this potentially disruptive maintenance by a specific date, we decided to evaluate the migration of existing customers to Azure availability zones.

Key migration considerations for Cassandra clusters

As with any migration, we were looking to perform this with zero application downtime, minimal additional infrastructure costs, and as much safety as possible. For some customers, we also needed to ensure that we did not change the contact IP addresses of the deployment, as this might require application updates on their side. We quickly worked out several ways to achieve this migration, each with its own set of pros and cons.

For our Cassandra customers, our go-to method for changing cluster topology is a data center migration. This is our zero-downtime migration method, which we have completed hundreds of times and have vast experience executing. The benefit here is that we can be extremely confident of application uptime through the entire operation, and confident in our ability to pause and reverse the migration if issues are encountered. The major drawback of a data center migration is the increased infrastructure cost during the migration period, as you effectively need to have both your source and destination data centers running simultaneously throughout the operation. The other item of note is that you will need to update your cluster contact points to the new data center.

For clusters running other applications, or for customers who are more cost-conscious, we evaluated doing a “node by node” migration from Basic SKU IP addresses in fault domains to Standard SKU IP addresses in availability zones. This does not incur any short-term increase in infrastructure cost; however, the upgrade from a Basic SKU public IP to a Standard SKU is irreversible, and different types of public IPs cannot coexist within the same fault domain. Additionally, this method offers fewer rollback options. Therefore, we needed to devise a plan to minimize risks for our customers and ensure a seamless migration.

Developing a zero-downtime node-by-node migration strategy

To achieve a zero-downtime “node by node” migration, we explored several options, one of which involved building tooling to migrate the instances in the cloud provider but preserve all existing configurations. The tooling automates the migration process as follows:

- Begin by stopping the first VM in the cluster. For cluster availability, ensure that only 1 VM is stopped at any time.

- Create an OS disk snapshot and verify its success, then do the same for data disks

- Ensure all snapshots are created and generate new disks from snapshots

- Create a new network interface card (NIC) and confirm its status is green

- Create a new VM and attach the disks, confirming that the new VM is up and running

- Update the private IP address and verify the change

- The public IP SKU will then be upgraded, making sure this operation is successful

- The public IP will then be reattached to the VM

- Start the VM
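The tooling steps above can be sketched as a strictly sequential rollout. The Python below is a hypothetical illustration, not Instaclustr's tooling: `STEPS`, `migrate_cluster`, `MigrationError`, and `run_step` are invented names, and `run_step` stands in for whatever cloud API calls perform and verify each step. Its point is the invariant from the first step: each VM is fully rebuilt and restarted before the next one is stopped, so at most one VM is down at any time, and a failed verification halts the whole rollout.

```python
from typing import Callable, Iterable, List

# Step order follows the list above; the names are hypothetical labels,
# not Azure SDK operations.
STEPS: List[str] = [
    "stop_vm",
    "snapshot_os_disk",
    "snapshot_data_disks",
    "create_disks_from_snapshots",
    "create_nic",
    "create_vm_and_attach_disks",
    "update_private_ip",
    "upgrade_public_ip_sku",
    "reattach_public_ip",
    "start_vm",
]


class MigrationError(RuntimeError):
    """Raised when a step cannot be verified; the rollout stops there."""


def migrate_cluster(vms: Iterable[str],
                    run_step: Callable[[str, str], bool]) -> None:
    """Migrate VMs one at a time.

    run_step(step, vm) performs a single step and returns True only after
    the step has been verified (e.g. snapshot succeeded, NIC status green).
    """
    for vm in vms:
        for step in STEPS:
            if not run_step(step, vm):
                # Halt immediately: no other VM is stopped while this one
                # needs attention.
                raise MigrationError(f"step {step!r} failed for {vm}")
```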

Even though the disks are created from snapshots of the original disks, our testing revealed several discrepancies in settings between the original VM and the new VM. For instance, certain configurations, such as caching policies, did not automatically carry over, requiring manual adjustments to align with our managed standards.

Recognizing these challenges, we decided to extend our existing node replacement mechanism to streamline our migration process. With this mechanism, a new instance is provisioned with a new OS disk while keeping the same IP and application data, and the Instaclustr Managed Platform configures the new node to be the same as the original node.

The next challenge: our existing solution was built so that the replacement node was provisioned exactly like the original. However, for this operation we needed the new node to be placed in an availability zone instead of the same fault domain. This required us to extend the replacement operation so that, when we triggered the replacement, the new node was placed in the desired availability zone. Once this work was completed, we had a replacement tool that ensured the new instance was correctly provisioned in the availability zone, with a Standard SKU, and without data loss.

Now that we had two very viable options, we went back to our existing Azure customers to outline the problem space, and the operations that needed to be completed. We worked with all impacted customers on the best migration path for their specific use case or application and worked out the best time to complete the migration. Where possible, we first performed the migration on any test or QA environments before moving onto production environments.

Collaborative customer migration success

Some of our Cassandra customers opted to perform the migration using our data center migration path; however, most customers opted for the node-by-node method. We successfully migrated the existing Azure fault domain clusters over to availability zones, with only a very small number of clusters remaining. These clusters are operating in Azure regions that do not yet support availability zones, but we were able to successfully upgrade their public IPs from the Basic SKUs that are set for retirement to Standard SKUs.

No matter what provider you use, the pace of development in cloud computing can require significant effort to support ongoing maintenance and feature adoption to take advantage of new opportunities. For business-critical applications, being able to migrate to new infrastructure and leverage these opportunities while understanding the limitations and impact they have on other services is essential.

NetApp Instaclustr has a depth of experience in supporting business-critical applications in the cloud. You can read more about another large-scale migration we completed in The World’s Largest Apache Kafka and Apache Cassandra Migration, or head over to our console for a free trial of the Instaclustr Managed Platform.

The post Azure fault domains vs availability zones: Achieving zero downtime migrations appeared first on Instaclustr.

Integrating support for AWS PrivateLink with Apache Cassandra® on the NetApp Instaclustr Managed Platform

Discover how NetApp Instaclustr leverages AWS PrivateLink for secure and seamless connectivity with Apache Cassandra®. This post explores the technical implementation, challenges faced, and the innovative solutions we developed to provide a robust, scalable platform for your data needs.

Last year, NetApp achieved a significant milestone by fully integrating AWS PrivateLink support for Apache Cassandra® into the NetApp Instaclustr Managed Platform. Read our AWS PrivateLink support for Apache Cassandra General Availability announcement here. Our Product Engineering team made remarkable progress in incorporating this feature into various NetApp Instaclustr application offerings. NetApp now offers AWS PrivateLink support as an Enterprise Feature add-on for the Instaclustr Managed Platform for Cassandra, Kafka®, OpenSearch®, Cadence®, and Valkey™.

The journey to support AWS PrivateLink for Cassandra involved considerable engineering effort and numerous development cycles to create a solution tailored to the unique interaction between the Cassandra application and its client driver. After extensive development and testing, our product engineering team successfully implemented an enterprise ready solution. Read on for detailed insights into the technical implementation of our solution.

What is AWS PrivateLink?

PrivateLink is a networking solution from AWS that provides private connectivity between Virtual Private Clouds (VPCs) without exposing any traffic to the public internet. This solution is ideal for customers who require a unidirectional network connection (often due to compliance concerns), ensuring that connections can only be initiated from the source VPC to the destination VPC. Additionally, PrivateLink simplifies network management by eliminating the need to manage overlapping CIDRs between VPCs. The one-way connection allows connections to be initiated only from the source VPC to the managed cluster hosted in our platform (target VPC)—and not the other way around.

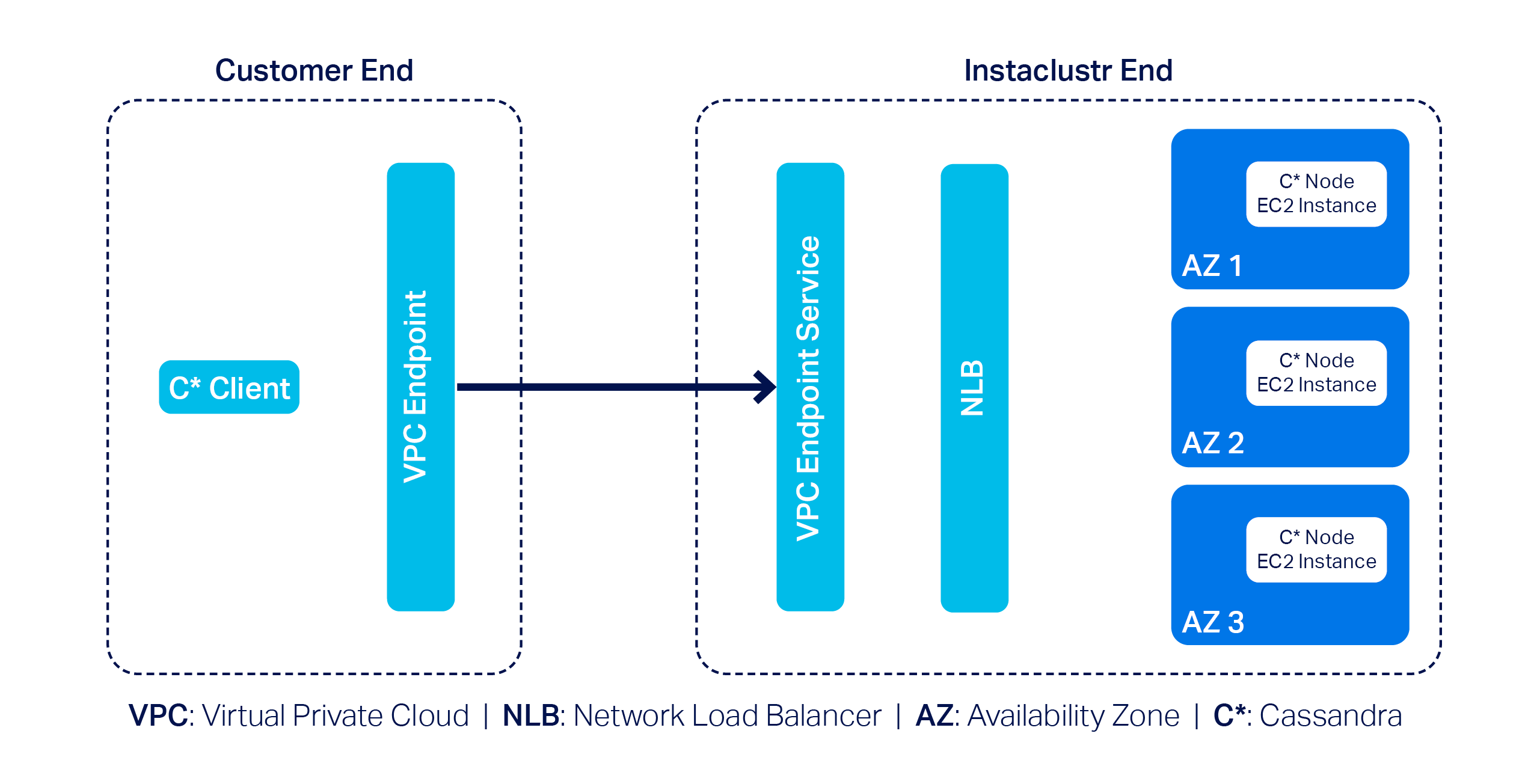

To get an idea of what major building blocks are involved in making up an end-to-end AWS PrivateLink solution for Cassandra, take a look at the following diagram—it’s a simplified representation of the infrastructure used to support a PrivateLink cluster:

In this example, we have a 3-node Cassandra cluster at the far right with one Cassandra node per Availability Zone (or AZ). Next, we have the VPC Endpoint Service and a Network Load Balancer (NLB). The Endpoint Service is essentially the AWS PrivateLink, and by design, AWS requires it to be backed by an NLB. That’s pretty much what we have to manage on our side.

On the customer side, they must create a VPC Endpoint that enables them to privately connect to the AWS PrivateLink on our end; naturally, customers will also need one or more Cassandra clients to connect to the cluster.

AWS PrivateLink support with Instaclustr for Apache Cassandra

To incorporate AWS PrivateLink support with Instaclustr for Apache Cassandra on our platform, we had to overcome a few technical challenges. First and foremost, the primary challenge was simple to state: Cassandra clients need to talk to each individual node in a cluster.

However, the problem is that nodes in an AWS PrivateLink cluster are only assigned private IPs; that is what the nodes would announce by default when Cassandra clients attempt to discover the topology of the cluster. Cassandra clients cannot do much with the received private IPs as they cannot be used to connect to the nodes directly in an AWS PrivateLink setup.

We devised a plan of attack to get around this problem:

- Make each individual Cassandra node listen for CQL queries on unique ports.

- Configure the NLB so it can route traffic to the appropriate node based on the relevant unique port.

- Let clients implement the AddressTranslator interface from the Cassandra driver. The custom address translator will need to translate the received private IPs to one of the VPC Endpoint Elastic Network Interface (or ENI) IPs without altering the corresponding unique ports.

To understand this approach better, consider the following example:

Suppose we have a 3-node Cassandra cluster. Under the proposed approach, we would need to do the following:

- Let the nodes listen on 172.16.0.1:6001 (in AZ1), 172.16.0.2:6002 (in AZ2), and 172.16.0.3:6003 (in AZ3)

- Configure the NLB to listen on the same set of ports

- Define and associate target groups based on the port. For instance, the listener on port 6002 will be associated with a target group containing only the node that is listening on port 6002.

- As for how the custom address translator is expected to work, let’s assume the VPC Endpoint ENI IPs are 192.168.0.1 (in AZ1), 192.168.0.2 (in AZ2), and 192.168.0.3 (in AZ3). The address translator should translate received addresses like so:

  - 172.16.0.1:6001 --> 192.168.0.1:6001

  - 172.16.0.2:6002 --> 192.168.0.2:6002

  - 172.16.0.3:6003 --> 192.168.0.3:6003
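A minimal Python sketch of the translation rule in this example follows. In a real client you would implement the driver's own hook instead, for instance the Java driver's `AddressTranslator` interface, whose `translate` method takes and returns an `InetSocketAddress`. The class name `EndpointAddressTranslator`, the `node_az` and `eni_ip_by_az` mappings, and the idea of supplying them as static configuration are all assumptions made for illustration. The key property is that only the IP changes: the unique port survives translation, so the NLB can still route each connection to the right node.

```python
from typing import Dict, Tuple

Address = Tuple[str, int]  # (ip, port) as announced by a node


class EndpointAddressTranslator:
    """Hypothetical translator: node private IP -> same-AZ VPC Endpoint ENI IP.

    The port is never changed, because the NLB uses it to pick the target node.
    """

    def __init__(self, node_az: Dict[str, str], eni_ip_by_az: Dict[str, str]) -> None:
        self._node_az = dict(node_az)            # private node IP -> AZ name
        self._eni_ip_by_az = dict(eni_ip_by_az)  # AZ name -> ENI IP in that AZ

    def translate(self, address: Address) -> Address:
        ip, port = address
        az = self._node_az.get(ip)
        eni_ip = self._eni_ip_by_az.get(az) if az is not None else None
        if eni_ip is None:
            # Unknown node or AZ: return the address unchanged.
            return address
        return (eni_ip, port)


# Configuration matching the example above.
translator = EndpointAddressTranslator(
    node_az={"172.16.0.1": "AZ1", "172.16.0.2": "AZ2", "172.16.0.3": "AZ3"},
    eni_ip_by_az={"AZ1": "192.168.0.1", "AZ2": "192.168.0.2", "AZ3": "192.168.0.3"},
)
```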

The proposed approach not only solves the connectivity problem but also allows for connecting to appropriate nodes based on query plans generated by load balancing policies.

Around the same time, we came up with a slightly modified approach as well: we realized the need for address translation can be mostly mitigated if we make the Cassandra nodes return the VPC Endpoint ENI IPs in the first place.

But the excitement did not last long! Why? Because we quickly discovered a key problem: any given AWS NLB supports a maximum of just 50 listeners.

While 50 is certainly a decent limit, the way we designed our solution meant we wouldn’t be able to provision a cluster with more than 50 nodes. This was quickly deemed to be an unacceptable limitation as it is not uncommon for a cluster to have more than 50 nodes; many Cassandra clusters in our fleet have hundreds of nodes. We had to abandon the idea of address translation and started thinking about alternative solution approaches.

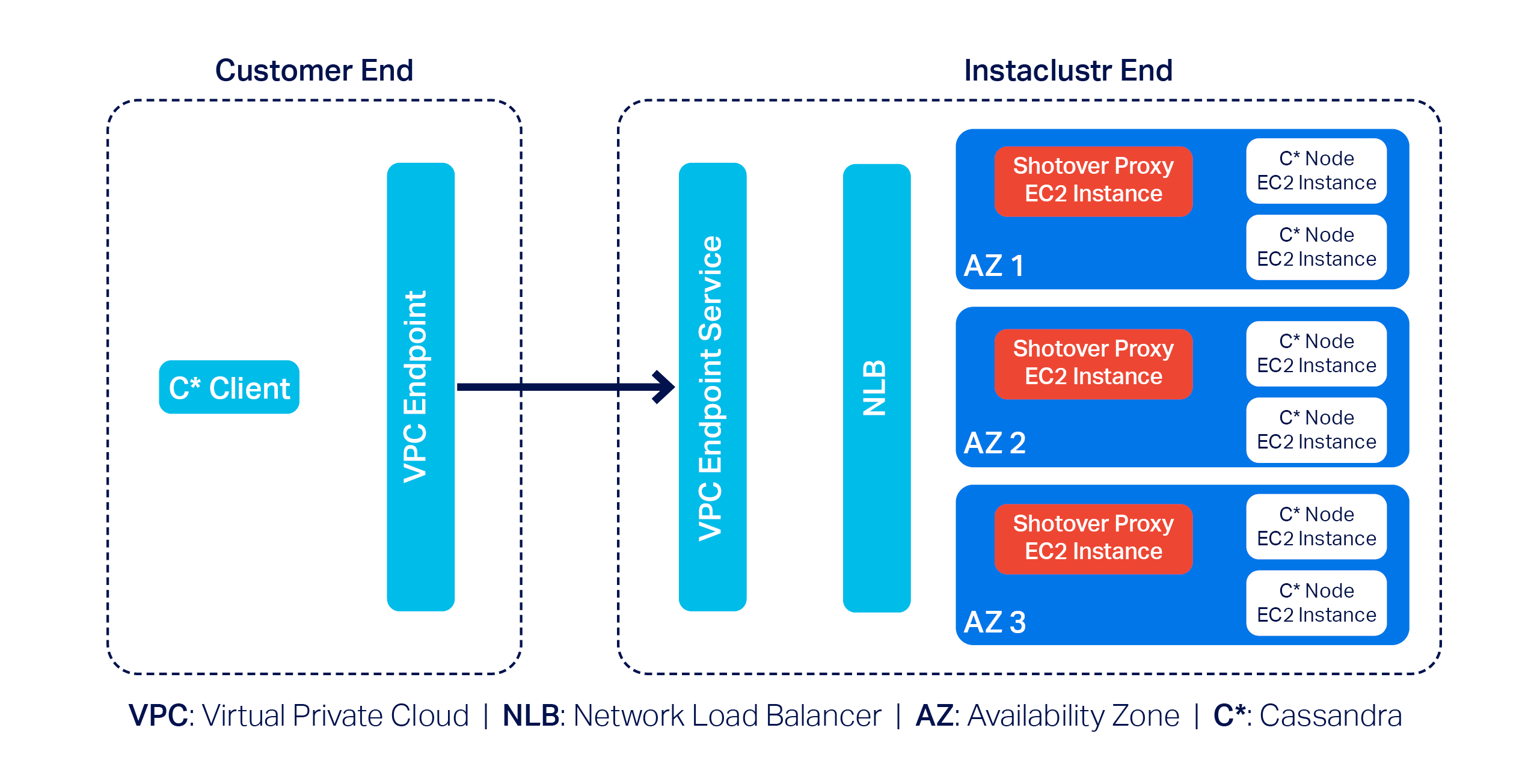

Introducing Shotover Proxy

We were disappointed but did not lose hope. Soon after, we devised a practical solution centred around using one of our open source products: Shotover Proxy.

Shotover Proxy is used with Cassandra clusters to support AWS PrivateLink on the Instaclustr Managed Platform. What is Shotover Proxy, you ask? Shotover is a layer 7 database proxy built to allow developers, admins, DBAs, and operators to modify in-flight database requests. By managing database requests in transit, Shotover gives NetApp Instaclustr customers AWS PrivateLink’s simple and secure network setup with the many benefits of Cassandra.

Below is an updated version of the previous diagram that introduces some Shotover nodes in the mix:

As you can see, each AZ now has a dedicated Shotover proxy node.

In the above diagram, we have a 6-node Cassandra cluster. The Cassandra cluster sitting behind the Shotover nodes is an ordinary Private Network Cluster. The role of the Shotover nodes is to manage client requests to the Cassandra nodes while masking the real Cassandra nodes behind them. To the Cassandra client, the Shotover nodes appear to be Cassandra nodes, and they appear to be the only nodes in the entire cluster! This is the secret recipe for AWS PrivateLink for Instaclustr for Apache Cassandra that enabled us to get past the challenges discussed earlier.

So how is this model made to work?

Shotover can alter certain requests from, and responses to, the client. Each Shotover node can examine the tokens allocated to the Cassandra nodes in its own AZ (aka rack) and claim to be the owner of all those tokens. This essentially makes each Shotover node appear to be an aggregation of the nodes in its own rack.

Because of this purposely crafted topology and token allocation metadata, the client directs its queries to the Shotover nodes, and each Shotover node passes them on to the appropriate Cassandra node and then transparently sends the responses back. It is worth noting that the Shotover nodes themselves do not store any data.
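The token aggregation can be sketched in a few lines of Python. This is a simplified model, not Shotover's implementation. It assumes each AZ (rack) holds a full replica of the data (for example, a replication factor of 3 across 3 AZs) and uses small integers in place of real Murmur3 tokens. The Node type and helper names are hypothetical. Each proxy advertises every token owned by the nodes in its rack, then forwards a request to the rack node whose token range covers the request's token.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    address: str   # private address of a Cassandra node
    rack: str      # AZ, which Cassandra treats as the rack
    tokens: tuple  # tokens owned by this node (small ints for illustration)


def rack_token_map(nodes, rack):
    """Sorted (token, owner) pairs for every node in one rack.

    The proxy for `rack` advertises all of these tokens as its own, so the
    client sees a single node that aggregates the whole rack."""
    return sorted((t, n.address) for n in nodes if n.rack == rack for t in n.tokens)


def route(token_map, token):
    """Pick the rack node that owns `token`.

    A node owning token T covers the range (previous token, T], so the owner
    is the first advertised token >= `token`, wrapping around the ring."""
    tokens = [t for t, _ in token_map]
    i = bisect.bisect_left(tokens, token)
    return token_map[i % len(token_map)][1]


cluster = [
    Node("10.0.1.10", "az-a", (0, 600)),
    Node("10.0.1.11", "az-a", (300, 900)),
    Node("10.0.2.10", "az-b", (100, 700)),
    Node("10.0.2.11", "az-b", (400, 1000)),
    Node("10.0.3.10", "az-c", (200, 800)),
    Node("10.0.3.11", "az-c", (500, 1100)),
]
az_a = rack_token_map(cluster, "az-a")
print([t for t, _ in az_a])  # tokens the az-a proxy claims: [0, 300, 600, 900]
print(route(az_a, 250))      # 10.0.1.11
print(route(az_a, 950))      # wraps around the ring: 10.0.1.10
```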

Because we only have 1 Shotover node per AZ in this design and there may be at most about 5 AZs per region, we only need that many listeners in the NLB to make this mechanism work. As such, the 50-listener limit on the NLB was no longer a problem.
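The arithmetic behind this is short enough to write down. A minimal sketch, assuming the per-node design needs one listener per Cassandra node and the Shotover design needs one listener per AZ; the function name and the 300-node example cluster are hypothetical.

```python
NLB_LISTENER_LIMIT = 50  # listener limit per NLB cited in this post


def listeners_needed(nodes_per_az, design):
    """Listeners an NLB needs for a cluster spread across AZs.

    nodes_per_az maps an AZ name to its Cassandra node count."""
    if design == "per-node":
        return sum(nodes_per_az.values())  # one listener per Cassandra node
    if design == "shotover":
        return len(nodes_per_az)  # one listener per Shotover node, one per AZ
    raise ValueError(f"unknown design: {design}")


cluster = {"az-a": 100, "az-b": 100, "az-c": 100}  # a 300-node cluster
for design in ("per-node", "shotover"):
    n = listeners_needed(cluster, design)
    print(design, n, "fits" if n <= NLB_LISTENER_LIMIT else "exceeds limit")
# per-node 300 exceeds limit
# shotover 3 fits
```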

Using Shotover to manage interoperability between the client driver and the cluster may sound straightforward to implement, but developing it was a year-long undertaking. As described above, the initial months of development went into routing CQL traffic to each node through a unique port and using the AddressTranslator interface from the Cassandra driver to manage client connections to the Cassandra cluster gracefully. While this approach did provide AWS PrivateLink support for a Cassandra cluster, we knew that the 50-listener limit on the NLB was a barrier to adoption, and we wanted to give our customers a solution that works for any Cassandra cluster, regardless of node count.

The next few months of engineering went into a proof of concept for an alternative solution, investigating how Shotover could manage client requests for a Cassandra cluster with any number of nodes. Once the proof of concept succeeded, we devoted subsequent effort to stability testing, and that work produced the stable solution described above.

We have also conducted performance testing to evaluate the relative performance of a PrivateLink-enabled Cassandra cluster compared with its non-PrivateLink counterpart. We ran multiple iterations because the test cases identified adjustments to Shotover; with those adjustments in place, the throughput and latency of the PrivateLink-enabled Cassandra cluster measured close to those of a standard Cassandra cluster.

Related content: Read more about creating an AWS PrivateLink-enabled Cassandra cluster on the Instaclustr Managed Platform

The following was our experimental setup for identifying the maximum throughput, in operations per second, of a PrivateLink-enabled Cassandra cluster compared with a non-PrivateLink Cassandra cluster:

- Baseline node size: i3en.xlarge

- Shotover Proxy node size on Cassandra cluster: CSO-PRD-c6gd.medium-54

- Cassandra version: 4.1.3

- Shotover Proxy version: 0.2.0

- Other configuration: repair and backup disabled, client encryption disabled

Throughput results

| Operation | Operation rate with PrivateLink and Shotover (ops/s) | Operation rate without PrivateLink (ops/s) |
|---|---|---|
| Mixed-small (3 Nodes) | 16608 | 16206 |
| Mixed-small (6 Nodes) | 33585 | 33598 |
| Mixed-small (9 Nodes) | 51792 | 51798 |

Across different cluster sizes, we observed no significant difference in operation throughput between PrivateLink and non-PrivateLink configurations.
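To put “no significant difference” in numbers, this Python snippet computes the relative throughput difference for each row of the table above. The figures are copied from the table; the helper name is our own.

```python
# Peak operations per second from the throughput table:
# cluster size -> (with PrivateLink and Shotover, without PrivateLink)
throughput = {
    3: (16608, 16206),
    6: (33585, 33598),
    9: (51792, 51798),
}


def relative_difference_pct(with_privatelink, without_privatelink):
    """Percentage change of the PrivateLink rate versus the baseline rate."""
    return 100.0 * (with_privatelink - without_privatelink) / without_privatelink


for nodes, (with_pl, without_pl) in throughput.items():
    print(f"{nodes} nodes: {relative_difference_pct(with_pl, without_pl):+.2f}%")
# 3 nodes: +2.48%
# 6 nodes: -0.04%
# 9 nodes: -0.01%
```

Every configuration lands within about 2.5% of its baseline, and the 6- and 9-node clusters within a fraction of a percent.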

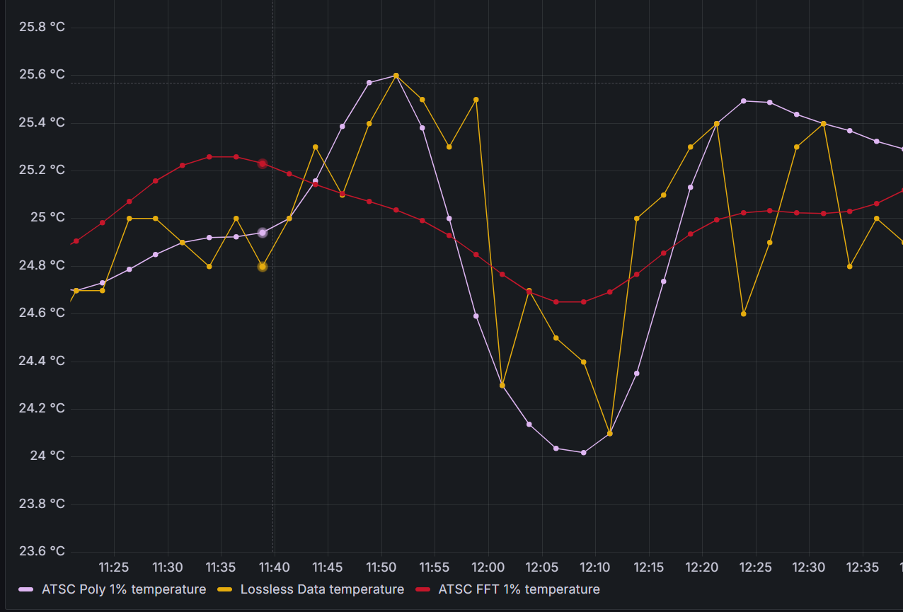

Latency results

Latency benchmarks were conducted at ~70% of the observed peak throughput (as above) to simulate realistic production traffic.
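As a quick check of which peak the ~70% refers to, the rates used in the latency table below work out to roughly 70% of the peaks measured with PrivateLink and Shotover in the throughput table. A small Python sketch of that arithmetic, with figures copied from both tables:

```python
# Peak ops/s with PrivateLink and Shotover (throughput table) and the
# ops/s used for the latency runs (latency table), keyed by cluster size.
peak_ops = {3: 16608, 6: 33585, 9: 51792}
latency_run_ops = {3: 11630, 6: 23510, 9: 36255}

for nodes, peak in peak_ops.items():
    fraction = latency_run_ops[nodes] / peak
    print(f"{nodes} nodes: {fraction:.1%} of peak")
# 3 nodes: 70.0% of peak
# 6 nodes: 70.0% of peak
# 9 nodes: 70.0% of peak
```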

| Operation | Ops/second | Setup | Mean Latency (ms) | Median Latency (ms) | P95 Latency (ms) | P99 Latency (ms) |
|---|---|---|---|---|---|---|
| Mixed-small (3 Nodes) | 11630 | Non-PrivateLink | 9.90 | 3.2 | 53.7 | 119.4 |
| Mixed-small (3 Nodes) | 11630 | PrivateLink | 9.50 | 3.6 | 48.4 | 118.8 |
| Mixed-small (6 Nodes) | 23510 | Non-PrivateLink | 6 | 2.3 | 27.2 | 79.4 |
| Mixed-small (6 Nodes) | 23510 | PrivateLink | 9.10 | 3.4 | 45.4 | 104.9 |
| Mixed-small (9 Nodes) | 36255 | Non-PrivateLink | 5.5 | 2.4 | 21.8 | 67.6 |
| Mixed-small (9 Nodes) | 36255 | PrivateLink | 11.9 | 2.7 | 77.1 | 141.2 |

Results indicate that at lower throughput levels, AWS PrivateLink introduced minimal to negligible overhead. However, at higher operation rates, we observed increased latency, most notably at p99, likely due to network-level factors or Shotover.
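The size of the overhead is easier to see as percentage changes. This Python sketch recomputes them from the latency table above for the mean and p99 columns; the data layout and helper name are ours.

```python
# (mean ms, p99 ms) from the latency table, keyed by cluster size:
# cluster size -> {"non": non-PrivateLink, "pl": PrivateLink}
latency = {
    3: {"non": (9.90, 119.4), "pl": (9.50, 118.8)},
    6: {"non": (6.0, 79.4), "pl": (9.10, 104.9)},
    9: {"non": (5.5, 67.6), "pl": (11.9, 141.2)},
}


def pct_change(baseline, value):
    """Percentage change from the non-PrivateLink baseline."""
    return 100.0 * (value - baseline) / baseline


for nodes, runs in latency.items():
    (mean_non, p99_non), (mean_pl, p99_pl) = runs["non"], runs["pl"]
    print(
        f"{nodes} nodes: mean {pct_change(mean_non, mean_pl):+.1f}%, "
        f"p99 {pct_change(p99_non, p99_pl):+.1f}%"
    )
# 3 nodes: mean -4.0%, p99 -0.5%
# 6 nodes: mean +51.7%, p99 +32.1%
# 9 nodes: mean +116.4%, p99 +108.9%
```

At the 3-node rate there is essentially no overhead, while p99 roughly doubles at the 9-node rate.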

The increase in latency is expected as AWS PrivateLink introduces an additional hop to route traffic securely, which can impact latencies, particularly under heavy load. For the vast majority of applications, the observed latencies remain within acceptable ranges. However, for latency-sensitive workloads, we recommend adding more nodes (for high load cases) to help mitigate the impact of the additional network hop introduced by PrivateLink.

As with any generic benchmarking results, performance may vary depending on the specific data model, workload characteristics, and environment. The results presented here are based on a specific experimental setup using standard configurations and should primarily be used to compare the relative performance of PrivateLink and non-PrivateLink networking under similar conditions.

Why choose AWS PrivateLink with NetApp Instaclustr?

NetApp’s commitment to innovation means you benefit from cutting-edge technology combined with ease of use. With AWS PrivateLink support on our platform, customers gain:

- Enhanced security: All traffic stays private, never touching the internet.

- Simplified networking: No need to manage complex CIDR overlaps.

- Enterprise scalability: Handles sizable clusters effortlessly.

By addressing challenges, such as the NLB listener cap and private-to-VPC IP translation, we’ve created a solution that balances efficiency, security, and scalability.

Experience PrivateLink today

The integration of AWS PrivateLink with Apache Cassandra® is now generally available with production-ready SLAs for our customers. Log in to the Console to create a Cassandra cluster with support for AWS PrivateLink with just a few clicks today. Whether you’re managing sensitive workloads or demanding performance at scale, this feature delivers unmatched value.

Want to see it in action? Book a free demo today and experience the Shotover-powered magic of AWS PrivateLink firsthand.

Resources

- Getting started: Visit the documentation to learn how to create an AWS PrivateLink-enabled Apache Cassandra cluster on the Instaclustr Managed Platform.

- Connecting clients: Already created a Cassandra cluster with AWS PrivateLink? Read about how to connect Cassandra clients in one VPC to an AWS PrivateLink-enabled Cassandra cluster on the Instaclustr Platform.

- General availability announcement: For more details, read our General Availability announcement on AWS PrivateLink support for Cassandra.

The post Integrating support for AWS PrivateLink with Apache Cassandra® on the NetApp Instaclustr Managed Platform appeared first on Instaclustr.

Compaction Strategies, Performance, and Their Impact on Cassandra Node Density

This is the third post in my series on optimizing Apache Cassandra for maximum cost efficiency through increased node density. In the first post, I examined how streaming operations impact node density and laid out the groundwork for understanding why higher node density leads to significant cost savings. In the second post, I discussed how compaction throughput is critical to node density and introduced the optimizations we implemented in CASSANDRA-15452 to improve throughput on disaggregated storage like EBS.

Cassandra Compaction Throughput Performance Explained

This is the second post in my series on improving node density and lowering costs with Apache Cassandra. In the previous post, I examined how streaming performance impacts node density and operational costs. In this post, I’ll focus on compaction throughput and on a recent optimization in Cassandra 5.0.4, CASSANDRA-15452, that significantly improves it.

This post assumes some familiarity with Apache Cassandra storage engine fundamentals. The documentation has a nice section covering the storage engine if you’d like to brush up before reading this post.

CEP-24 Behind the scenes: Developing Apache Cassandra®’s password validator and generator

Introduction: The need for an Apache Cassandra® password validator and generator

Here’s the problem: users have always been able to create whatever password they wanted in Cassandra, from straightforward to incredibly complex and everything in between, and this ultimately created a noticeable security vulnerability.

While organizations might have internal processes for generating secure passwords that adhere to their own security policies, Cassandra itself had no means to enforce these standards. Worse, even if a password initially met internal security guidelines, users could later downgrade it to a less secure one simply by running an “ALTER ROLE” statement.

When internal password requirements are enforced for individuals, users bear the additional burden of creating compliant passwords. This inevitably involves a lot of trial and error before a password finally satisfies complex security rules.

But what if Cassandra could automatically create passwords that meet all bespoke security requirements, without any manual effort from users or system operators?

That’s why we developed CEP-24: Password validation/generation. We recognized that, with the right approach, the complexity of secure password management could be significantly reduced (or eliminated entirely), improving both security and user experience at the same time.

The Goals of CEP-24

A Cassandra Enhancement Proposal (or CEP) is a structured process for proposing, creating, and ultimately implementing new features for the Cassandra project. All CEPs are thoroughly vetted among the Cassandra community before they are officially integrated into the project.

These were the key goals we established for CEP-24:

- Introduce a way to enforce password strength upon role creation or role alteration.

- Provide a reference implementation of a password validator that adheres to a recommended password strength policy, for Cassandra users to use out of the box.

- Emit a warning (and proceed) or just reject “create role” and “alter role” statements when the provided password does not meet a certain security level, based on user configuration of Cassandra.

- Make it possible to implement a custom password validator with its own policy, whatever it might be, through a modular, pluggable mechanism.

- Provide a way for Cassandra to generate a password which would pass the subsequent validation for use by the user.
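To make the warn-or-reject goal concrete, here is a minimal Python sketch of a policy validator with two tiers of thresholds. It is not CEP-24's code or Cassandra's API: the Verdict enum, the threshold values, the four character classes, and the apply_password helper (standing in for CREATE ROLE / ALTER ROLE handling) are all hypothetical.

```python
import string
from enum import Enum


class Verdict(Enum):
    OK = "ok"
    WARN = "warn"
    FAIL = "fail"


# Hypothetical policy thresholds; the real CEP-24 defaults may differ.
WARN_MIN_LENGTH, FAIL_MIN_LENGTH = 12, 8
WARN_MIN_CLASSES, FAIL_MIN_CLASSES = 4, 3


def character_classes(password):
    """Count how many of lowercase, uppercase, digits, punctuation appear."""
    pools = (string.ascii_lowercase, string.ascii_uppercase,
             string.digits, string.punctuation)
    return sum(any(c in pool for c in password) for pool in pools)


def validate(password):
    """Fail below the hard thresholds, warn below the soft ones."""
    length, classes = len(password), character_classes(password)
    if length < FAIL_MIN_LENGTH or classes < FAIL_MIN_CLASSES:
        return Verdict.FAIL
    if length < WARN_MIN_LENGTH or classes < WARN_MIN_CLASSES:
        return Verdict.WARN
    return Verdict.OK


def apply_password(role, password, reject_on_warn=False):
    """Hypothetical stand-in for CREATE ROLE / ALTER ROLE password handling."""
    verdict = validate(password)
    if verdict is Verdict.FAIL or (verdict is Verdict.WARN and reject_on_warn):
        raise ValueError(f"password for role {role!r} rejected: {verdict.value}")
    if verdict is Verdict.WARN:
        return f"warning: weak password accepted for role {role!r}"
    return f"password set for role {role!r}"


print(validate("password"))                 # Verdict.FAIL
print(validate("Passw0rd!"))                # Verdict.WARN
print(validate("Correct-Horse-7-Battery"))  # Verdict.OK
```

The reject_on_warn flag models the goal of letting operators choose between emitting a warning and rejecting the statement outright.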

The Cassandra Password Validator and Generator builds upon an established framework in Cassandra called Guardrails, which was originally implemented under CEP-3.

The password validator implements a custom guardrail introduced as part of CEP-24. A custom guardrail can validate and generate values of arbitrary types when properly implemented. In the CEP-24 context, the password guardrail provides CassandraPasswordValidator by extending ValueValidator, while passwords are generated by CassandraPasswordGenerator by extending ValueGenerator. Both components work with passwords as String type values.
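The split between a value validator and a value generator for arbitrary types can be illustrated with Python generics. This is a hypothetical sketch, not Cassandra's ValueValidator or ValueGenerator API; BaseValidator, BaseGenerator and the Simple* classes are our own names. The generator uses rejection sampling (draw random candidates until one passes the paired validator), which is our choice for illustration and not necessarily how CassandraPasswordGenerator works.

```python
import secrets
import string
from abc import ABC, abstractmethod
from typing import Generic, TypeVar

T = TypeVar("T")


class BaseValidator(ABC, Generic[T]):
    """Hypothetical validator for values of an arbitrary type T."""

    @abstractmethod
    def is_valid(self, value: T) -> bool: ...


class BaseGenerator(ABC, Generic[T]):
    """Hypothetical generator for values of an arbitrary type T."""

    @abstractmethod
    def generate(self) -> T: ...


class SimplePasswordValidator(BaseValidator[str]):
    def __init__(self, min_length: int = 12):
        self.min_length = min_length

    def is_valid(self, value: str) -> bool:
        # Require a minimum length and at least one character of each class.
        return (len(value) >= self.min_length
                and any(c in string.ascii_lowercase for c in value)
                and any(c in string.ascii_uppercase for c in value)
                and any(c in string.digits for c in value)
                and any(c in string.punctuation for c in value))


class SimplePasswordGenerator(BaseGenerator[str]):
    ALPHABET = string.ascii_letters + string.digits + string.punctuation

    def __init__(self, validator: BaseValidator[str], length: int = 16,
                 max_attempts: int = 1000):
        self.validator = validator
        self.length = length
        self.max_attempts = max_attempts

    def generate(self) -> str:
        # Rejection sampling: draw cryptographically random candidates and
        # return the first one the paired validator accepts.
        for _ in range(self.max_attempts):
            candidate = "".join(secrets.choice(self.ALPHABET)
                                for _ in range(self.length))
            if self.validator.is_valid(candidate):
                return candidate
        raise RuntimeError("no valid password found; check length vs. policy")


validator = SimplePasswordValidator()
generator = SimplePasswordGenerator(validator)
password = generator.generate()
print(len(password), validator.is_valid(password))  # 16 True
```

Pairing the generator with a validator instance means a generated password passes the same check that a user-supplied one would.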

Password validation and generation are configured in the cassandra.yaml file under the password_validator section. Let’s explore the key configuration properties available. First, the class_name and generator_class_name parameters specify which validator and generator classes will be used to validate and generate passwords respectively.

Cassandra ships CassandraPasswordValidator and CassandraPasswordGenerator out of the box. However, if a particular enterprise decides that it needs something very custom, it is free to implement its own validator, put it on Cassandra’s class path, and reference it in the configuration via the class_name parameter. The same goes for the generator, via the generator_class_name parameter.
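Cassandra loads these classes by name from its class path. The same pluggable pattern can be sketched in Python by resolving a dotted name with importlib. The config keys mirror the class_name and generator_class_name parameters described above, but the acme_security module, its classes, and the build_password_components helper are hypothetical. The fake module is registered in sys.modules only to simulate placing a custom plugin on the path.

```python
import importlib
import secrets
import string
import sys
import types


def load_class(qualified_name):
    """Resolve 'package.module.ClassName' to the class object."""
    module_name, _, class_name = qualified_name.rpartition(".")
    if not module_name:
        raise ValueError(f"expected a fully qualified name, got {qualified_name!r}")
    return getattr(importlib.import_module(module_name), class_name)


def build_password_components(config):
    """Instantiate the validator and generator named in the config."""
    validator_cls = load_class(config["class_name"])
    generator_cls = load_class(config["generator_class_name"])
    return validator_cls(), generator_cls()


# A hypothetical enterprise plugin with its own (deliberately simple) policy.
class AcmeValidator:
    def is_valid(self, password):
        return len(password) >= 20


class AcmeGenerator:
    def generate(self):
        alphabet = string.ascii_letters + string.digits
        return "".join(secrets.choice(alphabet) for _ in range(24))


# Simulate putting the plugin on the path by registering a module object.
plugin = types.ModuleType("acme_security")
plugin.AcmeValidator = AcmeValidator
plugin.AcmeGenerator = AcmeGenerator
sys.modules["acme_security"] = plugin

config = {  # in Cassandra these settings live under password_validator
    "class_name": "acme_security.AcmeValidator",
    "generator_class_name": "acme_security.AcmeGenerator",
}
validator, generator = build_password_components(config)
print(validator.is_valid(generator.generate()))  # True
```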

CEP-24 provides implementations of the validator and generator that the Cassandra team believes will satisfy the requirements of most users. These default implementations address common password security needs. However, the framework is designed with flexibility in mind, allowing organizations to implement custom validation and generation rules that align with their specific security policies and business requirements.