AxonOps Review - An Operations Platform for Apache Cassandra

Note: Before we dive into this review of AxonOps and their offerings, it’s important to note that this blog post is part of a paid engagement in which I provided product feedback. AxonOps had no influence or say over the content of this post and did not have access to it prior to publishing.

In the ever-evolving landscape of data management, companies are constantly seeking solutions that can simplify the complexities of database operations. One such player in the market is AxonOps, a company that specializes in providing tooling for operating Apache Cassandra.

Benchmarking Apache Cassandra with tlp-stress

This post will introduce you to tlp-stress, a tool for benchmarking Apache Cassandra. I started tlp-stress back when I was working at The Last Pickle. At the time, I was spending a lot of time helping teams identify the root cause of performance issues and needed a way of benchmarking. I found cassandra-stress to be difficult to use and configure, so I ended up writing my own tool that worked in a manner that I found to be more useful. If you’re looking for a tool to assist you in benchmarking Cassandra, and you’re looking to get started quickly, this might be the right tool for you.

Back to Consulting!

Saying “it’s been a while since I wrote anything here” would be an understatement, but I’m back, with a lot to talk about in the upcoming months.

First off - if you’re not aware, I continued writing, but on The Last Pickle blog. There are quite a few posts there; here are the most interesting ones:

- 14 Things To Do When Setting Up a New Cassandra Cluster

- Apache Cassandra Performance Tuning - Compression with Mixed Workloads

- Garbage Collection Tuning for Apache Cassandra

- Analyzing Cassandra Performance with Flame Graphs

- Cassandra Time Series Data Modeling For Massive Scale

Now the fun part - I’ve spent the last 3 years at Apple, then Netflix, neither of which gave me much time to continue my writing. As of this month, I’m officially no longer at Netflix and have started Rustyrazorblade Consulting!

Building a 100% ScyllaDB Shard-Aware Application Using Rust

I wrote a web transcript of the talk I gave with my colleagues Joseph and Yassir at [Scylla Su...

Learning Rust the hard way for a production Kafka+ScyllaDB pipeline

This is the web version of the talk I gave at [Scylla Summit 2022](https://www.scyllad...

On Scylla Manager Suspend & Resume feature

!!! warning "Disclaimer" This blog post is neither a rant nor intended to undermine the great work that...

Renaming and reshaping Scylla tables using scylla-migrator

We have recently faced a problem where some of the first Scylla tables we created on our main production cluster were not in line any more with the evolved s...

Python scylla-driver: how we unleashed the Scylla monster's performance

At Scylla summit 2019 I had the chance to meet Israel Fruchter and we dreamed of working on adding **shard...

Scylla Summit 2019

I've had the pleasure to attend again and present at the Scylla Summit in San Francisco and the honor to be awarded the...

A Small Utility to Help With Extracting Code Snippets

It’s been a while since I’ve written anything here. Part of the reason is the writing I’ve done over on the blog at The Last Pickle. In the last few years, I’ve written about our tlp-stress tool, tips for new Cassandra clusters, and a variety of performance posts related to Compaction, Compression, and GC Tuning.

The other reason is the eight blog posts I’ve got in the draft folder. One of the reasons why there are so many is the way I write. If the post is programming related, I usually start with the post, then start coding, pull snippets out, learn more, rework the post, then rework snippets. It’s an annoying, manual process. The posts sitting in my draft folder have incomplete code, and reworking the code is a tedious process that I get annoyed with, leading to abandoned posts.

Scylla: four ways to optimize your disk space consumption

We recently had to face free disk space outages on some of our scylla clusters and we learnt some very interesting things while outlining some improvements t...

Scylla Summit 2018 write-up

It's been almost one month since I had the chance to attend and speak at Scylla Summit 2018 so I'm reliev...

Authenticating and connecting to a SSL enabled Scylla cluster using Spark 2

This quick article is a wrap up for reference on how to connect to ScyllaDB using Spark 2 when authentication and SSL are enforced for the clients on the...

A botspot story

I felt like sharing a recent story that allowed us to identify a bot in a haystack thanks to Scylla.

...

Evaluating ScyllaDB for production 2/2

In my previous blog post, I shared [7 lessons on our experience in evaluating Scylla](https://www.ultrabug.fr...

Accessing Private Variables in the JVM

In this post I’ll discuss an uncommonly used but useful technique: accessing variables and methods that have been declared private in the JVM, using the Apache Commons Lang library to work around the restriction. The description from the project page reads:

The standard Java libraries fail to provide enough methods for manipulation of its core classes. Apache Commons Lang provides these extra methods.

A couple weeks ago I was working on a project that required parsing some CQL statements. There isn’t a standard parser separate from the Cassandra project at the moment, so I decided to pull in the entirety of cassandra-all from maven central. The parser in Cassandra isn’t really designed to be used as a library. In particular, the org.apache.cassandra.cql3.QueryProcessor has a parseStatement(String) call, but the ParsedStatement that’s returned doesn’t expose any of the private variables via getters. I felt particularly determined for some reason, so I decided to investigate a workaround.

Migration to Hugo

After almost five years of using Pelican as my static site generator, I’ve migrated to the Hugo tool. While I enjoyed Pelican and its flexibility, its performance started to bother me when building a site from scratch. Depending on what else was running on my laptop, a full build could take 15-20 seconds. This isn’t the end of the world, but in comparison Hugo takes less than 100 milliseconds.

If it was simply a matter of build time, I may not have really cared that much, but I’ve been using Hugo to build the site and documentation for Reaper, the open source repair tool we maintain at The Last Pickle.

Evaluating ScyllaDB for production 1/2

I have recently been conducting a quite deep evaluation of ScyllaDB to find out if we could benefit from this database in some of...

Working with gRPC, Kotlin and Gradle

Edit: The source code for this post is located on GitHub

Sometimes when I travel I end up trying to learn something completely new. For a while I was playing with Rust, Capn Proto, Scala, or I’d start a throwaway project at an airport and just tinker.

My passion is and has always been databases. I’ve maintained this blog for roughly a decade, starting with MySQL for the first part of my career but moving to Apache Cassandra several years ago, and am now a committer and member of the PMC.

I Am Still Writing!

If you were to take a look at my blog, you’d think I’d flipped a table and left the tech industry. Not the case at all. I’m still writing, but less frequently, and on the TLP blog. I intend to start writing here again, but the material will likely focus around topics other than Cassandra, since I’m already writing about it elsewhere. Here are the posts I’ve authored in the last 6 months or so:

Instaclustr Now Supporting Apache Cassandra 3.7 as LTS

Instaclustr announced on the Apache Cassandra user list that they are making their supported branch of the Cassandra 3.7 tick tock release publicly available (see GitHub repo). Bug fixes that go into 3.8, 3.9, etc. will be backported to the Instaclustr LTS. You can read the blog post about the decision.

Some people I’ve talked to are concerned about having different commercial entities doing long term supported releases, and this concern is understandable. The obvious preference is for the project maintainers to handle this and make an official LTS available. The big concern here is that third party LTS could fracture the project in the long term.

Rustyrazorblade Radio, A Distributed System Podcast

I haven’t blogged in a while, which is a bummer because I was determined to write an article a week for the entire year. I haven’t even come remotely close to that goal.

I’ve recently switched jobs from DataStax to Consulting with The Last Pickle, which has been pretty hectic. Add to that 3 presentations at the Cassandra Summit and the end result is very little time for personal projects.

Working Relationally With Cassandra

I’ve spent the last 4 years working in the big data world with Cassandra because it’s the only practical solution if you have a requirement to scale out, uptime is a priority, and you need predictable performance. I’ve heard different ways of describing where Cassandra fits in your architecture, but I think the best way to think of it is close to your customer. Think of the servers your mobile apps communicate with or what holds your product inventory.

Cassandra Dataset Manager Preview 1 Released

One of the problems of learning a new database is getting used to a new way of data modeling. PostgreSQL looks different from Redis, which is different from a graph, and is different from Cassandra.

Cassandra Dataset Manager aims to reduce the time spent in a frustrating trial and error process trying to learn proper data modeling techniques for Apache Cassandra and Datastax Enterprise by providing curated data models which have been designed by professionals with years of experience. Think of it as a package manager for Cassandra data models and sample data.

Cassandra Dataset Manager Video Preview

I posted a short preview showing off some of the work I’ve been doing recently on Cassandra Dataset Manager, a tool to help new Cassandra users learn how to create proper data models.

There’s documentation, but it’s still under heavy development.

Cassandra 3.3 Released

Apache Cassandra 3.3 was released last week. As per the Tick Tock release schedule, this release is focused on bug fixes and no new features were introduced. For practical purposes, consider this a bug fix release to Cassandra 3.2. All told there were almost 50 bugs fixed in this release. Many of the bugs fixed in this version also applied to Cassandra 3.0.3, which was also released last week.

Cassandra Secondary Index Preview #1

If you’ve looked into using Cassandra at all, you probably have heard plenty of warnings about its secondary indexes. If you’ve come from a relational background, you may have been surprised when you were told to create multiple tables (materialized views) instead of relying on indexes. This is because Cassandra is a distributed database, and the impact of doing a query that hits your entire cluster is you lose your linear scalability. If you’re capped at 25K queries per second per server, it doesn’t matter if you have one or a thousand servers, you’re still only able to handle 25k queries per second, total.
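To make the arithmetic concrete, here is a rough back-of-the-envelope sketch in Python. It is a simplified model, not a benchmark: it assumes every node has the same fixed capacity and that each query costs one unit of work on every node it touches. The function name `cluster_qps` and its parameters are made up for illustration.

```python
def cluster_qps(nodes: int, per_node_qps: int, nodes_touched_per_query: int) -> float:
    """Rough upper bound on cluster-wide queries per second.

    Each query consumes capacity on every node it touches, so the total
    capacity (nodes * per_node_qps) is divided by the fan-out.
    """
    if not 1 <= nodes_touched_per_query <= nodes:
        raise ValueError("a query must touch between 1 and `nodes` nodes")
    return nodes * per_node_qps / nodes_touched_per_query


# A query answered by a single node scales with the cluster size...
single_node_query = cluster_qps(nodes=1000, per_node_qps=25_000, nodes_touched_per_query=1)
# ...while a query that hits every node is capped at one node's capacity.
full_cluster_query = cluster_qps(nodes=1000, per_node_qps=25_000, nodes_touched_per_query=1000)
```

Under these assumptions, a thousand nodes give a ceiling of 25 million queries per second when each query touches one node, and only 25K when each query touches all of them.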

Async Python and Cassandra with Gevent

Introduction

Building a web app relying on database calls with CPython (the standard Python distribution) is pretty easy, but can suffer from performance problems. Python itself isn’t particularly fast, and in 2.x, its concurrency story is especially weak.

For starters, there’s the dreaded GIL. The GIL prevents us from taking advantage of multi core systems, so even if we try to use threads we’re missing out on their main performance benefit, which is parallel computation.

Cassandra 3.2 Overview

The 3.0 release of Apache Cassandra marked an important milestone. One of the biggest updates was CASSANDRA-8099, the JIRA to modernize the storage engine. It was also the first release in the new Tick Tock cycle, which lands a new release of Cassandra every month. Even .x numbers (such as 3.2) are feature releases, and odd .x numbers (such as 3.1) are bug fix releases. Cassandra 3.2, released about a week ago, is the first feature release following 3.0. This post will briefly cover the changes.
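The numbering rule is simple enough to express in a few lines. The following is only an illustrative sketch of the even/odd convention described above for the 3.x series; `tick_tock_release_type` is a hypothetical helper, not part of any Cassandra tooling.

```python
def tick_tock_release_type(version: str) -> str:
    """Classify a 3.x Tick Tock version such as "3.2" by its minor number."""
    parts = version.split(".")
    major, minor = int(parts[0]), int(parts[1])
    if major != 3:
        raise ValueError("this sketch only models the 3.x series described here")
    # Even minor numbers are feature releases, odd ones are bug fix releases.
    return "feature" if minor % 2 == 0 else "bug fix"
```

For example, `tick_tock_release_type("3.2")` yields a feature release and `tick_tock_release_type("3.1")` a bug fix release.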

Introducing KillrAnswers

The last few months have been a non stop whirlwind of traveling and speaking. I’ve been very fortunate to have spoken at Strata New York, given a couple of sessions at the Cassandra Summit, and even had a few minutes on stage for the Cassandra Summit keynote (I’m at minute 22 with Luke Tillman). When I have time, I end up hacking on random projects. For example, a couple months ago I was working on a recommendation engine for KillrVideo. I also end up playing with bleeding edge builds of Cassandra and Spark.

Enhance Apache Cassandra Logging

Cassandra usually writes all of its logs to a single system.log file. Cassandra 2.0 uses the old log4j 1.2, and since 2.1 it uses logback, which of course has a different syntax :)

Logs can be enhanced with some configuration. These explanations work with Cassandra 2.0.x and Cassandra 2.1.x; I haven’t tested other versions yet.

I wanted to split logs in different files, depending on their “sources” (repair, compaction, tombstones etc), to ease debugging, while keeping the system.log as usual.

For example, to declare 2 new files to handle, say Repair and Tombstones logs :

Cassandra 2.0 :

You need to declare each new log file in the log4j-server.properties file.

[...]

## Repair

log4j.appender.Repair=org.apache.log4j.RollingFileAppender

log4j.appender.Repair.maxFileSize=20MB

log4j.appender.Repair.maxBackupIndex=50

log4j.appender.Repair.layout=org.apache.log4j.PatternLayout

log4j.appender.Repair.layout.ConversionPattern=%5p [%t] %d{ISO8601} %F (line %L) %m%n

## Edit the next line to point to your logs directory

log4j.appender.Repair.File=/var/log/cassandra/repair.log

## Tombstones

log4j.appender.Tombstones=org.apache.log4j.RollingFileAppender

log4j.appender.Tombstones.maxFileSize=20MB

log4j.appender.Tombstones.maxBackupIndex=50

log4j.appender.Tombstones.layout=org.apache.log4j.PatternLayout

log4j.appender.Tombstones.layout.ConversionPattern=%5p [%t] %d{ISO8601} %F (line %L) %m%n

## Edit the next line to point to your logs directory

log4j.appender.Tombstones.File=/home/log/cassandra/tombstones.log
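Since every appender block follows the same shape, it can be generated rather than copied by hand. The sketch below simply renders the same log4j 1.2 properties shown above for a given name and path; `log4j_appender` is a hypothetical helper, and its defaults (20MB, 50 backups) are taken from the example.

```python
def log4j_appender(name: str, log_file: str, max_size: str = "20MB", max_backups: int = 50) -> str:
    """Render a log4j 1.2 RollingFileAppender block as properties text."""
    prefix = f"log4j.appender.{name}"
    lines = [
        f"## {name}",
        f"{prefix}=org.apache.log4j.RollingFileAppender",
        f"{prefix}.maxFileSize={max_size}",
        f"{prefix}.maxBackupIndex={max_backups}",
        f"{prefix}.layout=org.apache.log4j.PatternLayout",
        # Doubled braces produce the literal %d{ISO8601} in the output.
        f"{prefix}.layout.ConversionPattern=%5p [%t] %d{{ISO8601}} %F (line %L) %m%n",
        f"{prefix}.File={log_file}",
    ]
    return "\n".join(lines)


print(log4j_appender("Repair", "/var/log/cassandra/repair.log"))
```

Generating the block this way keeps the appender name and the file name in sync, which is easy to get wrong when copying and pasting.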

Cassandra 2.1 :

It is in the logback.xml file.

<appender name="Repair" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${cassandra.logdir}/repair.log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">

<fileNamePattern>${cassandra.logdir}/repair.log.%i.zip</fileNamePattern>

<minIndex>1</minIndex>

<maxIndex>20</maxIndex>

</rollingPolicy>

<triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">

<maxFileSize>20MB</maxFileSize>

</triggeringPolicy>

<encoder>

<pattern>%-5level [%thread] %date{ISO8601} %F:%L - %msg%n</pattern>

<!-- old-style log format

<pattern>%5level [%thread] %date{ISO8601} %F (line %L) %msg%n</pattern>

-->

</encoder>

</appender>

<appender name="Tombstones" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${cassandra.logdir}/tombstones.log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">

<fileNamePattern>${cassandra.logdir}/tombstones.log.%i.zip</fileNamePattern>

<minIndex>1</minIndex>

<maxIndex>20</maxIndex>

</rollingPolicy>

<triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">

<maxFileSize>20MB</maxFileSize>

</triggeringPolicy>

<encoder>

<pattern>%-5level [%thread] %date{ISO8601} %F:%L - %msg%n</pattern>

<!-- old-style log format

<pattern>%5level [%thread] %date{ISO8601} %F (line %L) %msg%n</pattern>

-->

</encoder>

</appender>

Now that these new files are declared, we need to fill them with logs. To do that, route the relevant Java classes to the right file. For example, to send messages from the class org.apache.cassandra.db.filter.SliceQueryFilter at level WARN to the Tombstones file, add:

Cassandra 2.0 :

log4j.logger.org.apache.cassandra.db.filter.SliceQueryFilter=WARN,Tombstones

Cassandra 2.1 :

<logger name="org.apache.cassandra.db.filter.SliceQueryFilter" level="WARN">

<appender-ref ref="Tombstones"/>

</logger>

It’s an on-the-fly configuration, so there is no need to restart Cassandra!

Now you will have a dedicated file for each kind of log.

A list of interesting Cassandra classes :

org.apache.cassandra.service.StorageService, WARN : Repair

org.apache.cassandra.net.OutboundTcpConnection, DEBUG : Repair (haha, these fucking stuck repairs)

org.apache.cassandra.repair, INFO : Repair

org.apache.cassandra.db.HintedHandOffManager, DEBUG : Repair

org.apache.cassandra.streaming.StreamResultFuture, DEBUG : Repair

org.apache.cassandra.cql3.statements.BatchStatement, WARN : Statements

org.apache.cassandra.db.filter.SliceQueryFilter, WARN : Tombstones

You can find out which Java class a log message comes from by adding “%c” to the log4j/logback “ConversionPattern”:

org.apache.cassandra.db.ColumnFamilyStore INFO [BatchlogTasks:1] 2015-09-18 16:43:48,261 ColumnFamilyStore.java:939 - Enqueuing flush of batchlog: 226172 (0%) on-heap, 0 (0%) off-heap

org.apache.cassandra.db.Memtable INFO [MemtableFlushWriter:4213] 2015-09-18 16:43:48,262 Memtable.java:347 - Writing Memtable-batchlog@1145616338(195.566KiB serialized bytes, 205 ops, 0%/0% of on/off-heap limit)

org.apache.cassandra.db.Memtable INFO [MemtableFlushWriter:4213] 2015-09-18 16:43:48,264 Memtable.java:393 - Completed flushing /home/cassandra/data/system/batchlog/system-batchlog-tmp-ka-4267-Data.db; nothing needed to be retained. Commitlog position was ReplayPosition(segmentId=1442331704273, position=17281204)
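Once the logger name is in each line, it is easy to see which classes are the noisiest. Here is a minimal Python sketch that counts messages per class. It assumes “%c” was added at the very start of the 2.1 pattern, as in the sample lines above; `LINE_RE` and `count_by_logger` are names invented for this example.

```python
import re
from collections import Counter

# logger, level, [thread], date, File.java:line, " - ", message
LINE_RE = re.compile(
    r"^(?P<logger>\S+)\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"\[(?P<thread>[^\]]+)\]\s+"
    r"(?P<date>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})\s+"
    r"(?P<source>\S+)\s+-\s+"
    r"(?P<message>.*)$"
)


def count_by_logger(lines):
    """Count log lines per logger name, skipping lines that do not match."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            counts[match.group("logger")] += 1
    return counts
```

The classes at the top of `count_by_logger(open_file).most_common()` are the first candidates for a dedicated appender.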

You can disable “additivity” (i.e. avoid also writing the messages to system.log, for example) in log4j for a specific class by adding:

log4j.additivity.org.apache.cassandra.db.filter.SliceQueryFilter=false

For logback, you can add additivity="false" to <logger .../> elements.

To migrate from log4j logs to logback.xml, you can look at http://logback.qos.ch/translator/

Sources :

- http://docs.datastax.com/en/cassandra/2.1/cassandra/configuration/configLoggingLevels_r.html

- http://docs.datastax.com/en/cassandra/2.0/cassandra/configuration/configLoggingLevels_t.html

- https://logging.apache.org/log4j/1.2/manual.html

- http://logback.qos.ch/manual/appenders.html

Note: you can add http://blog.alteroot.org/feed.cassandra.xml to your rss aggregator to follow all my Cassandra posts :)

Migrating from MySQL to Cassandra Using Spark

MySQL is a popular choice for new projects. It’s a flexible database that’s easy to set up and start querying. There’s loads of documentation, examples and frameworks it works with, such as Wordpress, Pandas, Ruby on Rails, and Django.

From the above paragraph it reads like a pretty fantastic database, and at small scale it can be great. The problem arises when you need to scale past a single server or have high availability needs. MySQL’s solution to both of these needs is replication. Replication is ok at handling read heavy workloads in a single datacenter, but it falls on its face under heavy writes or if you need multiple datacenters. Fortunately Cassandra excels at scalability and high availability. It’s a common story for people to migrate from a relational database to Cassandra for one or both of these reasons. (For further reading on choosing Cassandra even with small datasets, read Matt Kennedy’s Little Big Data article.)

Cassandra + PySpark DataFrames revisited

A little while back I wrote a post on working with DataFrames from PySpark, using Cassandra as a data source. DataFrames are, in my opinion, a fantastic, flexible api that makes Spark roughly 14 orders of magnitude nicer to work with as opposed to RDDs. When I wrote the original blog post, the only way to work with DataFrames from PySpark was to get an RDD and call toDF().

Sounds freaking amazing - what’s the problem?

Joining DataFrames with Pandas

In this post I’ll walk through the process of reading in various plain text database files using Pandas, and then joining together the different DataFrames. All my work was done through an IPython notebook.

I decided to mess around with the labor statistics database that’s up on Amazon. My end goal was to save all the relevant information into Cassandra for future analysis with PySpark. If the files were bigger, I’d do all the initial loading with PySpark, but they’re pretty small and Pandas has a lot of functionality that’s still missing on the Spark side.

You're Already Eventually Consistent

People new to Apache Cassandra are often concerned about the phrase “eventual consistency.” It’s one of those things that seems so foreign, especially if you’re coming from a relational database. When I am with my RDBMS I get wrapped in the sweet cocoon of ACID transactions!

Is the entire system really safe though? Are we perfectly ACID throughout our entire application? Probably not. Let’s see how it breaks down and where the tradeoffs are.

Spark Streaming With Python and Kafka

Last week I wrote about using PySpark with Cassandra, showing how we can take tables out of Cassandra and easily apply arbitrary filters using DataFrames. This is great if you want to do exploratory work or operate on large datasets. What if you’re interested in ingesting lots of data and getting near real time feedback into your application? Enter Spark Streaming.

Spark streaming is the process of ingesting and operating on data in microbatches, which are generated repeatedly on a fixed window of time. You can visualize it like this:
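The idea of a microbatch can be illustrated without Spark at all. The following plain-Python sketch groups timestamped events into fixed windows; it is not the Spark Streaming API, and `microbatch` along with its parameters is a made-up name for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def microbatch(events: Iterable[Tuple[float, str]], window_seconds: float) -> Dict[int, List[str]]:
    """Group (timestamp, payload) events into fixed-size time windows.

    The key of each batch is the window index: timestamp // window_seconds.
    """
    batches: Dict[int, List[str]] = defaultdict(list)
    for timestamp, payload in events:
        batches[int(timestamp // window_seconds)].append(payload)
    return dict(batches)


events = [(0.1, "a"), (0.9, "b"), (1.2, "c"), (3.5, "d")]
batches = microbatch(events, window_seconds=1.0)
# batches == {0: ["a", "b"], 1: ["c"], 3: ["d"]}
```

Each list is what a streaming job would process as one unit; in a real system the batches are produced continuously as time passes rather than computed after the fact.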

On The Bleeding Edge - PySpark, DataFrames, and Cassandra

A few months ago I wrote a post on Getting Started with Cassandra and Spark.

I’ve worked with Pandas for some small personal projects and found it very useful. The key feature is the data frame, which comes from R. Data Frames are new in Spark 1.3 and were covered in this blog post. Till now I’ve had to write Scala in order to use Spark. Since I don’t know Scala very well, this has resulted in me spending a lot of time looking for libraries, where it would normally take me less than a second to recall the proper Python library (JSON being an example).

How to change Cassandra compaction strategy on a production cluster

I’ll talk about changing the Cassandra CompactionStrategy on a live production cluster.

First of all, an extract from the Cassandra documentation:

Periodic compaction is essential to a healthy Cassandra database because Cassandra does not insert/update in place. As inserts/updates occur, instead of overwriting the rows, Cassandra writes a new timestamped version of the inserted or updated data in another SSTable. Cassandra manages the accumulation of SSTables on disk using compaction. Cassandra also does not delete in place because the SSTable is immutable. Instead, Cassandra marks data to be deleted using a tombstone.

By default, Cassandra uses SizeTieredCompactionStrategy (STC). This strategy triggers a minor compaction when there are a number of similar sized SSTables on disk, as configured by the table subproperty min_threshold, 4 by default.
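To give a feel for what “similar sized” means, here is a simplified Python sketch of size-tiered bucketing. It is an approximation for illustration, not Cassandra's actual implementation: it assumes an SSTable joins a bucket when its size falls between 0.5x and 1.5x of the bucket's average size (the values I believe are the defaults for the bucket_low and bucket_high subproperties), and it ignores every other option. The function names are hypothetical.

```python
from typing import List


def bucket_by_size(sizes: List[int], bucket_low: float = 0.5, bucket_high: float = 1.5) -> List[List[int]]:
    """Group SSTable sizes into buckets of roughly similar size."""
    buckets: List[List[int]] = []
    for size in sorted(sizes):
        for bucket in buckets:
            average = sum(bucket) / len(bucket)
            if average * bucket_low <= size <= average * bucket_high:
                bucket.append(size)
                break
        else:
            # No existing bucket is close enough in size: start a new one.
            buckets.append([size])
    return buckets


def compaction_candidates(sizes: List[int], min_threshold: int = 4) -> List[List[int]]:
    """Buckets holding at least min_threshold SSTables are eligible for compaction."""
    return [bucket for bucket in bucket_by_size(sizes) if len(bucket) >= min_threshold]
```

With sizes `[100, 110, 95, 105, 1000]`, the four small SSTables form one eligible bucket while the large one sits alone, which is why a big SSTable can wait a long time before it is compacted again.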

Another compaction strategy, available since Cassandra 1.0, is LeveledCompactionStrategy (LCS), based on LevelDB.

Since 2.0.11, DateTieredCompactionStrategy is also available.

Depending on your needs, you may need to change the compaction strategy on a running cluster. Changing this setting involves rewriting ALL SSTables to the new strategy, which may take a long time and can be CPU and I/O intensive.

I needed to change the compaction strategy on my production cluster to LeveledCompactionStrategy because of our workload: lots of updates and deletes, wide rows, etc.

Moreover, with the default STC, the largest SSTable that gets created will not be compacted again until the amount of actual data increases four-fold. So it can take a long time before old data is really deleted!

Note: You can test a new compactionStrategy on one new node with the write_survey bootstrap option. See the datastax blogpost about it.

The basic procedure to change the CompactionStrategy is to alter the table via cql :

cqlsh> ALTER TABLE mykeyspace.mytable WITH compaction = { 'class' : 'LeveledCompactionStrategy' };

If you run ALTER TABLE to change to LCS like that, all nodes will recompact their data at the same time, so performance problems can occur for hours or days…

A better solution is to migrate node by node!

You need to change the compaction strategy locally, on the fly, via JMX, as in write_survey mode. I use jmxterm for that. I think I’ll write articles about all these JMX things :)

For example, to change to LCS on the mytable table with jmxterm:

~ java -jar jmxterm-1.0-alpha-4-uber.jar --url instance1:7199

Welcome to JMX terminal. Type "help" for available commands.

$>domain org.apache.cassandra.db

#domain is set to org.apache.cassandra.db

$>bean org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies

#bean is set to org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies

$>get CompactionStrategyClass

#mbean = org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies:

CompactionStrategyClass = org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy;

$>set CompactionStrategyClass "org.apache.cassandra.db.compaction.LeveledCompactionStrategy"

#Value of attribute CompactionStrategyClass is set to "org.apache.cassandra.db.compaction.LeveledCompactionStrategy"

A nice one-liner :

~ echo "set -b org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies CompactionStrategyClass org.apache.cassandra.db.compaction.LeveledCompactionStrategy" | java -jar jmxterm-1.0-alpha-4-uber.jar --url instance1:7199
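When doing this on several nodes, one after the other, it can help to generate the one-liner instead of retyping it. This Python sketch only builds the command strings shown above and does not run anything; the helper names are hypothetical, and the jar file name and port defaults are simply taken from the example.

```python
def jmxterm_set_command(keyspace: str, table: str, strategy: str) -> str:
    """Build the jmxterm `set` command for a table's CompactionStrategyClass."""
    bean = (
        f"org.apache.cassandra.db:columnfamily={table},"
        f"keyspace={keyspace},type=ColumnFamilies"
    )
    return (
        f"set -b {bean} CompactionStrategyClass "
        f"org.apache.cassandra.db.compaction.{strategy}"
    )


def shell_one_liner(host: str, command: str,
                    jar: str = "jmxterm-1.0-alpha-4-uber.jar", port: int = 7199) -> str:
    """Wrap a jmxterm command in the echo | java -jar pipeline for one host."""
    return f'echo "{command}" | java -jar {jar} --url {host}:{port}'


command = jmxterm_set_command("mykeyspace", "mytable", "LeveledCompactionStrategy")
for host in ["instance1", "instance2"]:
    print(shell_one_liner(host, command))
```

Printing the commands rather than executing them keeps a human in the loop, which matters here because each node should finish recompacting before the next one is changed.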

On the next commitlog flush, the node will start compacting to rewrite all of its mytable SSTables to the new strategy.

You can follow the progress with nodetool:

~ nodetool compactionstats

pending tasks: 48

compaction type keyspace table completed total unit progress

Compaction mykeyspace mytable 4204151584 25676012644 bytes 16.37%

Active compaction remaining time : 0h23m30s
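If you want to watch this from a script, the progress can be recomputed from the byte counts. The sketch below is a minimal Python example that parses one data row of the output above; it assumes whitespace-separated columns ending in completed, total, unit and progress, and `compaction_progress` is a name invented for this example.

```python
def compaction_progress(row: str) -> float:
    """Return percent complete for one data row of `nodetool compactionstats`.

    Assumes the row ends with: completed, total, unit, progress.
    """
    fields = row.split()
    completed, total = int(fields[-4]), int(fields[-3])
    return 100.0 * completed / total


row = "Compaction mykeyspace mytable 4204151584 25676012644 bytes 16.37%"
print(f"{compaction_progress(row):.2f}%")  # 16.37%
```

The column layout can differ between Cassandra versions, so check it against your own output before relying on the field positions.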

You need to wait for the node to recompact all of its SSTables, then change the strategy on instance2, etc.

The transition will be done in multiple compactions if you have lots of data. By default new SSTables will be 160MB large.

You can monitor your table with nodetool cfstats too:

~ nodetool cfstats mykeyspace.mytable

[...]

Pending Tasks: 0

Table: sort

SSTable count: 31

SSTables in each level: [31/4, 0, 0, 0, 0, 0, 0, 0, 0]

[...]

You can see the 31/4: it means that there are 31 SSTables in L0, whereas Cassandra tries to have only 4 in L0.

Taken from the code ( src/java/org/apache/cassandra/db/compaction/LeveledManifest.java )

[...]

// L0: 988 [ideal: 4]

// L1: 117 [ideal: 10]

// L2: 12 [ideal: 100]

[...]
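The “SSTables in each level” line from cfstats is easy to check from a script. Here is a small Python sketch that parses it; it assumes the count/limit form (such as 31/4) marks a level holding more SSTables than its target, as in the example above. `parse_levels` and `overfull_levels` are hypothetical helper names.

```python
import re
from typing import List, Optional, Tuple


def parse_levels(line: str) -> List[Tuple[int, Optional[int]]]:
    """Parse 'SSTables in each level: [31/4, 0, ...]' into (count, limit) pairs.

    The limit is None when the level is printed as a plain count.
    """
    match = re.search(r"\[(.*)\]", line)
    if match is None:
        raise ValueError("no [...] list found in line")
    levels = []
    for item in match.group(1).split(","):
        count, _, limit = item.strip().partition("/")
        levels.append((int(count), int(limit) if limit else None))
    return levels


def overfull_levels(line: str) -> List[int]:
    """Return the indexes of levels printed in count/limit form."""
    return [i for i, (_, limit) in enumerate(parse_levels(line)) if limit is not None]


line = "SSTables in each level: [31/4, 0, 0, 0, 0, 0, 0, 0, 0]"
print(parse_levels(line)[0])   # (31, 4)
print(overfull_levels(line))   # [0]
```

An empty result from `overfull_levels` is a reasonable sign that the node has caught up and that you can move on to the next one.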

When all nodes have the new strategy, let’s go for the global ALTER TABLE. /!\ If a node restarts before the final ALTER TABLE, it will recompact to the default strategy (SizeTiered)!

~ cqlsh

cqlsh> ALTER TABLE mykeyspace.mytable WITH compaction = { 'class' : 'LeveledCompactionStrategy' };

Et voilà, I hope this article will help you :)

My latest Cassandra blogpost was one year ago… I have several in mind (jmx things !) so stay tuned !

Introduction to Spark & Cassandra

I’ve been messing with Apache Spark quite a bit lately. If you aren’t familiar, Spark is a general purpose engine for large scale data processing. Initially it comes across as simply a replacement for Hadoop, but that would be selling it short. Big time. In addition to bulk processing (goodbye MapReduce!), Spark includes:

- SQL engine

- Stream processing via Kafka, Flume, ZeroMQ

- Machine Learning

- Graph Processing

Sounds awesome, right? That’s because it is, babaganoush. The next question is where do we store our data? Spark works with a number of projects, but my database of choice these days is Apache Cassandra. Easy scale out and always up. It’s approximately this epic:

Diagnosing Problems in Production Webinar Posted

The webinar from Nov 18, Diagnosing Problems in Production, has been posted to YouTube. I’ve embedded it at the bottom of this post.

The webinar is an extended version of the talk I gave at the Cassandra Summit with Blake Eggleston, which I recapped in my blog as well. I had almost double the time to talk in the webinar and so I was able to go into more detail.

Getting Started With Pandas and HDF5

Yesterday I was pulling down some stock data from Yahoo, with the goal of building out a machine learning training set using Spark and Cassandra. If you haven’t tried Cassandra yet, it’s a database built for high availability and linear scalability. I’ve got an intro talk up here. Spark is another Apache project that kicks Cassandra into overdrive by providing a framework for batch analytics, streaming, and machine learning. On the way is support for graph operations, which makes me giddy.

Cassandra Summit Recap: Diagnosing Problems in Production

Introduction

Last week at the Cassandra Summit I gave a talk with Blake Eggleston on diagnosing performance problems in production. We spoke to about 300 people for about 25 minutes followed by a healthy Q&A session. I’ve expanded on our presentation to include a few extra tools, screenshots, and more clarity on our talking points.

There’s finally a lot of material available for someone looking to get started with Cassandra. There’s several introductory videos on YouTube by both me and Patrick McFadin as well as videos on time series data modeling. I’ve posted videos for my own project, cqlengine, (intro & advanced), and plenty more on the PlanetCassandra channel. There’s also a boatload of getting started material on PlanetCassandra written by Rebecca Mills.

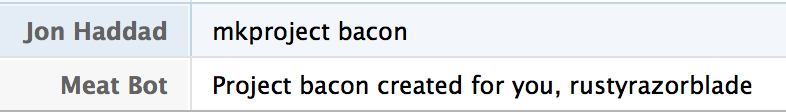

Say Hello to Meatbot

What is Meatbot?

Meatbot is a HipChat bot for managing status updates for our growing team of Evangelists at DataStax. It’s built in Python 2.7, utilizing the Will library. The status updates are stored in Cassandra using cqlengine. Yep, it’s up on github.

There’s a few simple commands. First, you tell Meatbot about each project you work on.

Once you’ve got your projects, you can list them with lsproject or delete them with rmproject.

CQLEngine now using the Python Native Driver

I’m happy to announce that cqlengine is now using the Python Native Driver. For the most part, this should be a trivial upgrade. See the notes below on upgrading.

The Good News

- Significantly less code to maintain in cqlengine itself. We no longer need to maintain connection pools, deal with fail over, dead servers, server discovery, server removal.

- The native driver multiplexes queries over each socket, so fewer sockets stay open.

- Notifications can be sent back to the client from the server. An example of this is a schema modification or when a new server is added.

- You can now use the policies for load balancing and failover. See the policies api of the native driver for more information.

Upgrading

If you’re using an earlier version of cqlengine, there are a few caveats to upgrading.

No Downtime Database Migrations

Introduction

Back at my last job, we successfully migrated from MongoDB to Cassandra without any downtime. We did two webinars with Datastax at the time (I am now a Datastax employee). Our first webinar was a general overview of the migration. In the second, we covered some of the lessons we learned after being in production with Cassandra for a while. We touched on our migration process, but didn’t get deep into the details. This post will discuss the strategy, its goals, and what we learned along the way. The strategy applies to any database migration, and is not scoped only to moving between databases either.

Replace a dead node in Cassandra

Note (June 2020): this article is old and not really relevant anymore. If you use a modern version of Cassandra, look at the -Dcassandra.replace_address_first_boot option!

I want to share some tips from my experiments with Cassandra (version 2.0.x).

I found some documentation on the DataStax website about replacing a dead node, but it did not suit our needs: after a hardware crash, we set up the new node with exactly the same IP (an “in place” replacement). Update: the documentation is now up to date on the DataStax site!

If you try to start the new node with the same IP, Cassandra refuses to start and logs:

java.lang.RuntimeException: A node with address /10.20.10.2 already exists, cancelling join. Use cassandra.replace_address if you want to replace this node.

So, we need to use the “cassandra.replace_address” directive (which is not really documented ? :() See this commit and this bug report, available since 1.2.11/2.0.0, it’s an easier solution and it works.

+ - New replace_address to supplant the (now removed) replace_token and

+ replace_node workflows to replace a dead node in place. Works like the

+ old options, but takes the IP address of the node to be replaced.

It’s a JVM directive, so we can add it at the end of /etc/cassandra/cassandra-env.sh (debian package), for example:

JVM_OPTS="$JVM_OPTS -Dcassandra.replace_address=10.20.10.2"

Of course, 10.20.10.2 = ip of your dead/new node.

Now, start cassandra, and in logs you will see :

INFO [main] 2014-03-10 14:58:17,804 StorageService.java (line 941) JOINING: schema complete, ready to bootstrap

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: waiting for pending range calculation

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: calculation complete, ready to bootstrap

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: Replacing a node with token(s): [...]

[...]

INFO [main] 2014-03-10 14:58:17,844 StorageService.java (line 941) JOINING: Starting to bootstrap...

INFO [main] 2014-03-10 14:58:18,551 StreamResultFuture.java (line 82) [Stream #effef960-6efe-11e3-9a75-3f94ec5476e9] Executing streaming plan for Bootstrap

The node is now in bootstrapping mode and will retrieve data from the cluster. This may take a long time.

If the node is a seed node, a warning will indicate that the node did not auto bootstrap. This is normal; you need to run a nodetool repair on the node.

On the new node :

# nodetool netstats

Mode: JOINING

Bootstrap effef960-6efe-11e3-9a75-3f94ec5476e9

/10.20.10.1

Receiving 102 files, 17467071157 bytes total

[...]

After some time, you will see some information in the logs!

On the new node :

INFO [STREAM-IN-/10.20.10.1] 2014-03-10 15:15:40,363 StreamResultFuture.java (line 215) [Stream #effef960-6efe-11e3-9a75-3f94ec5476e9] All sessions completed

INFO [main] 2014-03-10 15:15:40,366 StorageService.java (line 970) Bootstrap completed! for the tokens [...]

[...]

INFO [main] 2014-03-10 15:15:40,412 StorageService.java (line 1371) Node /10.20.10.2 state jump to normal

WARN [main] 2014-03-10 15:15:40,413 StorageService.java (line 1378) Not updating token metadata for /10.20.30.51 because I am replacing it

INFO [main] 2014-03-10 15:15:40,419 StorageService.java (line 821) Startup completed! Now serving reads.

And on other nodes :

INFO [GossipStage:1] 2014-03-10 15:15:40,625 StorageService.java (line 1371) Node /10.20.10.2 state jump to normal

Et voilà, dead node has been replaced !

Don’t forget to REMOVE the modifications to cassandra-env.sh once the bootstrap is complete!

Enjoy !

Cassandra FAQ: Can I start with a Single Node?

A frequently asked question on the mailing list by developers new to Cassandra is if it’s possible to start with a single node and scale up as their needs grow. This seems to come most often from people familiar with MySQL, Mongo, or another database which uses replication to scale reads.

The short answer to this question is yes, you can absolutely run a one node cluster. However, it’s important to understand the caveats of doing so. Cassandra was built with the intention of running in a cluster. This means that several defaults that are reasonable for a cluster either aren’t practical or don’t apply with a single node.

What's new in cqlengine 0.7

Recently we released version 0.7 of cqlengine, the Python object mapper for CQL3. We’ve been steadily moving towards full support of all of CQL3 for both queries and for table configuration. This post will outline the new features and provide examples on how to use them.

Counters

With counter support finally included it’s now possible to create and use tables with counter columns. They are exposed to the Python application as simple integers, and changes to their values will be sent as deltas to Cassandra. Let’s take a look at an example. I’ll assume you already have Cassandra running locally.
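To illustrate just the delta idea without a running cluster, here is a plain-Python sketch. It does not use cqlengine's API: the CounterField class, its method names, and the page_views table are all hypothetical, made up for this illustration. The only thing taken from CQL is the shape of a counter update, which always adds or subtracts an amount rather than setting a value.

```python
class CounterField:
    """Hypothetical helper: an integer plus the value last persisted."""

    def __init__(self, persisted=0):
        self.persisted = persisted  # value as last written to the database
        self.value = persisted      # value the application sees and modifies

    def delta(self):
        """Difference between the in-memory value and the persisted one."""
        return self.value - self.persisted

    def update_cql(self, table, column, key_column):
        """Build a CQL counter update for the pending delta, or None."""
        d = self.delta()
        if d == 0:
            return None  # nothing changed, nothing to send
        sign = "+" if d > 0 else "-"
        return "UPDATE {t} SET {c} = {c} {s} {n} WHERE {k} = ?".format(
            t=table, c=column, s=sign, n=abs(d), k=key_column
        )

    def mark_saved(self):
        """Call after the update has been applied."""
        self.persisted = self.value


# The application treats the counter as a simple integer...
views = CounterField(persisted=10)
views.value += 5

# ...but only the change is sent to the database.
statement = views.update_cql("page_views", "views", "page_id")
print(statement)
views.mark_saved()
```

Sending the delta rather than the new total means two clients incrementing the same counter at the same time don't overwrite each other.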

Cassandra, CQL3, and Time Series Data with timeuuid

Cassandra is a BigTable inspired database created at Facebook. It was open sourced several years ago and is now an Apache project.

In Cassandra, a row can be very wide and is identified by a key. Think of it as more like a giant array. The data is stored on disk sorted by the key you pick, meaning if you pick the right sort option and key you can have some really fast queries. Here we’ll go over a time series.
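The "giant array" idea can be sketched in plain Python before touching a real cluster. This is only an in-memory model, not Cassandra itself: the WideRowStore class and the sensor-1 key are hypothetical names for illustration. It uses version 1 UUIDs from the standard library, which embed a timestamp the same way a timeuuid does, and keeps each row's entries sorted by that timestamp.

```python
import uuid
from collections import defaultdict
from datetime import datetime, timedelta, timezone

# 100ns ticks between the UUID epoch (1582-10-15) and the Unix epoch.
UUID_EPOCH_OFFSET = 0x01B21DD213814000
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def timeuuid_to_datetime(u):
    """Recover the timestamp embedded in a version 1 UUID."""
    micros = (u.time - UUID_EPOCH_OFFSET) // 10
    return UNIX_EPOCH + timedelta(microseconds=micros)


class WideRowStore:
    """Hypothetical in-memory model: one wide row per key, sorted by time."""

    def __init__(self):
        self.rows = defaultdict(list)

    def insert(self, key, value):
        event_id = uuid.uuid1()  # time-based UUID, like a timeuuid
        self.rows[key].append((event_id, value))
        # Keep the row ordered by the embedded timestamp.
        self.rows[key].sort(key=lambda col: col[0].time)
        return event_id

    def latest(self, key, limit):
        """Newest entries first: a slice off the end of the sorted row."""
        return list(reversed(self.rows[key][-limit:]))


store = WideRowStore()
for reading in (20.5, 21.0, 21.7):
    store.insert("sensor-1", reading)

for event_id, reading in store.latest("sensor-1", 2):
    print(timeuuid_to_datetime(event_id).isoformat(), reading)
```

Because the row is already sorted, fetching the most recent entries is a slice rather than a scan, which is where the fast queries come from.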

Setting up RAID0 in Ubuntu 12.04 in AWS High I/O

Amazon announced high I/O instances today. This is huge for anyone with a database larger than available memory, as it’s been a complete nightmare dealing with EBS up till now. Now your Cassandra, MongoDB, MySQL, or whatever you’re using should be able to perform well without having to keep your entire dataset in memory.

With each instance you get 2x1TB of disk. In this tutorial I’ll be setting it up as a RAID0 to get a single 2TB disk which should deliver excellent performance.