Cassandra + PySpark DataFrames revisited

A little while back I wrote a post on working with DataFrames from PySpark, using Cassandra as a data source. DataFrames are, in my opinion, a fantastic, flexible API that makes Spark roughly 14 orders of magnitude nicer to work with as opposed to RDDs. When I wrote the original blog post, the only way to work with DataFrames from PySpark was to get an RDD and call toDF().

Sounds freaking amazing - what’s the problem?

Joining DataFrames with Pandas

In this post I’ll walk through the process of reading in various plain text database files using Pandas, and then joining together the different DataFrames. All my work was done through an IPython notebook.

I decided to mess around with the labor statistics database that’s up on Amazon. My end goal was to save all the relevant information into Cassandra for future analysis with PySpark. If the files were bigger, I’d do all the initial loading with PySpark, but they’re pretty small and Pandas has a lot of functionality that’s still missing on the Spark side.

You're Already Eventually Consistent

People new to Apache Cassandra are often concerned about the phrase “eventual consistency.” It’s one of those things that seems so foreign, especially if you’re coming from a relational database. When I am with my RDBMS I get wrapped in the sweet cocoon of ACID transactions!

Is the entire system really safe though? Are we perfectly ACID throughout our entire application? Probably not. Let’s see how it breaks down and where the tradeoffs are.

Spark Streaming With Python and Kafka

Last week I wrote about using PySpark with Cassandra, showing how we can take tables out of Cassandra and easily apply arbitrary filters using DataFrames. This is great if you want to do exploratory work or operate on large datasets. What if you’re interested in ingesting lots of data and getting near real time feedback into your application? Enter Spark Streaming.

Spark Streaming is the process of ingesting and operating on data in microbatches, which are generated repeatedly on a fixed window of time. You can visualize it like this:
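Picture aside, the microbatch idea can also be sketched in plain Python. This is only a toy model of the concept, not the Spark Streaming API: the function name `microbatches`, the `(timestamp, payload)` event shape, and the one-second window are all assumptions made for illustration.

```python
from collections import defaultdict


def microbatches(events, batch_seconds):
    """Group (timestamp, payload) events into fixed-width time windows."""
    batches = defaultdict(list)
    for timestamp, payload in events:
        # Integer division assigns each event to the window it falls in.
        window_start = int(timestamp // batch_seconds) * batch_seconds
        batches[window_start].append(payload)
    # Return the windows in time order, one batch per window.
    return sorted(batches.items())


# Hypothetical events: (seconds since start, payload)
events = [(0.2, "a"), (0.9, "b"), (1.1, "c"), (2.5, "d"), (2.7, "e")]
for window_start, payloads in microbatches(events, batch_seconds=1):
    print(window_start, payloads)
```

Each window is then processed as a small, ordinary batch job, which is what gives the "near real time" behaviour.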

On The Bleeding Edge - PySpark, DataFrames, and Cassandra

A few months ago I wrote a post on Getting Started with Cassandra and Spark.

I’ve worked with Pandas for some small personal projects and found it very useful. The key feature is the data frame, which comes from R. DataFrames are new in Spark 1.3 and were covered in this blog post. Until now I’ve had to write Scala in order to use Spark. Since I don’t know Scala very well, I’ve spent a lot of time hunting for libraries whose Python equivalents I could recall in less than a second (JSON being an example).

How to change Cassandra compaction strategy on a production cluster

I’ll talk about changing the Cassandra CompactionStrategy on a live production cluster.

First of all, an extract of the Cassandra documentation:

Periodic compaction is essential to a healthy Cassandra database because Cassandra does not insert/update in place. As inserts/updates occur, instead of overwriting the rows, Cassandra writes a new timestamped version of the inserted or updated data in another SSTable. Cassandra manages the accumulation of SSTables on disk using compaction. Cassandra also does not delete in place because the SSTable is immutable. Instead, Cassandra marks data to be deleted using a tombstone.

By default, Cassandra uses SizeTieredCompactionStrategy (STC). This strategy triggers a minor compaction when a number of similar-sized SSTables are on disk, as configured by the table subproperty min_threshold (4 by default).
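To make the "similar-sized SSTables" trigger concrete, here is a toy model in Python. It is a simplification for illustration, not Cassandra’s actual implementation: the function names are made up, and the `bucket_low`, `bucket_high` and `min_threshold` values (0.5, 1.5 and 4) are assumed to mirror the size-tiered defaults.

```python
def size_tiered_buckets(sstable_sizes, bucket_low=0.5, bucket_high=1.5):
    """Group SSTable sizes into buckets of similar size (toy model)."""
    buckets = []  # each bucket is a list of sizes
    for size in sorted(sstable_sizes):
        for bucket in buckets:
            average = sum(bucket) / len(bucket)
            # A size joins a bucket when it is close to the bucket average.
            if average * bucket_low <= size <= average * bucket_high:
                bucket.append(size)
                break
        else:
            # No existing bucket is similar enough: start a new one.
            buckets.append([size])
    return buckets


def compaction_candidates(sstable_sizes, min_threshold=4):
    """Return the buckets holding at least min_threshold SSTables."""
    return [b for b in size_tiered_buckets(sstable_sizes)
            if len(b) >= min_threshold]


# Hypothetical SSTable sizes in MB
sizes_mb = [10, 11, 12, 13, 160, 170, 2000]
print(size_tiered_buckets(sizes_mb))
print(compaction_candidates(sizes_mb))
```

In this model only the four small SSTables qualify for compaction. The single 2000 MB SSTable sits alone in its bucket and is left untouched until enough SSTables of a similar size exist.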

Another compaction strategy, available since Cassandra 1.0, is LeveledCompactionStrategy (LCS), based on LevelDB.

Since 2.0.11, DateTieredCompactionStrategy is also available.

Depending on your needs, you may need to change the compaction strategy on a running cluster. Changing this setting involves rewriting ALL SSTables to the new strategy, which may take a long time and can be CPU and I/O intensive.

I needed to change the compaction strategy on my production cluster to LeveledCompactionStrategy because of our workload: lots of updates and deletes, wide rows, etc.

Moreover, with the default STC, the largest SSTable that gets created will not be compacted again until the amount of actual data increases four-fold. So it can take a long time before old data is really deleted!

Note: You can test a new compaction strategy on one new node with the write_survey bootstrap option. See the DataStax blog post about it.

The basic procedure to change the CompactionStrategy is to alter the table via cql :

cqlsh> ALTER TABLE mykeyspace.mytable WITH compaction = { 'class' : 'LeveledCompactionStrategy' };

If you run ALTER TABLE to switch to LCS like that, all nodes will recompact their data at the same time, so performance problems can occur for hours or days…

A better solution is to migrate node by node!

You need to change the compaction strategy locally, on the fly, via JMX, as in write_survey mode.

I use jmxterm for that. I think I’ll write articles about all these JMX things :)

For example, to change to LCS on the mytable table with jmxterm:

~ java -jar jmxterm-1.0-alpha-4-uber.jar --url instance1:7199

Welcome to JMX terminal. Type "help" for available commands.

$>domain org.apache.cassandra.db

#domain is set to org.apache.cassandra.db

$>bean org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies

#bean is set to org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies

$>get CompactionStrategyClass

#mbean = org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies:

CompactionStrategyClass = org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy;

$>set CompactionStrategyClass "org.apache.cassandra.db.compaction.LeveledCompactionStrategy"

#Value of attribute CompactionStrategyClass is set to "org.apache.cassandra.db.compaction.LeveledCompactionStrategy"

A nice one-liner :

~ echo "set -b org.apache.cassandra.db:columnfamily=mytable,keyspace=mykeyspace,type=ColumnFamilies CompactionStrategyClass org.apache.cassandra.db.compaction.LeveledCompactionStrategy" | java -jar jmxterm-1.0-alpha-4-uber.jar --url instance1:7199
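Since the same one-liner has to be run against each node in turn, a small Python helper can generate it per host. This is only a sketch: the function name `jmxterm_command` and the host `instance3` are made up for illustration, while the bean name, attribute, jar file and port are the ones used above. It only prints the commands; it does not execute anything.

```python
import shlex

BEAN_TEMPLATE = ("org.apache.cassandra.db:columnfamily={table},"
                 "keyspace={keyspace},type=ColumnFamilies")
LCS_CLASS = "org.apache.cassandra.db.compaction.LeveledCompactionStrategy"


def jmxterm_command(host, keyspace, table, strategy_class=LCS_CLASS,
                    jar="jmxterm-1.0-alpha-4-uber.jar", port=7199):
    """Build the shell one-liner that sets CompactionStrategyClass on one node."""
    bean = BEAN_TEMPLATE.format(table=table, keyspace=keyspace)
    jmx_set = "set -b {} CompactionStrategyClass {}".format(bean, strategy_class)
    # shlex.quote protects the jmxterm command from the shell.
    return "echo {} | java -jar {} --url {}:{}".format(
        shlex.quote(jmx_set), shlex.quote(jar), host, port)


# instance3 is a hypothetical third node
for host in ["instance1", "instance2", "instance3"]:
    print(jmxterm_command(host, "mykeyspace", "mytable"))
```

Run the printed commands one node at a time, waiting for each node to finish recompacting before moving on to the next.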

On the next commitlog flush, the node will start compacting to rewrite all of its mytable SSTables to the new strategy.

You can follow the progress with nodetool:

~ nodetool compactionstats

pending tasks: 48

compaction type keyspace table completed total unit progress

Compaction mykeyspace mytable 4204151584 25676012644 bytes 16.37%

Active compaction remaining time : 0h23m30s

You need to wait for the node to recompact all of its SSTables, then change the strategy on instance2, etc.
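If you would rather script the waiting than watch the terminal, the compactionstats output can be parsed with a few lines of Python. This is a minimal sketch that assumes the output looks exactly like the sample above (the layout may differ between Cassandra versions); the function names are made up for illustration.

```python
import re


def pending_tasks(compactionstats_output):
    """Extract the 'pending tasks' counter from nodetool compactionstats output."""
    match = re.search(r"^pending tasks:\s*(\d+)", compactionstats_output,
                      re.MULTILINE)
    if match is None:
        raise ValueError("no 'pending tasks' line found")
    return int(match.group(1))


def compaction_progress(compactionstats_output, keyspace, table):
    """Return completed/total as a fraction for the table, or None if idle."""
    pattern = r"^Compaction\s+{}\s+{}\s+(\d+)\s+(\d+)\s+bytes".format(
        re.escape(keyspace), re.escape(table))
    match = re.search(pattern, compactionstats_output, re.MULTILINE)
    if match is None:
        return None
    completed, total = int(match.group(1)), int(match.group(2))
    return completed / total


# Sample output copied from the nodetool run above
SAMPLE = """pending tasks: 48
compaction type keyspace table completed total unit progress
Compaction mykeyspace mytable 4204151584 25676012644 bytes 16.37%
Active compaction remaining time : 0h23m30s
"""

print(pending_tasks(SAMPLE))
print(compaction_progress(SAMPLE, "mykeyspace", "mytable"))
```

A node can be considered done once there are no pending tasks and no running compaction for the table.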

The transition will be done in multiple compactions if you have lots of data. By default, new SSTables will be 160MB in size.

You can monitor your table with nodetool cfstats too:

~ nodetool cfstats mykeyspace.mytable

[...]

Pending Tasks: 0

Table: sort

SSTable count: 31

SSTables in each level: [31/4, 0, 0, 0, 0, 0, 0, 0, 0]

[...]

You can see the 31/4: it means that there are 31 SSTables in L0, whereas Cassandra tries to keep only 4 in L0.

Taken from the code ( src/java/org/apache/cassandra/db/compaction/LeveledManifest.java )

[...]

// L0: 988 [ideal: 4]

// L1: 117 [ideal: 10]

// L2: 12 [ideal: 100]

[...]
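The count/ideal notation is easy to check from a script. Here is a small Python sketch that parses the "SSTables in each level" line from cfstats and reports which levels are over their ideal size. The function names are made up for illustration, and the line format is assumed to match the sample above.

```python
import re


def parse_levels(cfstats_line):
    """Parse 'SSTables in each level: [31/4, 0, ...]' into (count, ideal) tuples."""
    match = re.search(r"SSTables in each level:\s*\[(.*)\]", cfstats_line)
    if match is None:
        raise ValueError("not an 'SSTables in each level' line")
    levels = []
    for entry in match.group(1).split(","):
        entry = entry.strip()
        if "/" in entry:
            # 'count/ideal' is shown when a level holds more than its ideal.
            count, ideal = entry.split("/")
            levels.append((int(count), int(ideal)))
        else:
            levels.append((int(entry), None))
    return levels


def overfull_levels(levels):
    """Return the indexes of levels holding more SSTables than their ideal."""
    return [index for index, (count, ideal) in enumerate(levels)
            if ideal is not None and count > ideal]


line = "SSTables in each level: [31/4, 0, 0, 0, 0, 0, 0, 0, 0]"
levels = parse_levels(line)
print(levels[0])
print(overfull_levels(levels))
```

While the recompaction is running, L0 shows up as overfull; it should drain as SSTables are promoted to the higher levels.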

When all nodes have the new strategy, let’s go for the global ALTER TABLE. /!\ If a node restarts before the final ALTER TABLE, it will recompact to the default strategy (SizeTiered)!

~ cqlsh

cqlsh> ALTER TABLE mykeyspace.mytable WITH compaction = { 'class' : 'LeveledCompactionStrategy' };

Et voilà, I hope this article will help you :)

My last Cassandra blog post was a year ago… I have several in mind (JMX things!) so stay tuned!

Introduction to Spark & Cassandra

I’ve been messing with Apache Spark quite a bit lately. If you aren’t familiar, Spark is a general purpose engine for large scale data processing. Initially it comes across as simply a replacement for Hadoop, but that would be selling it short. Big time. In addition to bulk processing (goodbye MapReduce!), Spark includes:

- SQL engine

- Stream processing via Kafka, Flume, ZeroMQ

- Machine Learning

- Graph Processing

Sounds awesome, right? That’s because it is, babaganoush. The next question is where do we store our data? Spark works with a number of projects, but my database of choice these days is Apache Cassandra. Easy scale out and always up. It’s approximately this epic:

Diagnosing Problems in Production Webinar Posted

The webinar from Nov 18, Diagnosing Problems in Production, has been posted to YouTube. I’ve embedded it at the bottom of this post.

The webinar is an extended version of the talk I gave at the Cassandra Summit with Blake Eggleston, which I recapped in my blog as well. I had almost double the time to talk in the webinar and so I was able to go into more detail.

Getting Started With Pandas and HDF5

Yesterday I was pulling down some stock data from Yahoo, with the goal of building out a machine learning training set using Spark and Cassandra. If you haven’t tried Cassandra yet, it’s a database built for high availability and linear scalability. I’ve got an intro talk up here. Spark is another Apache project that kicks Cassandra into overdrive by providing a framework for batch analytics, streaming, and machine learning. On the way is support for graph operations which makes me giddy.

Cassandra Summit Recap: Diagnosing Problems in Production

Introduction

Last week at the Cassandra Summit I gave a talk with Blake Eggleston on diagnosing performance problems in production. We spoke to about 300 people for about 25 minutes followed by a healthy Q&A session. I’ve expanded on our presentation to include a few extra tools, screenshots, and more clarity on our talking points.

There’s finally a lot of material available for someone looking to get started with Cassandra. There are several introductory videos on YouTube by both me and Patrick McFadin as well as videos on time series data modeling. I’ve posted videos for my own project, cqlengine, (intro & advanced), and plenty more on the PlanetCassandra channel. There’s also a boatload of getting started material on PlanetCassandra written by Rebecca Mills.

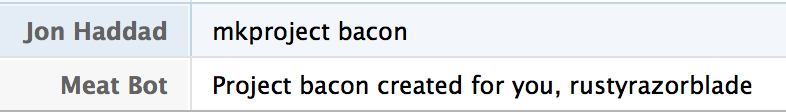

Say Hello to Meatbot

What is Meatbot?

Meatbot is a HipChat bot for managing status updates for our growing team of Evangelists at DataStax. It’s built in Python 2.7, utilizing the Will library. The status updates are stored in Cassandra using cqlengine. Yep, it’s up on github.

There’s a few simple commands. First, you tell Meatbot about each project you work on.

Once you’ve got your projects, you can list them with lsproject or delete them with rmproject.

CQLEngine now using the Python Native Driver

I’m happy to announce that cqlengine is now using the Python Native Driver. For the most part, this should be a trivial upgrade. See the notes below on upgrading.

The Good News

- Significantly less code to maintain in cqlengine itself. We no longer need to maintain connection pools or deal with failover, dead servers, server discovery, and server removal.

- Native driver multiplexes queries over each socket, so fewer sockets stay open.

- Notifications can be sent back to the client from the server. An example of this is a schema modification or when a new server is added.

- You can now use the policies for load balancing and failover. See the policies api of the native driver for more information.

Upgrading

If you’re using an earlier version of cqlengine, there are a few caveats to upgrading.

No Downtime Database Migrations

Introduction

Back at my last job, we successfully migrated from MongoDB to Cassandra without any downtime. We did two webinars with DataStax at the time (I am now a DataStax employee). Our first webinar was a general overview of the migration. In the second, we covered some of the lessons we learned after being in production with Cassandra for a while. We touched on our migration process, but didn’t get deep into the details. This post will discuss the strategy, its goals, and what we learned along the way. The strategy applies to any database migration, and is not scoped only to moving between databases either.

Replace a dead node in Cassandra

Note (June 2020): this article is old and not really relevant anymore. If you use a modern version of Cassandra, look at the -Dcassandra.replace_address_first_boot option!

I want to share some tips from my experiments with Cassandra (version 2.0.x).

I found some documentation on the DataStax website about replacing a dead node, but it is not suitable for our needs because, in case of a hardware crash, we will set up a new node with exactly the same IP (replace “in place”). Update: the documentation is now up to date on DataStax!

If you try to start the new node with the same IP, Cassandra fails to start with:

java.lang.RuntimeException: A node with address /10.20.10.2 already exists, cancelling join. Use cassandra.replace_address if you want to replace this node.

So we need to use the “cassandra.replace_address” directive (which is not really documented? :( ). See this commit and this bug report: it is available since 1.2.11/2.0.0, it’s an easier solution, and it works.

+ - New replace_address to supplant the (now removed) replace_token and

+ replace_node workflows to replace a dead node in place. Works like the

+ old options, but takes the IP address of the node to be replaced.

It’s a JVM directive, so we can add it at the end of /etc/cassandra/cassandra-env.sh (Debian package), for example:

JVM_OPTS="$JVM_OPTS -Dcassandra.replace_address=10.20.10.2"

Of course, 10.20.10.2 = IP of your dead/new node.

Now start Cassandra, and in the logs you will see:

INFO [main] 2014-03-10 14:58:17,804 StorageService.java (line 941) JOINING: schema complete, ready to bootstrap

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: waiting for pending range calculation

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: calculation complete, ready to bootstrap

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: Replacing a node with token(s): [...]

[...]

INFO [main] 2014-03-10 14:58:17,844 StorageService.java (line 941) JOINING: Starting to bootstrap...

INFO [main] 2014-03-10 14:58:18,551 StreamResultFuture.java (line 82) [Stream #effef960-6efe-11e3-9a75-3f94ec5476e9] Executing streaming plan for Bootstrap

The node is in bootstrapping mode and will retrieve data from the cluster. This may take a lot of time.

If the node is a seed node, a warning will indicate that the node did not auto bootstrap. This is normal; you need to run nodetool repair on the node.

On the new node :

# nodetool netstats

Mode: JOINING

Bootstrap effef960-6efe-11e3-9a75-3f94ec5476e9

/10.20.10.1

Receiving 102 files, 17467071157 bytes total

[...]

After some time, you will see some information in the logs!

On the new node :

INFO [STREAM-IN-/10.20.10.1] 2014-03-10 15:15:40,363 StreamResultFuture.java (line 215) [Stream #effef960-6efe-11e3-9a75-3f94ec5476e9] All sessions completed

INFO [main] 2014-03-10 15:15:40,366 StorageService.java (line 970) Bootstrap completed! for the tokens [...]

[...]

INFO [main] 2014-03-10 15:15:40,412 StorageService.java (line 1371) Node /10.20.10.2 state jump to normal

WARN [main] 2014-03-10 15:15:40,413 StorageService.java (line 1378) Not updating token metadata for /10.20.30.51 because I am replacing it

INFO [main] 2014-03-10 15:15:40,419 StorageService.java (line 821) Startup completed! Now serving reads.

And on other nodes :

INFO [GossipStage:1] 2014-03-10 15:15:40,625 StorageService.java (line 1371) Node /10.20.10.2 state jump to normal
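To check this from a script instead of reading the log by eye, a few lines of Python are enough. This is a minimal sketch that assumes the log wording shown above; the function name is made up for illustration.

```python
import re

# Matches the 'state jump to normal' message shown in the logs above.
STATE_NORMAL = re.compile(r"Node /(\d+\.\d+\.\d+\.\d+) state jump to normal")


def nodes_back_to_normal(log_lines):
    """Return the set of node IPs reported as 'state jump to normal'."""
    found = set()
    for line in log_lines:
        match = STATE_NORMAL.search(line)
        if match:
            found.add(match.group(1))
    return found


# Log lines copied from the output above
LOG = [
    "INFO [main] 2014-03-10 15:15:40,366 StorageService.java (line 970) "
    "Bootstrap completed! for the tokens [...]",
    "INFO [GossipStage:1] 2014-03-10 15:15:40,625 StorageService.java "
    "(line 1371) Node /10.20.10.2 state jump to normal",
]

print("10.20.10.2" in nodes_back_to_normal(LOG))
```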

Et voilà, the dead node has been replaced!

Don’t forget to REMOVE the modifications to cassandra-env.sh after the bootstrap completes!
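That last step is easy to forget, so it can be worth a quick automated check. The sketch below scans the text of cassandra-env.sh for a leftover replace_address option. The function name is made up for illustration, and the `-ea` line in the sample is only a placeholder for whatever else the file contains.

```python
import re

# Matches replace_address as well as the newer replace_address_first_boot.
REPLACE_OPTION = re.compile(r"-Dcassandra\.replace_address(?:_first_boot)?=\S+")


def leftover_replace_options(env_sh_text):
    """Return uncommented lines that still set cassandra.replace_address."""
    leftovers = []
    for line in env_sh_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            continue  # commented-out lines are harmless
        if REPLACE_OPTION.search(stripped):
            leftovers.append(stripped)
    return leftovers


# Sample file content; the first line is an illustrative placeholder
ENV = '''JVM_OPTS="$JVM_OPTS -ea"
JVM_OPTS="$JVM_OPTS -Dcassandra.replace_address=10.20.10.2"
'''

print(leftover_replace_options(ENV))
```

In practice you would read the real file, for example with `open("/etc/cassandra/cassandra-env.sh").read()`, and alert if the returned list is not empty.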

Enjoy !

Cassandra FAQ: Can I start with a Single Node?

A frequently asked question on the mailing list by developers new to Cassandra is if it’s possible to start with a single node and scale up as their needs grow. This seems to come most often from people familiar with MySQL, Mongo, or another database which uses replication to scale reads.

The short answer to this question is yes, you can absolutely run a one node cluster. However, it’s important to understand the caveats of doing so. Cassandra was built with the intention of running in a cluster. This means that several defaults that are reasonable for a cluster either aren’t practical or don’t apply with a single node.

What's new in cqlengine 0.7

Recently we released version 0.7 of cqlengine, the Python object mapper for CQL3. We’ve been steadily moving towards full support of all of CQL3 for both queries and for table configuration. This post will outline the new features and provide examples on how to use them.

Counters

With counter support finally included it’s now possible to create and use tables with counter columns. They are exposed to the Python application as simple integers, and changes to their values will be sent as deltas to Cassandra. Let’s take a look at an example. I’ll assume you already have Cassandra running locally.

Cassandra, CQL3, and Time Series Data with timeuuid

Cassandra is a BigTable inspired database created at Facebook. It was open sourced several years ago and is now an Apache project.

In Cassandra, a row can be very wide and is identified by a key. Think of it as more like a giant array. The data is stored on disk sorted by the key you pick, meaning if you pick the right sort option and key you can have some really fast queries. Here we’ll go over a time series.
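A timeuuid is a version 1 (time-based) UUID, so the timestamp can be recovered from the value itself. Here is a minimal sketch using only Python’s standard library; the helper name `timeuuid_to_datetime` is made up for illustration and no Cassandra driver is involved.

```python
import uuid
from datetime import datetime, timedelta, timezone

# Version 1 UUIDs count 100-nanosecond intervals since the Gregorian epoch.
GREGORIAN_EPOCH = datetime(1582, 10, 15, tzinfo=timezone.utc)


def timeuuid_to_datetime(time_uuid):
    """Recover the UTC timestamp embedded in a version 1 UUID."""
    if time_uuid.version != 1:
        raise ValueError("not a version 1 (time-based) UUID")
    # uuid.time is in 100 ns units; divide by 10 to get microseconds.
    return GREGORIAN_EPOCH + timedelta(microseconds=time_uuid.time // 10)


event_id = uuid.uuid1()
print(event_id, timeuuid_to_datetime(event_id))
```

Because the timestamp is part of the identifier, a timeuuid gives each event a unique id and a position in time at once, which is what makes it a good fit for time series columns.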

Setting up RAID0 in Ubuntu 12.04 in AWS High I/O

Amazon announced high I/O instances today. This is huge for anyone with a database larger than available memory, as it’s been a complete nightmare dealing with EBS up till now. Now your Cassandra, MongoDB, MySQL, or whatever you’re using should be able to perform well without requiring you to keep your entire dataset in memory.

With each instance you get 2x1TB of disk. In this tutorial I’ll be setting it up as a RAID0 to get a single 2TB disk which should deliver excellent performance.