Getting Started With Pandas and HDF5

Yesterday I was pulling down some stock data from Yahoo, with the goal of building out a machine learning training set using Spark and Cassandra. If you haven’t tried Cassandra yet, it’s a database built for high availability and linear scalability. I’ve got an intro talk up here. Spark is another Apache project that kicks Cassandra into overdrive by providing a framework for batch analytics, streaming, and machine learning. Support for graph operations is on the way, which makes me giddy.

Cassandra Summit Recap: Diagnosing Problems in Production

Introduction

Last week at the Cassandra Summit I gave a talk with Blake Eggleston on diagnosing performance problems in production. We spoke to about 300 people for about 25 minutes followed by a healthy Q&A session. I’ve expanded on our presentation to include a few extra tools, screenshots, and more clarity on our talking points.

There’s finally a lot of material available for someone looking to get started with Cassandra. There are several introductory videos on YouTube by both me and Patrick McFadin, as well as videos on time series data modeling. I’ve posted videos for my own project, cqlengine (intro & advanced), and plenty more on the PlanetCassandra channel. There’s also a boatload of getting started material on PlanetCassandra written by Rebecca Mills.

Say Hello to Meatbot

What is Meatbot?

Meatbot is a HipChat bot for managing status updates for our growing team of Evangelists at DataStax. It’s built in Python 2.7, using the Will library. The status updates are stored in Cassandra using cqlengine. Yep, it’s up on GitHub.

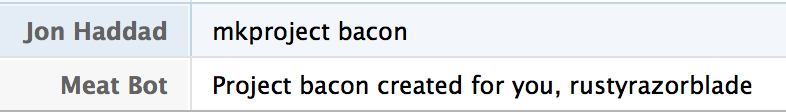

There are a few simple commands. First, you tell Meatbot about each project you work on.

Once you’ve got your projects, you can list them with lsproject or delete them with rmproject.

CQLEngine now using the Python Native Driver

I’m happy to announce that cqlengine is now using the Python Native Driver. For the most part, this should be a trivial upgrade. See the notes below on upgrading.

The Good News

- Significantly less code to maintain in cqlengine itself. We no longer need to maintain connection pools or deal with failover, dead servers, server discovery, and server removal.

- The native driver multiplexes queries over each socket, so fewer sockets stay open.

- Notifications can be sent back to the client from the server. An example of this is a schema modification or when a new server is added.

- You can now use the policies for load balancing and failover. See the policies api of the native driver for more information.

Upgrading

If you’re using an earlier version of cqlengine, there are a few caveats to upgrading.

No Downtime Database Migrations

Introduction

Back at my last job, we successfully migrated from MongoDB to Cassandra without any downtime. We did two webinars with DataStax at the time (I am now a DataStax employee). Our first webinar was a general overview of the migration. In the second, we covered some of the lessons we learned after being in production with Cassandra for a while. We touched on our migration process, but didn’t get deep into the details. This post will discuss the strategy, its goals, and what we learned along the way. The strategy applies to any database migration, and is not limited to moving between databases either.

Replace a dead node in Cassandra

Note (June 2020): this article is old and not really relevant anymore. If you use a modern version of Cassandra, look at the -Dcassandra.replace_address_first_boot option!

I want to share some tips from my experiments with Cassandra (version 2.0.x).

I found some documentation on the DataStax website about replacing a dead node, but it does not suit our needs: after a hardware crash, we set up a new node with exactly the same IP (an “in place” replacement). Update: the documentation is now up to date on the DataStax website!

If you try to start the new node with the same IP, Cassandra refuses to start with:

java.lang.RuntimeException: A node with address /10.20.10.2 already exists, cancelling join. Use cassandra.replace_address if you want to replace this node.

So we need to use the “cassandra.replace_address” directive (which is not really documented? :( ). See this commit and this bug report. It has been available since 1.2.11/2.0.0, and it’s an easier solution that works.

+ - New replace_address to supplant the (now removed) replace_token and

+ replace_node workflows to replace a dead node in place. Works like the

+ old options, but takes the IP address of the node to be replaced.

It’s a JVM directive, so we can add it at the end of /etc/cassandra/cassandra-env.sh (Debian package), for example:

JVM_OPTS="$JVM_OPTS -Dcassandra.replace_address=10.20.10.2"

Of course, 10.20.10.2 is the IP of your dead/new node.

Now start Cassandra, and in the logs you will see:

INFO [main] 2014-03-10 14:58:17,804 StorageService.java (line 941) JOINING: schema complete, ready to bootstrap

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: waiting for pending range calculation

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: calculation complete, ready to bootstrap

INFO [main] 2014-03-10 14:58:17,805 StorageService.java (line 941) JOINING: Replacing a node with token(s): [...]

[...]

INFO [main] 2014-03-10 14:58:17,844 StorageService.java (line 941) JOINING: Starting to bootstrap...

INFO [main] 2014-03-10 14:58:18,551 StreamResultFuture.java (line 82) [Stream #effef960-6efe-11e3-9a75-3f94ec5476e9] Executing streaming plan for Bootstrap

The node is now in bootstrapping mode and will retrieve its data from the cluster. This may take a long time.

If the node is a seed node, a warning will indicate that the node did not auto bootstrap. This is normal; you need to run a nodetool repair on the node.

On the new node:

# nodetool netstats

Mode: JOINING

Bootstrap effef960-6efe-11e3-9a75-3f94ec5476e9

/10.20.10.1

Receiving 102 files, 17467071157 bytes total

[...]
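If you want a rough idea of how much data the bootstrap has to stream, the `Receiving` line above can be parsed with a few lines of Python. This is only a small sketch: it assumes the line format shown in this output (other Cassandra versions may print it differently), and `parse_receiving` is a made-up helper name, not part of nodetool.

```python
import re

# Matches the summary line as it appears in the netstats output above.
RECEIVING = re.compile(r"Receiving (\d+) files, (\d+) bytes total")


def parse_receiving(line):
    """Return (file_count, total_bytes), or None if the line does not match."""
    match = RECEIVING.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


files, total = parse_receiving("Receiving 102 files, 17467071157 bytes total")
size_gib = total / 2**30  # bytes to GiB
print(f"{files} files, {size_gib:.1f} GiB to receive")
```

Running the same helper on each peer's `Receiving` line gives a quick estimate of how long the node will stay in JOINING mode.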

After some time, you will see more information in the logs!

On the new node:

INFO [STREAM-IN-/10.20.10.1] 2014-03-10 15:15:40,363 StreamResultFuture.java (line 215) [Stream #effef960-6efe-11e3-9a75-3f94ec5476e9] All sessions completed

INFO [main] 2014-03-10 15:15:40,366 StorageService.java (line 970) Bootstrap completed! for the tokens [...]

[...]

INFO [main] 2014-03-10 15:15:40,412 StorageService.java (line 1371) Node /10.20.10.2 state jump to normal

WARN [main] 2014-03-10 15:15:40,413 StorageService.java (line 1378) Not updating token metadata for /10.20.30.51 because I am replacing it

INFO [main] 2014-03-10 15:15:40,419 StorageService.java (line 821) Startup completed! Now serving reads.

And on the other nodes:

INFO [GossipStage:1] 2014-03-10 15:15:40,625 StorageService.java (line 1371) Node /10.20.10.2 state jump to normal

Et voilà, the dead node has been replaced!

Don’t forget to REMOVE the modifications to cassandra-env.sh once the bootstrap is complete!

Enjoy!

Cassandra FAQ: Can I start with a Single Node?

A question frequently asked on the mailing list by developers new to Cassandra is whether it’s possible to start with a single node and scale up as their needs grow. It seems to come most often from people familiar with MySQL, Mongo, or another database which uses replication to scale reads.

The short answer to this question is yes, you can absolutely run a one node cluster. However, it’s important to understand the caveats of doing so. Cassandra was built with the intention of running in a cluster. This means that several defaults which are reasonable for a cluster either aren’t practical or don’t apply with a single node.

What's new in cqlengine 0.7

Recently we released version 0.7 of cqlengine, the Python object mapper for CQL3. We’ve been steadily moving towards full support of all of CQL3 for both queries and for table configuration. This post will outline the new features and provide examples on how to use them.

Counters

With counter support finally included it’s now possible to create and use tables with counter columns. They are exposed to the Python application as simple integers, and changes to their values will be sent as deltas to Cassandra. Let’s take a look at an example. I’ll assume you already have Cassandra running locally.
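To make the delta idea concrete, here is a minimal sketch in plain Python. It does not use cqlengine and is not how cqlengine is implemented: the `CounterField` class, the `build_update` helper, and the `page_views` table with its `views` and `url` columns are all hypothetical names made up for illustration. It only shows how an integer change in the application can be turned into the `SET col = col + n` form that CQL uses for counter columns.

```python
class CounterField:
    """Holds an integer locally and remembers the change not yet saved."""

    def __init__(self, name, persisted=0):
        self.name = name
        self.persisted = persisted  # value last known to be stored
        self.value = persisted      # value the application reads and modifies

    def delta(self):
        return self.value - self.persisted

    def update_clause(self):
        """Return the CQL SET fragment for the pending change, or None."""
        change = self.delta()
        if change == 0:
            return None
        operator = "+" if change > 0 else "-"
        return f"{self.name} = {self.name} {operator} {abs(change)}"

    def mark_saved(self):
        self.persisted = self.value


def build_update(table, counter, key_column):
    """Build a parameterised counter UPDATE, or None if nothing changed."""
    clause = counter.update_clause()
    if clause is None:
        return None
    return f"UPDATE {table} SET {clause} WHERE {key_column} = %s"


views = CounterField("views", persisted=10)
views.value += 5  # the application just works with an integer
statement = build_update("page_views", views, "url")
print(statement)
```

Only the difference travels to the database, so two clients incrementing the same counter at the same time do not overwrite each other's changes.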

Cassandra, CQL3, and Time Series Data with timeuuid

Cassandra is a BigTable inspired database created at Facebook. It was open sourced several years ago and is now an Apache project.

In Cassandra, a row can be very wide and is identified by a key. Think of it as more like a giant array. The data is stored on disk sorted by the key you pick, meaning that if you pick the right key and sort order you can have some really fast queries. Here we’ll go over a time series.
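The "giant sorted array" picture can be sketched in plain Python. This is a mental model only, not how Cassandra works internally: the `WideRow` class and the sensor readings are hypothetical, and it uses plain `datetime` values rather than timeuuids to keep things short. Because the entries stay sorted by timestamp, a time range query becomes two binary searches and a slice.

```python
import bisect
from datetime import datetime


class WideRow:
    """One row whose entries are kept sorted by timestamp."""

    def __init__(self):
        self._times = []
        self._values = []

    def insert(self, ts, value):
        # Find the position that keeps the timestamps in order.
        index = bisect.bisect_right(self._times, ts)
        self._times.insert(index, ts)
        self._values.insert(index, value)

    def slice(self, start, end):
        """Return (timestamp, value) pairs with start <= timestamp < end."""
        lo = bisect.bisect_left(self._times, start)
        hi = bisect.bisect_left(self._times, end)
        return list(zip(self._times[lo:hi], self._values[lo:hi]))


rows = {}  # row key -> wide row
row = rows.setdefault("sensor-1", WideRow())
row.insert(datetime(2013, 1, 1, 12, 0), 20.5)
row.insert(datetime(2013, 1, 1, 10, 0), 19.0)
row.insert(datetime(2013, 1, 1, 11, 0), 19.8)

morning = row.slice(datetime(2013, 1, 1, 10, 0), datetime(2013, 1, 1, 12, 0))
for ts, reading in morning:
    print(ts.isoformat(), reading)
```

The readings were inserted out of order, but the slice comes back in time order without any sorting at query time, which is the property that makes time series queries fast.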

Setting up RAID0 in Ubuntu 12.04 in AWS High I/O

Amazon announced high I/O instances today. This is huge for anyone with a database larger than available memory, as it’s been a complete nightmare dealing with EBS up till now. Now your Cassandra, MongoDB, MySQL, or whatever you’re using should be able to perform well without you having to keep your entire dataset in memory.

With each instance you get 2x1TB of disk. In this tutorial I’ll be setting it up as a RAID0 to get a single 2TB disk which should deliver excellent performance.