Integrating support for AWS PrivateLink with Apache Cassandra® on the NetApp Instaclustr Managed Platform

Discover how NetApp Instaclustr leverages AWS PrivateLink for secure and seamless connectivity with Apache Cassandra®. This post explores the technical implementation, challenges faced, and the innovative solutions we developed to provide a robust, scalable platform for your data needs.

Last year, NetApp achieved a significant milestone by fully integrating AWS PrivateLink support for Apache Cassandra® into the NetApp Instaclustr Managed Platform. Read our AWS PrivateLink support for Apache Cassandra General Availability announcement here. Our Product Engineering team made remarkable progress in incorporating this feature into various NetApp Instaclustr application offerings. NetApp now offers AWS PrivateLink support as an Enterprise Feature add-on for the Instaclustr Managed Platform for Cassandra, Kafka®, OpenSearch®, Cadence®, and Valkey.

The journey to support AWS PrivateLink for Cassandra involved considerable engineering effort and numerous development cycles to create a solution tailored to the unique interaction between the Cassandra application and its client driver. After extensive development and testing, our product engineering team successfully implemented an enterprise-ready solution. Read on for detailed insights into the technical implementation of our solution.

What is AWS PrivateLink?

PrivateLink is a networking solution from AWS that provides private connectivity between Virtual Private Clouds (VPCs) without exposing any traffic to the public internet. This solution is ideal for customers who require a unidirectional network connection (often due to compliance concerns): connections can only be initiated from the source VPC to the managed cluster hosted in our platform (the target VPC), and not the other way around. Additionally, PrivateLink simplifies network management by eliminating the need to manage overlapping CIDRs between VPCs.

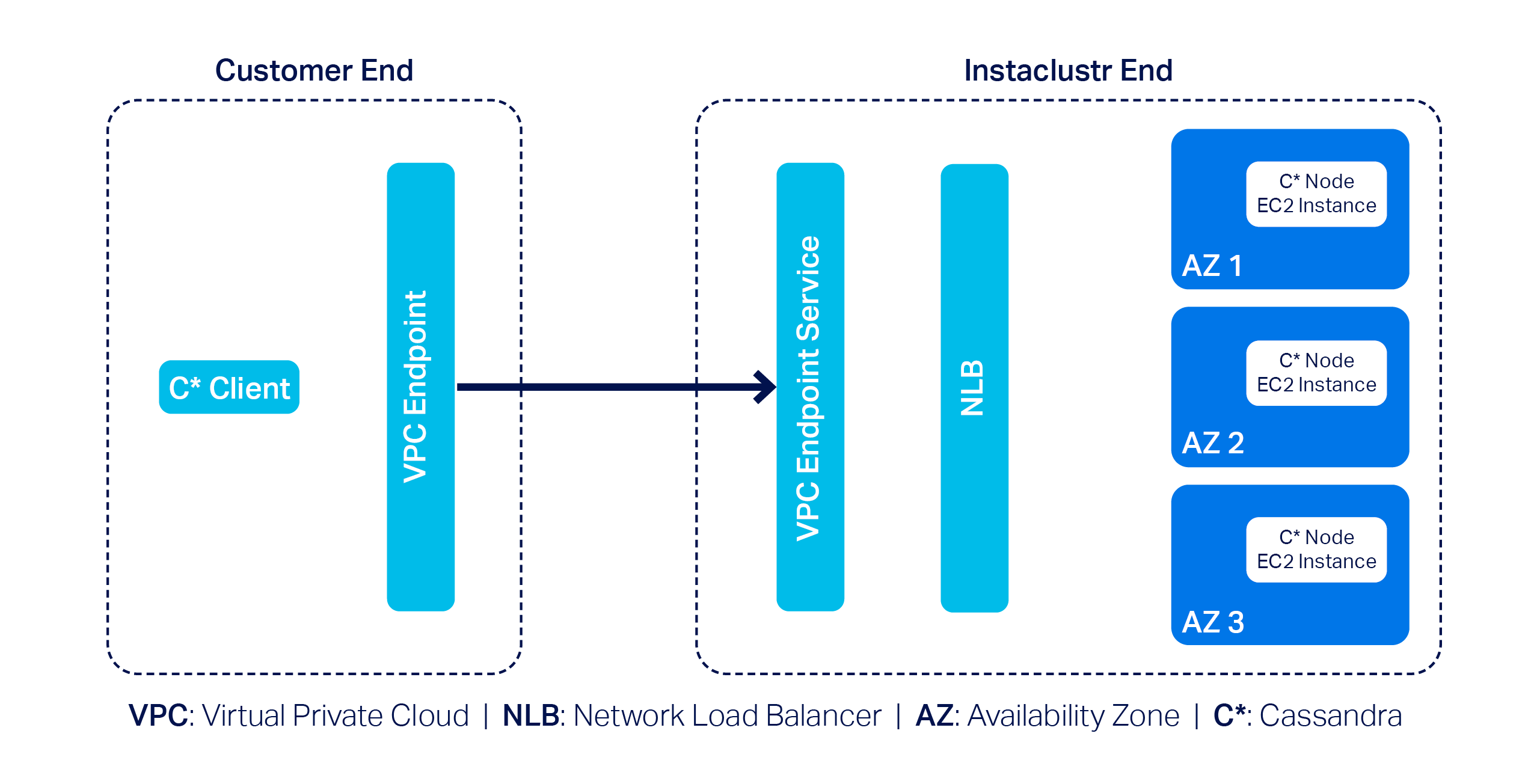

To get an idea of what major building blocks are involved in making up an end-to-end AWS PrivateLink solution for Cassandra, take a look at the following diagram—it’s a simplified representation of the infrastructure used to support a PrivateLink cluster:

In this example, we have a 3-node Cassandra cluster at the far right with one Cassandra node per Availability Zone (or AZ). Next, we have the VPC Endpoint Service and a Network Load Balancer (NLB). The Endpoint Service is essentially the AWS PrivateLink, and by design AWS needs it to be backed by an NLB–that’s pretty much what we have to manage on our side.

On the customer side, they must create a VPC Endpoint that enables them to privately connect to the AWS PrivateLink on our end; naturally, customers will also have to use one or more Cassandra clients to connect to the cluster.

AWS PrivateLink support with Instaclustr for Apache Cassandra

To incorporate AWS PrivateLink support with Instaclustr for Apache Cassandra on our platform, we came across a few technical challenges. First and foremost, the primary challenge was relatively straightforward: Cassandra clients need to talk to each individual node in a cluster.

However, the problem is that nodes in an AWS PrivateLink cluster are only assigned private IPs; that is what the nodes would announce by default when Cassandra clients attempt to discover the topology of the cluster. Cassandra clients cannot do much with the received private IPs as they cannot be used to connect to the nodes directly in an AWS PrivateLink setup.

We devised a plan of attack to get around this problem:

- Make each individual Cassandra node listen for CQL queries on unique ports.

- Configure the NLB so it can route traffic to the appropriate node based on the relevant unique port.

- Let clients implement the AddressTranslator interface from the Cassandra driver. The custom address translator will need to translate the received private IPs to one of the VPC Endpoint Elastic Network Interface (or ENI) IPs without altering the corresponding unique ports.

To understand this approach better, consider the following example:

Suppose we have a 3-node Cassandra cluster. According to the proposed approach, we will need to do the following:

- Let the nodes listen on 172.16.0.1:6001 (in AZ1), 172.16.0.2:6002 (in AZ2) and 172.16.0.3:6003 (in AZ3)

- Configure the NLB to listen on the same set of ports

- Define and associate target groups based on the port. For instance, the listener on port 6002 will be associated with a target group containing only the node that is listening on port 6002.

- As for how the custom address translator is expected to work, let’s assume the VPC Endpoint ENI IPs are 192.168.0.1 (in AZ1), 192.168.0.2 (in AZ2) and 192.168.0.3 (in AZ3). The address translator should translate received addresses like so:

  - 172.16.0.1:6001 --> 192.168.0.1:6001
  - 172.16.0.2:6002 --> 192.168.0.2:6002
  - 172.16.0.3:6003 --> 192.168.0.3:6003
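The translation step in this example can be sketched in a few lines of Python. This is only an illustration of the mapping logic, not the driver's AddressTranslator interface itself; the `NODE_AZ` and `ENI_BY_AZ` tables and the `translate` function are hypothetical names that hard-code the addresses from the example.

```python
# Hypothetical lookup tables built from the example addresses above.
# NODE_AZ: which AZ each private node IP lives in.
# ENI_BY_AZ: the VPC Endpoint ENI IP the client can reach in that AZ.
NODE_AZ = {"172.16.0.1": "AZ1", "172.16.0.2": "AZ2", "172.16.0.3": "AZ3"}
ENI_BY_AZ = {"AZ1": "192.168.0.1", "AZ2": "192.168.0.2", "AZ3": "192.168.0.3"}


def translate(private_ip: str, port: int) -> tuple[str, int]:
    """Swap the node's private IP for an ENI IP, leaving the unique port untouched."""
    eni_ip = ENI_BY_AZ[NODE_AZ[private_ip]]
    return eni_ip, port


for ip, port in [("172.16.0.1", 6001), ("172.16.0.2", 6002), ("172.16.0.3", 6003)]:
    new_ip, new_port = translate(ip, port)
    print(f"{ip}:{port} --> {new_ip}:{new_port}")
```

Because the port is preserved, the NLB listener on that port can still route the connection to the one node behind it.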

The proposed approach not only solves the connectivity problem but also allows for connecting to appropriate nodes based on query plans generated by load balancing policies.

Around the same time, we came up with a slightly modified approach as well: we realized the need for address translation can be mostly mitigated if we make the Cassandra nodes return the VPC Endpoint ENI IPs in the first place.

But the excitement did not last for long! Why? Because we quickly discovered a key problem: there is a limit to the number of listeners that can be added to any given AWS NLB of just 50.

While 50 is certainly a decent limit, the way we designed our solution meant we wouldn’t be able to provision a cluster with more than 50 nodes. This was quickly deemed to be an unacceptable limitation as it is not uncommon for a cluster to have more than 50 nodes; many Cassandra clusters in our fleet have hundreds of nodes. We had to abandon the idea of address translation and start thinking about alternative approaches.

Introducing Shotover Proxy

We were disappointed but did not lose hope. Soon after, we devised a practical solution centred around using one of our open source products: Shotover Proxy.

Shotover Proxy is used with Cassandra clusters to support AWS PrivateLink on the Instaclustr Managed Platform. What is Shotover Proxy, you ask? Shotover is a layer 7 database proxy built to allow developers, admins, DBAs, and operators to modify in-flight database requests. By managing database requests in transit, Shotover gives NetApp Instaclustr customers AWS PrivateLink’s simple and secure network setup with the many benefits of Cassandra.

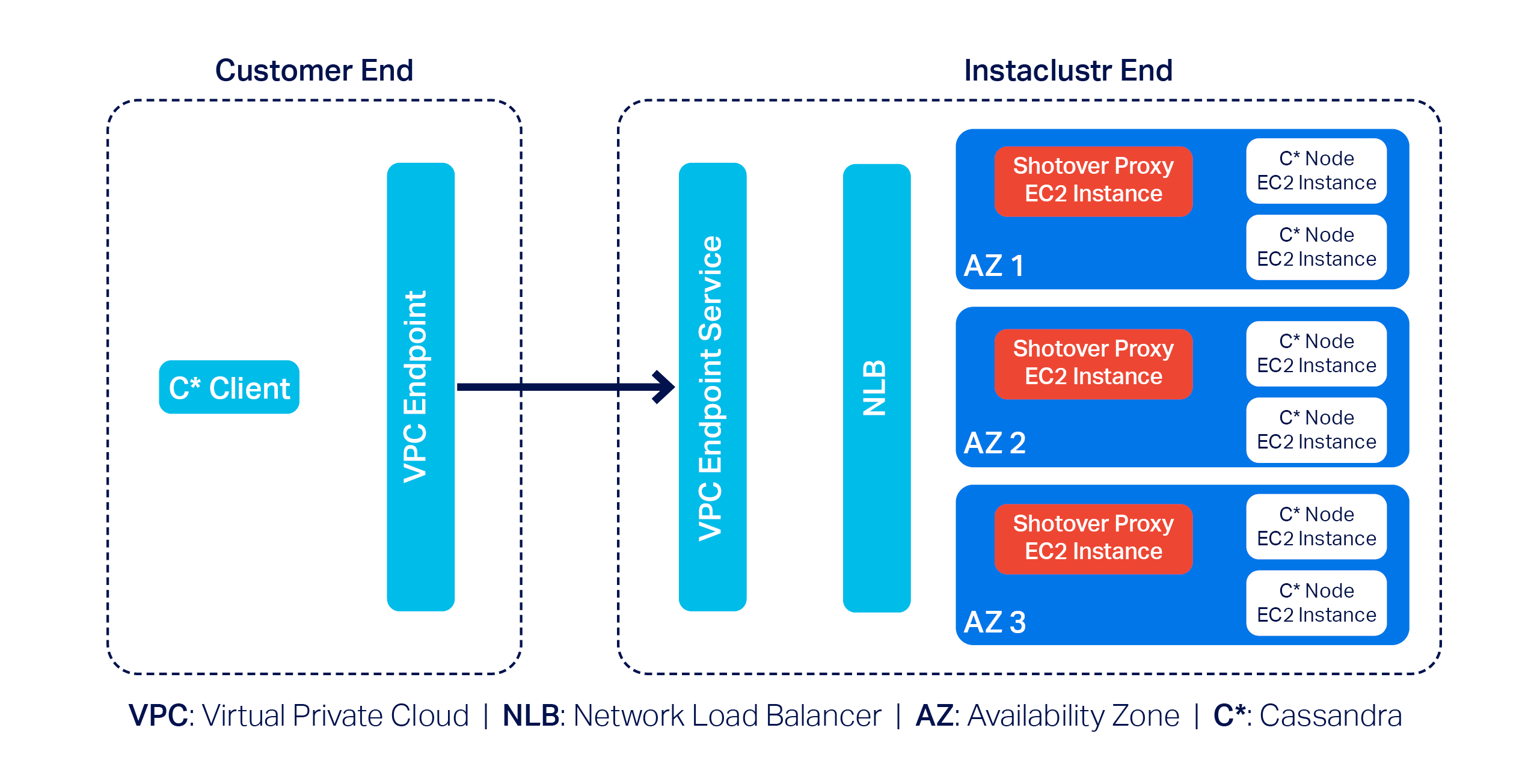

Below is an updated version of the previous diagram that introduces some Shotover nodes in the mix:

As you can see, each AZ now has a dedicated Shotover proxy node.

In the above diagram, we have a 6-node Cassandra cluster. The Cassandra cluster sitting behind the Shotover nodes is an ordinary Private Network Cluster. The role of the Shotover nodes is to manage client requests to the Cassandra nodes while masking the real Cassandra nodes behind them. To the Cassandra client, the Shotover nodes appear to be Cassandra nodes, and it is only them that make up the entire cluster! This is the secret recipe for AWS PrivateLink for Instaclustr for Apache Cassandra that enabled us to get past the challenges discussed earlier.

So how is this model made to work?

Shotover can alter certain requests from, and responses to, the client. Each Shotover node can examine the tokens allocated to the Cassandra nodes in its own AZ (aka rack) and claim to be the owner of all those tokens. This essentially makes each Shotover node appear to be an aggregation of the Cassandra nodes in its own rack.

Given the purposely crafted topology and token allocation metadata, while the client directs queries to the Shotover node, the Shotover node in turn can pass them on to the appropriate Cassandra node and then transparently send responses back. It is worth noting that the Shotover nodes themselves do not store any data.
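To make the token aggregation idea concrete, here is a minimal Python sketch. It is not Shotover's implementation; the node names, token values, and the `tokens_by_rack` helper are all hypothetical, and it only shows how one proxy per rack could advertise the union of the tokens owned by the real nodes in that rack.

```python
from collections import defaultdict

# Hypothetical 6-node cluster: (node name, rack/AZ, tokens owned by that node).
NODES = [
    ("cass-1", "AZ1", [-9000, 100]),
    ("cass-2", "AZ1", [-4000, 5000]),
    ("cass-3", "AZ2", [-8000, 1000]),
    ("cass-4", "AZ2", [-3000, 6000]),
    ("cass-5", "AZ3", [-7000, 2000]),
    ("cass-6", "AZ3", [-2000, 7000]),
]


def tokens_by_rack(nodes):
    """Return, per rack, the sorted union of tokens owned by the nodes in that rack."""
    claimed = defaultdict(list)
    for _name, rack, tokens in nodes:
        claimed[rack].extend(tokens)
    return {rack: sorted(tokens) for rack, tokens in claimed.items()}


# Each rack's proxy would present this token list to the client as its own.
for rack, tokens in tokens_by_rack(NODES).items():
    print(rack, tokens)
```

From the client's point of view the cluster then has three members, one per rack, even though six Cassandra nodes hold the data.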

Because we only have 1 Shotover node per AZ in this design and there may be at most about 5 AZs per region, we only need that many listeners in the NLB to make this mechanism work. As such, the 50-listener limit on the NLB was no longer a problem.
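The listener arithmetic behind this can be written down as a short Python sketch. The function name and the sample cluster sizes are illustrative assumptions; the 50-listener limit is the figure cited in this post.

```python
NLB_LISTENER_LIMIT = 50  # limit cited in this post


def listeners_needed(node_count: int, az_count: int, use_shotover: bool) -> int:
    """One listener per Cassandra node without Shotover, one per AZ with it."""
    return az_count if use_shotover else node_count


for nodes in (3, 60, 300):
    direct = listeners_needed(nodes, az_count=3, use_shotover=False)
    proxied = listeners_needed(nodes, az_count=3, use_shotover=True)
    print(
        f"{nodes} nodes: port-per-node fits={direct <= NLB_LISTENER_LIMIT}, "
        f"shotover-per-AZ fits={proxied <= NLB_LISTENER_LIMIT}"
    )
```

With one proxy per AZ, the listener count depends only on the number of AZs, so cluster size no longer matters for the NLB.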

The use of Shotover to manage client driver and cluster interoperability may sound straightforward to implement, but developing it was a year-long undertaking. As described above, the initial months of development were devoted to engineering CQL queries on unique ports and the AddressTranslator interface from the Cassandra driver to gracefully manage client connections to the Cassandra cluster. While this solution did successfully provide support for AWS PrivateLink with a Cassandra cluster, we knew that the 50-listener limit on the NLB was a barrier to use and wanted to provide our customers with a solution that could be used for any Cassandra cluster, regardless of node count.

The next few months of engineering were devoted to a Proof of Concept of an alternative solution, with the goal of investigating how Shotover could manage client requests for a Cassandra cluster with any number of nodes. Once that approach was successfully proven, subsequent effort went into stability testing the new solution; the result of that engineering is the stable solution described above.

We have also conducted performance testing to evaluate the relative performance of a PrivateLink-enabled Cassandra cluster compared to its non-PrivateLink counterpart. Multiple iterations of performance testing were executed, as test cases identified adjustments to Shotover; with those adjustments, the throughput and latency of the PrivateLink-enabled Cassandra cluster measured close to those of a standard Cassandra cluster.

Related content: Read more about creating an AWS PrivateLink-enabled Cassandra cluster on the Instaclustr Managed Platform

The following was our experimental setup for identifying the maximum throughput, in operations per second, of a PrivateLink-enabled Cassandra cluster in comparison to a non-PrivateLink Cassandra cluster:

- Baseline node size: i3en.xlarge

- Shotover Proxy node size on Cassandra Cluster: CSO-PRD-c6gd.medium-54

- Cassandra version: 4.1.3

- Shotover Proxy version: 0.2.0

- Other configuration: Repair and backup disabled, Client Encryption disabled

Across different cluster sizes, we observed no significant difference in operation throughput between PrivateLink and non-PrivateLink configurations.

Latency results

Latency benchmarks were conducted at ~70% of the observed peak throughput (as above) to simulate realistic production traffic.

| Operation | Ops/second | Setup | Mean Latency (ms) | Median Latency (ms) | P95 Latency (ms) | P99 Latency (ms) |
| --- | --- | --- | --- | --- | --- | --- |
| Mixed-small (3 Nodes) | 11630 | Non-PrivateLink | 9.90 | 3.2 | 53.7 | 119.4 |
| Mixed-small (3 Nodes) | 11630 | PrivateLink | 9.50 | 3.6 | 48.4 | 118.8 |
| Mixed-small (6 Nodes) | 23510 | Non-PrivateLink | 6 | 2.3 | 27.2 | 79.4 |
| Mixed-small (6 Nodes) | 23510 | PrivateLink | 9.10 | 3.4 | 45.4 | 104.9 |
| Mixed-small (9 Nodes) | 36255 | Non-PrivateLink | 5.5 | 2.4 | 21.8 | 67.6 |
| Mixed-small (9 Nodes) | 36255 | PrivateLink | 11.9 | 2.7 | 77.1 | 141.2 |

Results indicate that for lower to mid-tier throughput levels, AWS PrivateLink introduced minimal to negligible overhead. However, at higher operation rates, we observed increased latency, most notably at the p99 mark, likely due to network-level factors or Shotover.
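One way to read these numbers is as a relative change in p99 latency per cluster size. The short Python sketch below does that arithmetic on the figures reported above; the `LATENCY_MS` table and the `p99_change_percent` helper are illustrative names, not part of any benchmarking tool.

```python
# (cluster size, setup) -> (mean, median, p95, p99) latency in ms, copied from the results above.
LATENCY_MS = {
    (3, "Non-PrivateLink"): (9.90, 3.2, 53.7, 119.4),
    (3, "PrivateLink"): (9.50, 3.6, 48.4, 118.8),
    (6, "Non-PrivateLink"): (6.0, 2.3, 27.2, 79.4),
    (6, "PrivateLink"): (9.10, 3.4, 45.4, 104.9),
    (9, "Non-PrivateLink"): (5.5, 2.4, 21.8, 67.6),
    (9, "PrivateLink"): (11.9, 2.7, 77.1, 141.2),
}


def p99_change_percent(nodes: int) -> float:
    """Relative p99 change of PrivateLink versus non-PrivateLink, in percent."""
    baseline = LATENCY_MS[(nodes, "Non-PrivateLink")][3]
    private_link = LATENCY_MS[(nodes, "PrivateLink")][3]
    return (private_link - baseline) / baseline * 100


for nodes in (3, 6, 9):
    print(f"{nodes} nodes: p99 change {p99_change_percent(nodes):+.1f}%")
```

The same helper can be pointed at the mean or p95 column by changing the tuple index.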

The increase in latency is expected as AWS PrivateLink introduces an additional hop to route traffic securely, which can impact latencies, particularly under heavy load. For the vast majority of applications, the observed latencies remain within acceptable ranges. However, for latency-sensitive workloads, we recommend adding more nodes (for high load cases) to help mitigate the impact of the additional network hop introduced by PrivateLink.

As with any generic benchmarking results, performance may vary depending on specific data model, workload characteristics, and environment. The results presented here are based on specific experimental setup using standard configurations and should primarily be used to compare the relative performance of PrivateLink vs. Non-PrivateLink networking under similar conditions.

Why choose AWS PrivateLink with NetApp Instaclustr?

NetApp’s commitment to innovation means you benefit from cutting-edge technology combined with ease of use. With AWS PrivateLink support on our platform, customers gain:

- Enhanced security: All traffic stays private, never touching the internet.

- Simplified networking: No need to manage complex CIDR overlaps.

- Enterprise scalability: Handles sizable clusters effortlessly.

By addressing challenges, such as the NLB listener cap and private-to-VPC IP translation, we’ve created a solution that balances efficiency, security, and scalability.

Experience PrivateLink today

The integration of AWS PrivateLink with Apache Cassandra® is now generally available with production-ready SLAs for our customers. Log in to the Console to create a Cassandra cluster with support for AWS PrivateLink with just a few clicks today. Whether you’re managing sensitive workloads or demanding performance at scale, this feature delivers unmatched value.

Want to see it in action? Book a free demo today and experience the Shotover-powered magic of AWS PrivateLink firsthand.

Resources

- Getting started: Visit the documentation to learn how to create an AWS PrivateLink-enabled Apache Cassandra cluster on the Instaclustr Managed Platform.

- Connecting clients: Already created a Cassandra cluster with AWS PrivateLink? Click here to read about how to connect Cassandra clients in one VPC to an AWS PrivateLink-enabled Cassandra cluster on the Instaclustr Platform.

- General availability announcement: For more details, read our General Availability announcement on AWS PrivateLink support for Cassandra.

The post Integrating support for AWS PrivateLink with Apache Cassandra® on the NetApp Instaclustr Managed Platform appeared first on Instaclustr.

Compaction Strategies, Performance, and Their Impact on Cassandra Node Density

This is the third post in my series on optimizing Apache Cassandra for maximum cost efficiency through increased node density. In the first post, I examined how streaming operations impact node density and laid out the groundwork for understanding why higher node density leads to significant cost savings. In the second post, I discussed how compaction throughput is critical to node density and introduced the optimizations we implemented in CASSANDRA-15452 to improve throughput on disaggregated storage like EBS.

Cassandra Compaction Throughput Performance Explained

This is the second post in my series on improving node density and lowering costs with Apache Cassandra. In the previous post, I examined how streaming performance impacts node density and operational costs. In this post, I’ll focus on compaction throughput, and a recent optimization in Cassandra 5.0.4 that significantly improves it, CASSANDRA-15452.

This post assumes some familiarity with Apache Cassandra storage engine fundamentals. The documentation has a nice section covering the storage engine if you’d like to brush up before reading this post.

CEP-24 Behind the scenes: Developing Apache Cassandra®’s password validator and generator

Introduction: The need for an Apache Cassandra® password validator and generator

Here’s the problem: while users have always had the ability to create whatever password they wanted in Cassandra–from straightforward to incredibly complex and everything in between–this ultimately created a noticeable security vulnerability.

While organizations might have internal processes for generating secure passwords that adhere to their own security policies, Cassandra itself did not have the means to enforce these standards. To make the security vulnerability worse, if a password initially met internal security guidelines, users could later downgrade their password to a less secure option simply by using “ALTER ROLE” statements.

When internal password requirements are enforced for an individual, users face the additional burden of creating compliant passwords. This inevitably involves lots of trial and error in attempting to create a compliant password that satisfies complex security rules.

But what if there was a way to have Cassandra automatically create passwords that meet all bespoke security requirements–but without requiring manual effort from users or system operators?

That’s why we developed CEP-24: Password validation/generation. We recognized that the complexity of secure password management could be significantly reduced (or eliminated entirely) with the right approach, improving both security and user experience at the same time.

The Goals of CEP-24

A Cassandra Enhancement Proposal (or CEP) is a structured process for proposing, creating, and ultimately implementing new features for the Cassandra project. All CEPs are thoroughly vetted among the Cassandra community before they are officially integrated into the project.

These were the key goals we established for CEP-24:

- Introduce a way to enforce password strength upon role creation or role alteration.

- Implement a reference implementation of a password validator which adheres to a recommended password strength policy, to be used for Cassandra users out of the box.

- Emit a warning (and proceed) or just reject “create role” and “alter role” statements when the provided password does not meet a certain security level, based on user configuration of Cassandra.

- Allow a custom password validator with its own policy, whatever it might be, to be implemented, and provide a modular/pluggable mechanism to do so.

- Provide a way for Cassandra to generate a password which would pass the subsequent validation for use by the user.

The Cassandra Password Validator and Generator builds upon an established framework in Cassandra called Guardrails, which was originally implemented under CEP-3 (more details here).

The password validator implements a custom guardrail introduced as part of CEP-24. A custom guardrail can validate and generate values of arbitrary types when properly implemented. In the CEP-24 context, the password guardrail provides CassandraPasswordValidator by extending ValueValidator, while passwords are generated by CassandraPasswordGenerator by extending ValueGenerator. Both components work with passwords as String type values.

Password validation and generation are configured in the cassandra.yaml file under the password_validator section. Let’s explore the key configuration properties available. First, the class_name and generator_class_name parameters specify which validator and generator classes will be used to validate and generate passwords respectively.

Cassandra ships CassandraPasswordValidator and CassandraPasswordGenerator out of the box. However, if a particular enterprise decides that they need something very custom, they are free to implement their own validator, put it on Cassandra’s class path and reference it in the configuration via the class_name parameter. The same applies to the generator and the generator_class_name parameter.

CEP-24 provides implementations of the validator and generator that the Cassandra team believes will satisfy the requirements of most users. These default implementations address common password security needs. However, the framework is designed with flexibility in mind, allowing organizations to implement custom validation and generation rules that align with their specific security policies and business requirements.

    password_validator:
      # Implementation class of a validator. When not in form of FQCN, the
      # package name org.apache.cassandra.db.guardrails.validators is prepended.
      # By default, there is no validator.
      class_name: CassandraPasswordValidator
      # Implementation class of related generator which generates values which are valid when
      # tested against this validator. When not in form of FQCN, the
      # package name org.apache.cassandra.db.guardrails.generators is prepended.
      # By default, there is no generator.
      generator_class_name: CassandraPasswordGenerator

Password quality might be looked at as the number of characteristics a password satisfies. There are two levels for any password to be evaluated – warning level and failure level. Warning and failure levels nicely fit into how Guardrails act. Every guardrail has warning and failure thresholds. Based on what value a specific guardrail evaluates, it will either emit a warning to a user that its usage is discouraged (but ultimately allowed) or it will fail to be set altogether.

This same principle applies to password evaluation – each password is assessed against both warning and failure thresholds. These thresholds are determined by counting the characteristics present in the password. The system evaluates five key characteristics: the password’s overall length, the number of uppercase characters, the number of lowercase characters, the number of special characters, and the number of digits. A comprehensive password security policy can be enforced by configuring minimum requirements for each of these characteristics.

    # There are four characteristics:
    # upper-case, lower-case, special character and digit.
    # If this value is set e.g. to 3, a password has to
    # consist of 3 out of 4 characteristics.
    # For example, it has to contain at least 2 upper-case characters,
    # 2 lower-case, and 2 digits to pass,
    # but it does not have to contain any special characters.
    # If the number of characteristics found in the password is
    # less than or equal to this number, it will emit a warning.
    characteristic_warn: 3
    # If the number of characteristics found in the password is
    # less than or equal to this number, it will emit a failure.
    characteristic_fail: 2

Next, there are configuration parameters for each characteristic which count towards warning or failure:

    # If the password is shorter than this value,
    # the validator will emit a warning.
    length_warn: 12
    # If a password is shorter than this value,
    # the validator will emit a failure.
    length_fail: 8
    # If a password does not contain at least n
    # upper-case characters, the validator will emit a warning.
    upper_case_warn: 2
    # If a password does not contain at least
    # n upper-case characters, the validator will emit a failure.
    upper_case_fail: 1
    # If a password does not contain at least
    # n lower-case characters, the validator will emit a warning.
    lower_case_warn: 2
    # If a password does not contain at least
    # n lower-case characters, the validator will emit a failure.
    lower_case_fail: 1
    # If a password does not contain at least
    # n digits, the validator will emit a warning.
    digit_warn: 2
    # If a password does not contain at least
    # n digits, the validator will emit a failure.
    digit_fail: 1
    # If a password does not contain at least
    # n special characters, the validator will emit a warning.
    special_warn: 2
    # If a password does not contain at least
    # n special characters, the validator will emit a failure.
    special_fail: 1
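To see how the warning and failure thresholds interact, here is a simplified Python sketch. It is not Cassandra's validator: the `WARN`/`FAIL` dictionaries, the helper names, and the use of `string.punctuation` as the set of special characters are assumptions made for illustration, and the dictionary and illegal-sequence checks are left out.

```python
import string

# Thresholds copied from the example configuration above.
WARN = {"length": 12, "upper": 2, "lower": 2, "digit": 2, "special": 2, "characteristics": 3}
FAIL = {"length": 8, "upper": 1, "lower": 1, "digit": 1, "special": 1, "characteristics": 2}


def count_characteristics(password: str) -> dict:
    """Count how many characters of each kind the password contains."""
    return {
        "upper": sum(c.isupper() for c in password),
        "lower": sum(c.islower() for c in password),
        "digit": sum(c.isdigit() for c in password),
        # Assumption: "special" means ASCII punctuation in this sketch.
        "special": sum(c in string.punctuation for c in password),
    }


def violates(password: str, level: dict) -> bool:
    """True if the password is too short or meets too few characteristics for this level."""
    counts = count_characteristics(password)
    met = sum(counts[name] >= level[name] for name in counts)
    return len(password) < level["length"] or met <= level["characteristics"]


def evaluate(password: str) -> str:
    """Check the failure level first, then the warning level."""
    if violates(password, FAIL):
        return "fail"
    if violates(password, WARN):
        return "warn"
    return "ok"


for candidate in ("T8aum3?", "mYAtt3mp", "R7tb33?.mcAX"):
    print(candidate, evaluate(candidate))
```

The failure level is checked before the warning level, so a password that is rejected outright never produces a warning as well.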

It is also possible to say that illegal sequences of certain length found in a password will be forbidden:

    # If a password contains illegal sequences that are at least this long, it is invalid.
    # Illegal sequences might be either alphabetical (form 'abcde'),
    # numerical (form '34567'), or US qwerty (form 'asdfg') as well
    # as sequences from supported character sets.
    # The minimum value for this property is 3,
    # by default it is set to 5.
    illegal_sequence_length: 5
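A rough Python sketch of such a sequence check follows. It is a simplification rather than the validator's actual logic: the character sources and the `has_illegal_sequence` function are assumptions, and it only looks for forward runs, ignoring reversed or wrap-around sequences.

```python
# Assumed character sources for this sketch: alphabet, digits, US qwerty rows.
ALPHABET = "abcdefghijklmnopqrstuvwxyz"
DIGITS = "0123456789"
QWERTY_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]


def has_illegal_sequence(password: str, min_length: int = 5) -> bool:
    """True if the password contains a run of min_length consecutive source characters."""
    lowered = password.lower()
    for source in [ALPHABET, DIGITS, *QWERTY_ROWS]:
        # Slide a window of min_length over each source and look for it in the password.
        for start in range(len(source) - min_length + 1):
            if source[start:start + min_length] in lowered:
                return True
    return False


for candidate in ("xxabcdexx", "pin34567", "Asdfg!1", "R7tb33?.mcAX"):
    print(candidate, has_illegal_sequence(candidate))
```

Checking only windows of exactly `min_length` is enough, since any longer run contains a run of that length.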

Lastly, it is also possible to configure a dictionary of passwords to check against. That way, we will be checking against password dictionary attacks. It is up to the operator of a cluster to configure the password dictionary:

    # Dictionary to check the passwords against. Defaults to no dictionary.
    # Whole dictionary is cached into memory. Use with caution with relatively big dictionaries.
    # Entries in a dictionary, one per line, have to be sorted per String's compareTo contract.
    dictionary: /path/to/dictionary/file
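A sorted dictionary makes fast lookups possible. The Python sketch below illustrates one plausible reason for the sorting requirement, a binary search over an in-memory list; it is not taken from Cassandra's code, and the function names and sample entries are hypothetical. Python compares strings by code point, which is assumed here to match Java's String.compareTo ordering for the entries involved.

```python
from bisect import bisect_left


def load_dictionary(lines):
    """Keep all entries in memory; they are expected to be sorted already."""
    words = [line.strip() for line in lines if line.strip()]
    if words != sorted(words):
        raise ValueError("dictionary entries must be sorted")
    return words


def in_dictionary(words, password: str) -> bool:
    """Binary search for an exact match in the sorted entries."""
    index = bisect_left(words, password)
    return index < len(words) and words[index] == password


# Hypothetical dictionary content, one entry per line as in the file format described above.
words = load_dictionary(["cassandraisadatabase\n", "letmein\n", "password\n"])
print(in_dictionary(words, "cassandraisadatabase"))
print(in_dictionary(words, "T8aum3?"))
```

In a real deployment the lines would come from the configured dictionary file instead of an inline list.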

Now that we have gone over all the configuration parameters, let’s take a look at an example of how password validation and generation look in practice.

Consider a scenario where a Cassandra super-user (such as the default ‘cassandra’ role) attempts to create a new role named ‘alice’.

    cassandra@cqlsh> CREATE ROLE alice WITH PASSWORD = 'cassandraisadatabase' AND LOGIN = true;
    InvalidRequest: Error from server: code=2200 [Invalid query] message="Password was not set as it violated configured password strength policy. To fix this error, the following has to be resolved: Password contains the dictionary word 'cassandraisadatabase'. You may also use 'GENERATED PASSWORD' upon role creation or alteration."

The password is found in the dictionary, so it is rejected. The operator tries a different password:

    cassandra@cqlsh> CREATE ROLE alice WITH PASSWORD = 'T8aum3?' AND LOGIN = true;
    InvalidRequest: Error from server: code=2200 [Invalid query] message="Password was not set as it violated configured password strength policy. To fix this error, the following has to be resolved: Password must be 8 or more characters in length. You may also use 'GENERATED PASSWORD' upon role creation or alteration."

This password is not found in the dictionary, but it is not long enough. When an operator sees this, they will try to fix it by making the password longer:

    cassandra@cqlsh> CREATE ROLE alice WITH PASSWORD = 'mYAtt3mp' AND LOGIN = true;

    Warnings:
    Guardrail password violated: Password was set, however it might not be strong enough according to the configured password strength policy. To fix this warning, the following has to be resolved: Password must be 12 or more characters in length. Passwords must contain 2 or more digit characters. Password must contain 2 or more special characters. Password matches 2 of 4 character rules, but 4 are required. You may also use 'GENERATED PASSWORD' upon role creation or alteration.

The password is finally set, but it is not completely secure. It satisfies the minimum requirements but our validator identified that not all characteristics were met.

When an operator sees this, they will notice the note about the ‘GENERATED PASSWORD’ clause, which generates a password automatically so the operator does not need to invent one. As shown above, inventing a compliant password by hand is often a cumbersome process that is better left to a machine, which is also more efficient and reliable.

    cassandra@cqlsh> ALTER ROLE alice WITH GENERATED PASSWORD;

    generated_password
    ------------------
    R7tb33?.mcAX

The generated password shown above will satisfy all the rules we have configured in the cassandra.yaml automatically. Every generated password will satisfy all of the rules. This is clearly an advantage over manual password generation.
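The general idea of generating a password that satisfies per-characteristic minimums can be sketched in Python as follows. This is an illustration only, not CassandraPasswordGenerator: the `generate_password` function and its defaults (taken from the warning thresholds in the example configuration) are assumptions, and the sketch does not re-check the result against a dictionary or illegal sequences as a complete generator would need to.

```python
import secrets
import string


def generate_password(length=12, upper=2, lower=2, digit=2, special=2):
    """Build a random password that meets each per-characteristic minimum."""
    pools = {
        string.ascii_uppercase: upper,
        string.ascii_lowercase: lower,
        string.digits: digit,
        string.punctuation: special,  # assumption: ASCII punctuation as special characters
    }
    if length < sum(pools.values()):
        raise ValueError("length is too short for the required characteristics")
    # First satisfy every minimum, then fill the rest from all pools combined.
    chars = [secrets.choice(pool) for pool, n in pools.items() for _ in range(n)]
    everything = "".join(pools)
    chars += [secrets.choice(everything) for _ in range(length - len(chars))]
    # Shuffle with a cryptographically secure source so the layout is not predictable.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)


print(generate_password())
```

Drawing the required characters first guarantees the minimums by construction, which is why no retry loop is needed for those rules.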

When the CQL statement is executed, it will be visible in the CQLSH history (HISTORY command or in cqlsh_history file) but the password will not be logged, hence it cannot leak. It will also not appear in any auditing logs. Previously, Cassandra had to obfuscate such statements. This is not necessary anymore.

We can create a role with generated password like this:

    cassandra@cqlsh> CREATE ROLE alice WITH GENERATED PASSWORD AND LOGIN = true;

or by CREATE USER:

    cassandra@cqlsh> CREATE USER alice WITH GENERATED PASSWORD;

When a password is generated for alice (out of scope of this documentation), she can log in:

    $ cqlsh -u alice -p R7tb33?.mcAX
    ...
    alice@cqlsh>

Note: It is recommended to save the password to ~/.cassandra/credentials, for example:

    [PlainTextAuthProvider]
    username = cassandra
    password = R7tb33?.mcAX

and to set auth_provider in ~/.cassandra/cqlshrc:

    [auth_provider]
    module = cassandra.auth
    classname = PlainTextAuthProvider

It is also possible to configure password validators in such a way that a user does not see why a password failed. This is driven by configuration property for password_validator called detailed_messages. When set to false, the violations will be very brief:

    alice@cqlsh> ALTER ROLE alice WITH PASSWORD = 'myattempt';
    InvalidRequest: Error from server: code=2200 [Invalid query] message="Password was not set as it violated configured password strength policy. You may also use 'GENERATED PASSWORD' upon role creation or alteration."

The following command will automatically generate a new password that meets all configured security requirements.

    alice@cqlsh> ALTER ROLE alice WITH GENERATED PASSWORD;

Several potential enhancements to password generation and validation could be implemented in future releases. One promising extension would be validating new passwords against previous values. This would prevent users from reusing passwords until after they’ve created a specified number of different passwords. A related enhancement could include restricting how frequently users can change their passwords, preventing rapid cycling through passwords to circumvent history-based restrictions.

These features, while valuable for comprehensive password security, were considered beyond the scope of the initial implementation and may be addressed in future updates.

Final thoughts and next steps

The Cassandra Password Validator and Generator implemented under CEP-24 represents a significant improvement in Cassandra’s security posture.

By providing robust, configurable password policies with built-in enforcement mechanisms and convenient password generation capabilities, organizations can now ensure compliance with their security standards directly at the database level. This not only strengthens overall system security but also improves the user experience by eliminating guesswork around password requirements.

As Cassandra continues to evolve as an enterprise-ready database solution, these security enhancements demonstrate a commitment to meeting the demanding security requirements of modern applications while maintaining the flexibility that makes Cassandra so powerful.

Ready to experience CEP-24 yourself? Try it out on the Instaclustr Managed Platform and spin up your first Cassandra cluster for free.

CEP-24 is just our latest contribution to open source. Check out everything else we’re working on here.

The post CEP-24 Behind the scenes: Developing Apache Cassandra®’s password validator and generator appeared first on Instaclustr.

How Cassandra Streaming, Performance, Node Density, and Cost are All related

This is the first post of several I have planned on optimizing Apache Cassandra for maximum cost efficiency. I’ve spent over a decade working with Cassandra and have spent tens of thousands of hours data modeling, fixing issues, writing tools for it, and analyzing its performance. I’ve always been fascinated by database performance tuning, even before Cassandra.

A decade ago I filed one of my first issues with the project, where I laid out my target goal of 20TB of data per node. This wasn’t possible for most workloads at the time, but I’ve kept this target in my sights.

Cassandra 5 Released! What's New and How to Try it

Apache Cassandra 5.0 has officially landed! This highly anticipated release brings a range of new features and performance improvements to one of the most popular NoSQL databases in the world. Having recently hosted a webinar covering the major features of Cassandra 5.0, I’m excited to give a brief overview of the key updates and show you how to easily get hands-on with the latest release using easy-cass-lab.

You can grab the latest release on the Cassandra download page.

easy-cass-lab v5 released

I’ve got some fun news to start the week off for users of easy-cass-lab: I’ve just released version 5. There are a number of nice improvements and bug fixes in here that should make it more enjoyable, more useful, and lay groundwork for some future enhancements.

- When the cluster starts, we wait for the storage service to reach NORMAL state, then move to the next node. This is in contrast to the previous behavior where we waited for 2 minutes after starting a node. This queries JMX directly using Swiss Java Knife and is more reliable than the 2-minute method. Please see packer/bin-cassandra/wait-for-up-normal to read through the implementation.

- Trunk now works correctly. Unfortunately, AxonOps doesn’t support trunk (5.1) yet, and using the agent was causing a startup error. You can test trunk out, but for now the AxonOps integration is disabled.

- Added a new repl mode. This saves keystrokes and provides some auto-complete functionality and keeps SSH connections open. If you’re going to do a lot of work with ECL this will help you be a little more efficient. You can try this out with ecl repl.

- Power user feature: Initial support for profiles in AWS regions other than us-west-2. We only provide AMIs for us-west-2, but you can now set up a profile in an alternate region, and build the required AMIs using easy-cass-lab build-image. This feature is still under development and requires using an easy-cass-lab build from source. Credit to Jordan West for contributing this work.

- Power user feature: Support for multiple profiles. Setting the EASY_CASS_LAB_PROFILE environment variable allows you to configure alternate profiles. This is handy if you want to use multiple regions or have multiple organizations.

- The project now uses Kotlin instead of Groovy for Gradle configuration.

- Updated Gradle to 8.9.

- When using the list command, don’t show the alias “current”.

- Project cleanup, remove old unused pssh, cassandra build, and async profiler subprojects.
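
The first item in the list above replaces a fixed 2-minute sleep with polling until the node reports NORMAL. The general pattern can be sketched in Python as below. This is only an illustration, not the wait-for-up-normal script itself: `wait_for_state` and its `get_state` callback are hypothetical names, and the JMX query the real script performs is left to whatever function the caller passes in.

```python
import time
from typing import Callable


def wait_for_state(
    get_state: Callable[[], str],
    target: str = "NORMAL",
    timeout_s: float = 300.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> float:
    """Poll get_state() until it returns target; return the seconds waited.

    get_state is a hypothetical callback standing in for the real state
    lookup (for example, a JMX query). Raises TimeoutError if the target
    state is not observed before timeout_s has elapsed.
    """
    start = clock()
    while True:
        if get_state() == target:
            return clock() - start
        if clock() - start >= timeout_s:
            raise TimeoutError(
                f"state never reached {target!r} within {timeout_s}s"
            )
        sleep(interval_s)
```

Compared with a fixed sleep, the loop returns as soon as the node is ready and fails loudly when it never becomes ready, instead of moving on after an arbitrary delay.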

The release has been published to the project’s GitHub page and to Homebrew. The project is largely driven by my own consulting needs and for my training. If you’re looking to have some features prioritized please reach out, and we can discuss a consulting engagement.

easy-cass-lab updated with Cassandra 5.0 RC-1 Support

I’m excited to announce that the latest version of easy-cass-lab now supports Cassandra 5.0 RC-1, which was just made available last week! This update marks a significant milestone, providing users with the ability to test and experiment with the newest Cassandra 5.0 features in a simplified manner. This post will walk you through how to set up a cluster, SSH in, and run your first stress test.

For those new to easy-cass-lab, it’s a tool designed to streamline the setup and management of Cassandra clusters in AWS, making it accessible for both new and experienced users. Whether you’re running tests, developing new features, or just exploring Cassandra, easy-cass-lab is your go-to tool.

easy-cass-lab now available in Homebrew

I’m happy to share some exciting news for all Cassandra enthusiasts! My open source project, easy-cass-lab, is now installable via a homebrew tap. This powerful tool is designed to make testing any major version of Cassandra (or even builds that haven’t been released yet) a breeze, using AWS. A big thank-you to Jordan West who took the time to make this happen!

What is easy-cass-lab?

easy-cass-lab is a versatile testing tool for Apache Cassandra. Whether you’re dealing with the latest stable releases or experimenting with unreleased builds, easy-cass-lab provides a seamless way to test and validate your applications. With easy-cass-lab, you can ensure compatibility and performance across different Cassandra versions, making it an essential tool for developers and system administrators. easy-cass-lab is used extensively for my consulting engagements, my training program, and to evaluate performance patches destined for open source Cassandra. Here are a few examples:

Cassandra Training Signups For July and August Are Open!

I’m pleased to announce that I’ve opened training signups for Operator Excellence to the public for July and August. If you’re interested in stepping up your game as a Cassandra operator, this course is for you. Head over to the training page to find out more and sign up for the course.

Streaming My Sessions With Cassandra 5.0

As a long time participant with the Cassandra project, I’ve witnessed firsthand the evolution of this incredible database. From its early days to the present, our journey has been marked by continuous innovation, challenges, and a relentless pursuit of excellence. I’m thrilled to share that I’ll be streaming several working sessions over the next several weeks as I evaluate the latest builds and test out new features as we move toward the 5.0 release.

Streaming Cassandra Workloads and Experiments

Streaming

In the world of software engineering, especially within the realm of distributed systems, continuous learning and experimentation are not just beneficial; they’re essential. As a software engineer with a focus on distributed systems, particularly Apache Cassandra, I’ve taken this ethos to heart. My journey has led me to not only explore the intricacies of Cassandra’s distributed architecture but also to share my experiences and findings with a broader audience. This is why my YouTube channel has become an active platform where I stream at least once a week, engaging with viewers through coding sessions, trying new approaches, and benchmarking different Cassandra workloads.

Live Streaming On Tuesdays

As I promised in December, I redid my presentation from the Cassandra Summit 2023 on a live stream. You can check it out at the bottom of this post.

Going forward, I’ll be live-streaming on Tuesdays at 10AM Pacific on my YouTube channel.

Next week I’ll be taking a look at tlp-stress, which is used by the teams at some of the biggest Cassandra deployments in the world to benchmark their clusters. You can find that here.

Cassandra Summit Recap: Performance Tuning and Cassandra Training

Hello, friends in the Apache Cassandra community!

I recently had the pleasure of speaking at the Cassandra Summit in San Jose. Unfortunately, we ran into an issue with my screen refusing to cooperate with the projector, so my slides were pretty distorted and hard to read. While the talk is online, I think it would be better to have a version with the right slides as well as a little more time. I’ve decided to redo the entire talk via a live stream on YouTube. I’m scheduling this for 10am PST on Wednesday, January 17 on my YouTube channel. My original talk was done in a 30-minute slot; this will be a full hour, giving plenty of time for Q&A.

Cassandra Summit, YouTube, and a Mailing List

I am thrilled to share some significant updates and exciting plans with my readers and the Cassandra community. As we draw closer to the end of the year, I’m preparing for an important speaking engagement and mapping out a year ahead filled with engaging and informative activities.

Cassandra Summit Presentation: Mastering Performance Tuning

I am honored to announce that I will be speaking at the upcoming Cassandra Summit. My talk, titled “Cassandra Performance Tuning Like You’ve Been Doing It for Ten Years,” is scheduled for December 13th, from 4:10 pm to 4:40 pm. This session aims to equip attendees with advanced insights and practical skills for optimizing Cassandra’s performance, drawing from a decade’s worth of experience in the field. Whether you’re new to Cassandra or a seasoned user, this talk will provide valuable insights to enhance your database management skills.

Uncover Cassandra's Throughput Boundaries with the New Adaptive Scheduler in tlp-stress

Introduction

Apache Cassandra remains the preferred choice for organizations seeking a massively scalable NoSQL database. To guarantee predictable performance, Cassandra administrators and developers rely on benchmarking tools like tlp-stress, nosqlbench, and ndbench to help them discover their cluster’s limits. In this post, we will explore the latest advancements in tlp-stress, highlighting the introduction of the new Adaptive Scheduler. This brand-new feature allows users to more easily uncover the throughput boundaries of Cassandra clusters while remaining within specific read and write latency targets. First though, we’ll take a brief look at the new workload designed to stress test the new Storage Attached Indexes feature coming in Cassandra 5.
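
To make the idea of finding a throughput boundary under a latency target concrete, here is a minimal Python sketch. It is not the Adaptive Scheduler's algorithm, which this excerpt does not describe. It is a plain doubling-then-bisection search. The function `find_max_rate` and its `measure_latency_ms` callback are hypothetical, and the sketch assumes latency does not decrease as the request rate rises.

```python
from typing import Callable


def find_max_rate(
    measure_latency_ms: Callable[[int], float],
    target_ms: float,
    start_rate: int = 1000,
    max_rate: int = 1_000_000,
    tolerance: int = 100,
) -> int:
    """Return a rate (ops/s) whose measured latency is within target_ms.

    measure_latency_ms is a hypothetical callback that runs a workload at
    the given rate and reports a latency figure (for example, p99 in ms).
    Returns 0 if even start_rate misses the target.
    """
    if measure_latency_ms(start_rate) > target_ms:
        return 0

    lo = hi = start_rate
    # Phase 1: double the rate until the target is missed or max_rate passes.
    while hi < max_rate:
        hi = min(hi * 2, max_rate)
        if measure_latency_ms(hi) > target_ms:
            break
        lo = hi
    else:
        return lo  # never missed the target up to max_rate

    # Phase 2: bisect between the last passing and first failing rate.
    while hi - lo > tolerance:
        mid = (lo + hi) // 2
        if measure_latency_ms(mid) <= target_ms:
            lo = mid
        else:
            hi = mid
    return lo
```

In practice each measurement is noisy and takes time to stabilize, so a real tool would need to average over a window and adjust the rate gradually, rather than trust single readings as this sketch does.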

AxonOps Review - An Operations Platform for Apache Cassandra

Note: Before we dive into this review of AxonOps and their offerings, it’s important to note that this blog post is part of a paid engagement in which I provided product feedback. AxonOps had no influence or say over the content of this post and did not have access to it prior to publishing.

In the ever-evolving landscape of data management, companies are constantly seeking solutions that can simplify the complexities of database operations. One such player in the market is AxonOps, a company that specializes in providing tooling for operating Apache Cassandra.

Benchmarking Apache Cassandra with tlp-stress

This post will introduce you to tlp-stress, a tool for benchmarking Apache Cassandra. I started tlp-stress back when I was working at The Last Pickle. At the time, I was spending a lot of time helping teams identify the root cause of performance issues and needed a way of benchmarking. I found cassandra-stress to be difficult to use and configure, so I ended up writing my own tool that worked in a manner that I found to be more useful. If you’re looking for a tool to assist you in benchmarking Cassandra, and you’re looking to get started quickly, this might be the right tool for you.

Back to Consulting!

Saying “it’s been a while since I wrote anything here” would be an understatement, but I’m back, with a lot to talk about in the upcoming months.

First off - if you’re not aware, I continued writing, but on The Last Pickle blog. There are quite a few posts there; here are the most interesting ones:

- 14 Things To Do When Setting Up a New Cassandra Cluster

- Apache Cassandra Performance Tuning - Compression with Mixed Workloads

- Garbage Collection Tuning for Apache Cassandra

- Analyzing Cassandra Performance with Flame Graphs

- Cassandra Time Series Data Modeling For Massive Scale

Now the fun part - I’ve spent the last 3 years at Apple, then Netflix, neither of which gave me much time to continue my writing. As of this month, I’m officially no longer at Netflix and have started Rustyrazorblade Consulting!

Building a 100% ScyllaDB Shard-Aware Application Using Rust

I wrote a web transcript of the talk I gave with my colleagues Joseph and Yassir at [Scylla Su...

Learning Rust the hard way for a production Kafka+ScyllaDB pipeline

This is the web version of the talk I gave at [Scylla Summit 2022](https://www.scyllad...

On Scylla Manager Suspend & Resume feature

Disclaimer: This blog post is neither a rant nor intended to undermine the great work that...

Renaming and reshaping Scylla tables using scylla-migrator

We have recently faced a problem where some of the first Scylla tables we created on our main production cluster were not in line any more with the evolved s...

Python scylla-driver: how we unleashed the Scylla monster's performance

At Scylla summit 2019 I had the chance to meet Israel Fruchter and we dreamed of working on adding **shard...

Scylla Summit 2019

I've had the pleasure to attend again and present at the Scylla Summit in San Francisco and the honor to be awarded the...

A Small Utility to Help With Extracting Code Snippets

It’s been a while since I’ve written anything here. Part of the reason is the writing I’ve done over on the blog at The Last Pickle. In the last few years, I’ve written about our tlp-stress tool, tips for new Cassandra clusters, and a variety of performance posts related to Compaction, Compression, and GC Tuning.

The other reason is the eight blog posts I’ve got in the draft folder. One of the reasons why there are so many is the way I write. If the post is programming related, I usually start with the post, then start coding, pull snippets out, learn more, rework the post, then rework snippets. It’s an annoying, manual process. The posts sitting in my draft folder have incomplete code, and reworking the code is a tedious process that I get annoyed with, leading to abandoned posts.

Scylla: four ways to optimize your disk space consumption

We recently had to face free disk space outages on some of our scylla clusters and we learnt some very interesting things while outlining some improvements t...

Scylla Summit 2018 write-up

It's been almost one month since I had the chance to attend and speak at Scylla Summit 2018 so I'm reliev...

Authenticating and connecting to a SSL enabled Scylla cluster using Spark 2

This quick article is a wrap up for reference on how to connect to ScyllaDB using Spark 2 when authentication and SSL are enforced for the clients on the...

A botspot story

I felt like sharing a recent story that allowed us to identify a bot in a haystack thanks to Scylla.

...

Evaluating ScyllaDB for production 2/2

In my previous blog post, I shared [7 lessons on our experience in evaluating Scylla](https://www.ultrabug.fr...

Accessing Private Variables in the JVM

In this post I’ll discuss an uncommonly used but useful technique of accessing variables and methods which have been declared as private in the JVM, using the Apache Commons Lang library to work around the restriction. The description from the project page reads:

The standard Java libraries fail to provide enough methods for manipulation of its core classes. Apache Commons Lang provides these extra methods.

A couple weeks ago I was working on a project that required parsing some CQL statements. There isn’t a standard parser separate from the Cassandra project at the moment, so I decided to pull in the entirety of cassandra-all from maven central. The parser in Cassandra isn’t really designed to be used as a library. In particular, the org.apache.cassandra.cql3.QueryProcessor has a parseStatement(String) call, but the ParsedStatement that’s returned doesn’t expose any of the private variables via getters. I felt particularly determined for some reason, so I decided to investigate a workaround.

Migration to Hugo

After almost five years of using Pelican as my static site generator, I’ve migrated to the Hugo tool. While I enjoyed Pelican and its flexibility, its performance started to bother me when building a site from scratch. Depending on what else was running on my laptop, a full build could take 15-20 seconds. This isn’t the end of the world, but in comparison Hugo takes less than 100 milliseconds.

If it was simply a matter of build time, I may not have really cared that much, but I’ve been using Hugo to build the site and documentation for Reaper, the open source repair tool we maintain at The Last Pickle.

Evaluating ScyllaDB for production 1/2

I have recently been conducting a quite deep evaluation of ScyllaDB to find out if we could benefit from this database in some of...

Working with gRPC, Kotlin and Gradle

Edit: The source code for this post is located on GitHub

Sometimes when I travel I end up trying to learn something completely new. For a while I was playing with Rust, Cap’n Proto, Scala, or I’d start a throwaway project at an airport and just tinker.

My passion is and has always been databases. I’ve maintained this blog for roughly a decade, starting with MySQL for the first part of my career but moving to Apache Cassandra several years ago, and am now a committer and member of the PMC.

I Am Still Writing!

If you were to take a look at my blog, you’d think I’d flipped a table and left the tech industry. Not the case at all. I’m still writing, but less frequently, and on the TLP blog. I intend to start writing here again, but the material will likely focus around topics other than Cassandra, since I’m already writing about it elsewhere. Here are the posts I’ve authored in the last 6 months or so:

Instaclustr Now Supporting Apache Cassandra 3.7 as LTS

Instaclustr announced on the Apache Cassandra user list that they are making their supported branch of the Cassandra 3.7 tick-tock release publicly available (see GitHub repo). Bug fixes that go into 3.8, 3.9, etc. will be backported to the Instaclustr LTS. You can read the blog post about the decision.

Some people I’ve talked to are concerned about having different commercial entities doing long term supported releases, and this concern is understandable. The obvious preference is for the project maintainers to handle this and make an official LTS available. The big concern here is that third party LTS could fracture the project in the long term.

Rustyrazorblade Radio, A Distributed System Podcast

I haven’t blogged in a while, which is a bummer because I was determined to write an article a week for the entire year. I haven’t even come remotely close to that goal.

I’ve recently switched jobs from DataStax to consulting with The Last Pickle, which has been pretty hectic. Add to that 3 presentations at the Cassandra Summit and the end result is very little time for personal projects.

Working Relationally With Cassandra

I’ve spent the last 4 years working in the big data world with Cassandra because it’s the only practical solution if you have a requirement to scale out, uptime is a priority, and you need predictable performance. I’ve heard different ways of describing where Cassandra fits in your architecture, but I think the best way to think of it is close to your customer. Think of the servers your mobile apps communicate with or what holds your product inventory.

Cassandra Dataset Manager Preview 1 Released

One of the problems of learning a new database is getting used to a new way of data modeling. PostgreSQL looks different from Redis, which is different from a graph, and is different from Cassandra.

Cassandra Dataset Manager aims to reduce the time spent in a frustrating trial-and-error process of learning proper data modeling techniques for Apache Cassandra and DataStax Enterprise. It provides curated data models designed by professionals with years of experience. Think of it as a package manager for Cassandra data models and sample data.